| URLs in this document have been updated. Links enclosed in {curly brackets} have been changed. If a replacement link was located, the new URL was added and the link is active; if a new site could not be identified, the broken link was removed. |

Shifting Sands: Science Researchers on Google Scholar, Web of Science, and PubMed, with Implications for Library Collections Budgets

Science and Engineering Collections Coordinator

christyh@ucsc.edu

Science and Engineering Librarian

caldwell@ucsc.edu

Santa Cruz, California

Abstract

Science researchers at the University of California Santa Cruz were surveyed about their article database use and preferences in order to inform collection budget choices. Web of Science was the single most used database, selected by 41.6%. Statistically there was no difference between PubMed (21.5%) and Google Scholar (18.7%) as the second most popular database. 83% of those surveyed had used Google Scholar and an additional 13% had not used it but would like to try it. Very few databases account for the most use, and subject-specific databases are used less than big multidisciplinary databases (PubMed is the exception). While Google Scholar is favored for its ease of use and speed, those who prefer Web of Science feel more confident about the quality of their results than do those who prefer Google Scholar. When asked to choose between paying for article database access or paying for journal subscriptions, 66% of researchers chose to keep journal subscriptions, while 34% chose to keep article databases.

Introduction

Keeping a finger on the pulse of user behavior is essential for the efficient allocation of scarce library resources. Many academic librarians worldwide are dealing with budget reductions (CIBER 2009) and are trying to make the cuts that least affect their users. The University of California Santa Cruz (UCSC) is experiencing budget reductions in both collections and services. In the area of collections, librarians hold differing opinions about the importance of continuing to pay for articles versus the importance of keeping the tools used to find those articles (such as article databases). One assertion made by librarians and faculty alike is that "all researchers use Google Scholar" and that "soon they won't use licensed databases." The literature reports that usage of fee-based A&I databases is in decline, or even in a "death spiral" (Tucci 2010). Indeed, usage statistics for many of the subject-specific databases on the UCSC campus have been decreasing year after year. Ben Wagner (2010), a librarian at the University at Buffalo, made a statement that rings true: "We are running a gourmet restaurant, but all our patrons are flocking to McDonalds." If so, libraries could cancel some of their "gourmet" databases in order to save some journal subscriptions. But is it really true that science researchers would be satisfied if their access were limited to free article databases such as Google Scholar and PubMed? Are researchers aware of the quality issues present in Google Scholar? Péter Jacsó (2009, 2010) has documented several metadata quality issues with Google Scholar. Jacsó points out that, because of a number of production choices Google Scholar has made, nonsense author names, incorrect publication years, and missing authors have been generated in large numbers, with a disastrous effect on the accuracy of the times cited data in this database. As Jacsó (2010) concludes: "[Google Scholar] is especially inappropriate for bibliometric searches, for evaluating the publishing performance and impact of researchers and journals." Google has cleaned up some specific instances of bad data but has not addressed the systemic problem.

In order to inform the choices librarians must make when significant collection budget reductions are required, the science researchers at the University of California Santa Cruz campus were surveyed about their article database use and preferences. A survey was selected because usage statistics from PubMed and Google Scholar are not provided, because data from a library's link resolver may underestimate usage when full text is available without link resolver intervention, and because qualitative as well as quantitative data were needed. The goal was to discover which article databases science researchers prefer and why, how much Google Scholar and other free article databases are being used, how use and preferences for Google Scholar compare to those for Web of Science (one big, costly competitor to Google Scholar), and how researchers would react to being given a choice between spending scarce library resources on keeping article databases or on keeping more journal subscriptions.

Methods

In mid-October of 2009 an e-mail invitation to participate in an online survey was sent to the science and engineering departmental e-mail lists at the University of California Santa Cruz. These e-mail lists are designed to reach full-time and part-time faculty members and lecturers (estimated total population 252), graduate students (estimated total population 829), and staff and postdocs (population unknown). Library employees were not eligible to participate. The survey was open for 18 days and ended on October 31, 2009. A copy of the survey questions is provided in the appendix. An incentive for participation was offered, which may have increased the response rate: those who participated were entered into a random drawing for one of two $100 Amazon.com gift cards. The gift cards were purchased using library research funds; no library materials funds were used for this project. A total of 220 survey responses were received in the following categories: graduate students 44.5% (98), faculty 28.6% (63), staff researchers 9.1% (20), postdocs 6.4% (14), undergrads 1.4% (3), self-identified as "other" 1.8% (4), unidentified 8.1% (18). These numbers represent a 25% survey response rate from science faculty, and an 11.8% response rate from science graduate students.
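The two response rates quoted above follow directly from the response counts and the estimated populations given in this paragraph. As a minimal sketch of that arithmetic (the dictionary and function names are illustrative only, not part of the study's tooling):

```python
# Response counts and estimated populations as reported in the Methods text.
responses = {"faculty": 63, "graduate students": 98}
population = {"faculty": 252, "graduate students": 829}


def response_rate(responded: int, invited: int) -> float:
    """Return the response rate as a percentage of the invited population."""
    return 100.0 * responded / invited


# Compute the rate for each group that has a known population estimate.
rates = {group: response_rate(responses[group], population[group])
         for group in responses}

for group, rate in rates.items():
    print(f"{group}: {rate:.1f}%")
```

Staff and postdocs are left out because their population size is reported as unknown, so no rate can be computed for them.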

The survey was administered and the results were analyzed using the professional version of the SurveyMonkey software available from www.surveymonkey.com. In addition, the calculator from Creative Research Systems available at http://www.surveysystem.com/sscalc.htm#factors was used to obtain confidence intervals appropriate for the sample sizes in order to assess the statistical validity of the percentage differences seen in the results. For the survey population as a whole a confidence interval of 7.2% was used, and confidence intervals for subsets of the population were obtained using the calculator as appropriate. Contingency table analysis was used to determine whether the relationships between various categories of the data (for example, the results from faculty vs. graduate students) were statistically significant. The online contingency table calculators available from the physics department of the College of Saint Benedict and Saint John's University at http://www.physics.csbsju.edu/stats/contingency.html were used. The p-value from each test (the probability of seeing an association at least this strong by chance if none existed) is reported in the captions of the figures; very small values, which indicate strong evidence of an association, are reported as p < 0.001.
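For readers who want to see how a confidence interval of this kind depends on sample size, the sketch below uses the standard textbook margin-of-error formula for a proportion, with an optional finite population correction. It is an assumption that the online calculator works this way, and the paper does not state the confidence level or population size that produced the 7.2% figure, so this code is not expected to reproduce that number exactly. The function name and the default values (worst-case p = 0.5, z = 1.96 for roughly 95% confidence) are illustrative choices.

```python
import math


def margin_of_error(n, population=None, p=0.5, z=1.96):
    """Margin of error (in percentage points) for a sample proportion.

    n          -- number of respondents
    population -- size of the population sampled from (None = treat as infinite)
    p          -- assumed proportion; 0.5 gives the widest (most cautious) interval
    z          -- z-score for the confidence level (1.96 is roughly 95%)
    """
    standard_error = math.sqrt(p * (1.0 - p) / n)
    if population is not None:
        # Finite population correction shrinks the interval when the
        # sample is a sizeable fraction of the whole population.
        standard_error *= math.sqrt((population - n) / (population - 1))
    return 100.0 * z * standard_error


# Smaller subsets of respondents give wider intervals.
for sample_size in (220, 98, 63):
    print(sample_size, round(margin_of_error(sample_size), 1))
```

The practical point is the one the text makes: percentages for subsets such as faculty alone carry a wider interval than percentages for all respondents together.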

It should be noted that during the study period the UCSC campus had a trial subscription to the multidisciplinary Scopus database, but it had not been marketed heavily. The lack of mention of Scopus in this study is not meaningful.

Results & Discussion

Database Use in General

Science researchers are still using article databases heavily. More than half of the researchers used them daily (52.3%) and another third used them every week (33.2%). This magnitude of use is supported by the high number of searches performed by UCSC researchers in the Web of Science database. This relatively small campus (with a student population of about 16,000) generated an impressive 246,883 searches in the Web of Science database in 2009.

Librarians' concern that researchers may be overly dependent on any one database (and thereby possibly missing crucial citations) may be unfounded. The survey showed that researchers used an average of 2.55 databases each, and this average number was consistent across the science disciplines and among both faculty and graduate students. Comments were also received about using more than one database consistently, or using different databases for different purposes.

Multidisciplinary vs. Subject-specific Databases

Because of the increasing focus on interdisciplinary research as well as the ever-present need for speed in all areas of science research, the hypothesis was that easy-to-use multidisciplinary databases like Google Scholar would be preferred over subject-specific databases like SciFinder Scholar or BIOSIS. This hypothesis was supported by the survey results. When asked to choose the type of database they used the most, researchers preferred multidisciplinary databases (59.9%) over subject-specific databases (32.2%), publisher-specific databases like Science Direct (4.1%), or preprint databases like arXiv (3.2%). Looking at faculty alone, 60.3% preferred multidisciplinary databases, while only 23.8% preferred subject-specific databases. The one significant exception to researchers preferring multidisciplinary databases was the biologists' preference for PubMed.

This preference for multidisciplinary databases among the study population as a whole and among faculty in particular is the opposite of one of the findings of the most recent Ithaka report, which surveyed faculty nation-wide in all disciplines, including responses from 791 science faculty. The Ithaka study (Schonfeld et al. 2010) found that faculty "tend to prefer electronic resources specific to their own discipline over those that cover multiple disciplines" when starting their research with "a specific electronic research resource/computer database" and that this pattern holds across the disciplines.

What accounts for this significant difference between the two studies? Perhaps it was the way questions were worded. In the Ithaka study, it seems possible that researchers who used Google Scholar may have chosen the "general purpose web search engine" category and not the "electronic resource covering various disciplines" category, and so some Google Scholar uses may not have been counted as multidisciplinary database uses. On the other hand, perhaps it was because the Ithaka study focused on the researchers' starting point for research and not on the databases used throughout the research process, or perhaps the researchers in this study are more interdisciplinary than those in the Ithaka study.

Specific Database Use

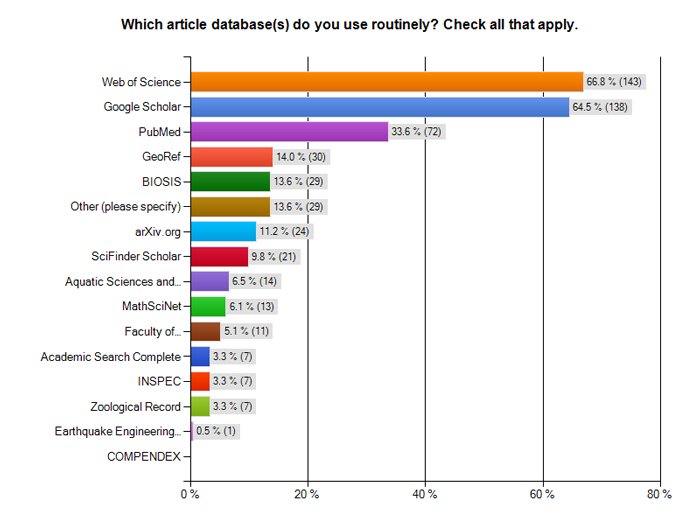

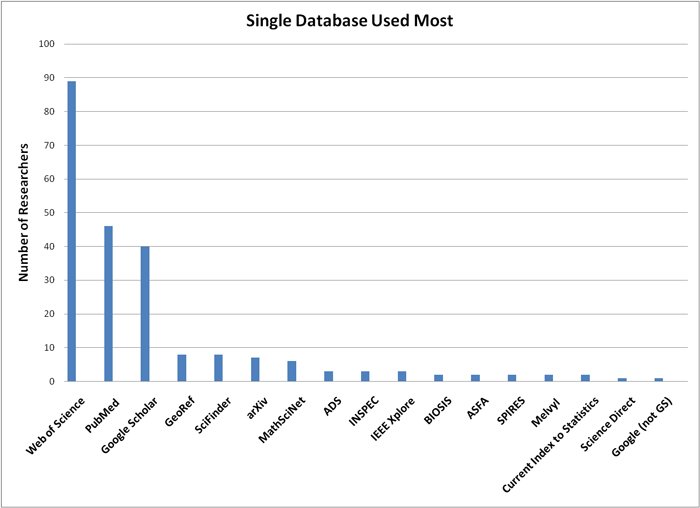

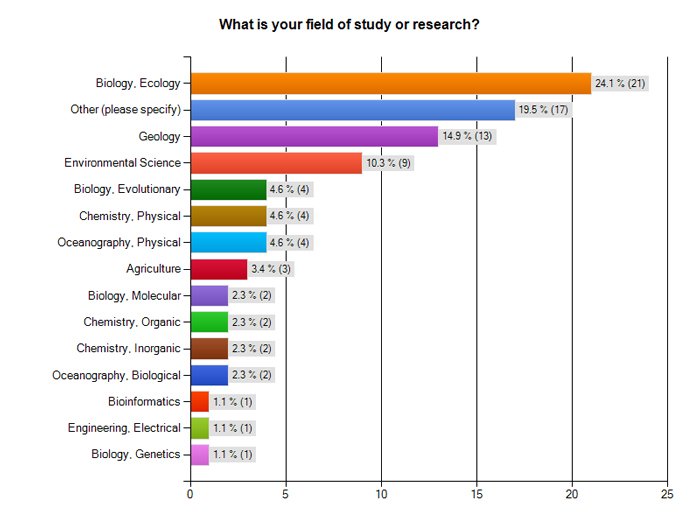

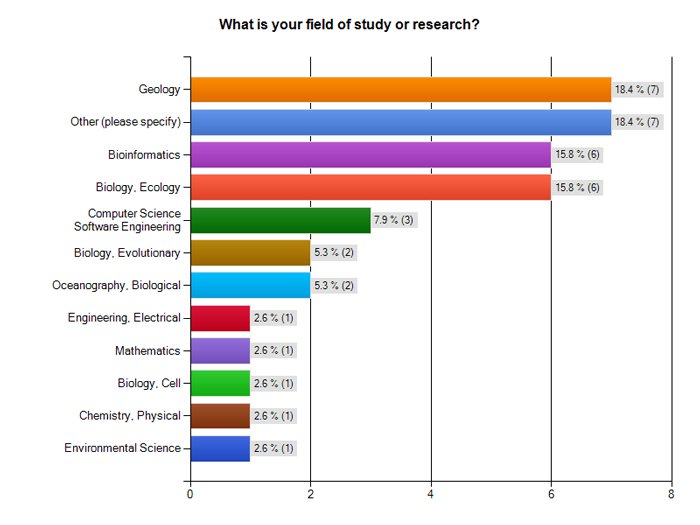

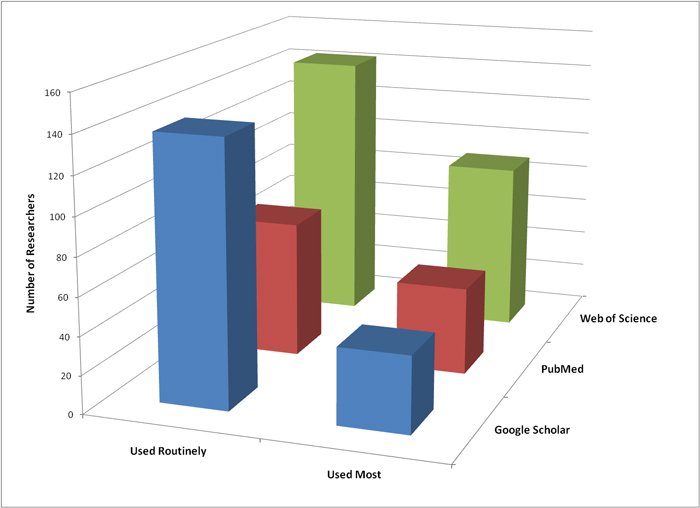

Two questions in the UCSC survey related to specific database use. One question asked which specific databases were used routinely and encouraged multiple responses as applicable (see Figure 1), while the other question asked researchers to choose the single database they used the most (see Figure 2). It was expected that Google Scholar and Web of Science would both score very highly in the "used routinely" category but it was not known which would be preferred in the "used most" category.

Figure 1: Databases used routinely by all researchers in the study. In this question, multiple database selections were encouraged. The "other" category contains sixteen databases each of which received five or fewer votes.

Figure 2: Single database used most by each researcher.

As predicted, Web of Science and Google Scholar tied for first place as most often mentioned in the "used routinely" category (with 66.8% and 64.4% of researchers voting for each respectively). This tie for first place was the same for all researchers, faculty alone, or graduate students alone. PubMed (used by 33.7% of all researchers) emerged as the only subject-specific database in this group to rival the two multidisciplinary databases in popularity.

When asked to choose the single database they used the most, the number of databases receiving substantial numbers of votes narrowed significantly to just Web of Science, Google Scholar, and PubMed. Web of Science emerged as the clear front runner, selected by 89 researchers (41.6%). Given the statistical margin of error there was no difference between PubMed (21.5%) and Google Scholar (18.7%) as the second most popular single database. There was no significant difference between faculty, graduate students, or all researchers together in the ranking of the top three databases used most. Together, these three databases accounted for almost all (81.8%) of the databases used most.
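The "no difference" reading for second place can be illustrated by placing the ±7.2% confidence interval from the Methods section around each reported percentage and checking whether the ranges overlap. This is a rough heuristic sketch rather than a formal significance test, and the helper names are illustrative.

```python
MARGIN = 7.2  # confidence interval (percentage points) used for all respondents

# Share of researchers naming each database as the one they used most.
shares = {"Web of Science": 41.6, "PubMed": 21.5, "Google Scholar": 18.7}


def interval(share, margin=MARGIN):
    """Return the (low, high) range around a reported percentage."""
    return (share - margin, share + margin)


def overlaps(a, b):
    """True when two (low, high) ranges share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


second_place_tied = overlaps(interval(shares["PubMed"]),
                             interval(shares["Google Scholar"]))
leader_is_clear = not overlaps(interval(shares["Web of Science"]),
                               interval(shares["PubMed"]))
combined_share = round(sum(shares.values()), 1)

print(second_place_tied, leader_is_clear, combined_share)
```

Under these assumptions the PubMed and Google Scholar ranges overlap while the Web of Science range sits clear of both, and the three shares together account for the 81.8% quoted above.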

Database Use by Discipline

It was hypothesized that multidisciplinary databases would be preferred over subject-specific databases because of the increasing focus on interdisciplinary research. This hypothesis was supported by the study population as a whole, and was supported by geologists, but was not supported by all biologists. The other disciplines in the study did not have large enough sample sizes to support examining them separately.

Biology: This was the largest discipline in the study group (83 participants), making biologists 37.7% of the study population as a whole. There are two major subject-specific databases in biology, PubMed and BIOSIS, as well as several other smaller or more narrowly focused databases such as Zoological Record and Aquatic Sciences and Fisheries Abstracts.

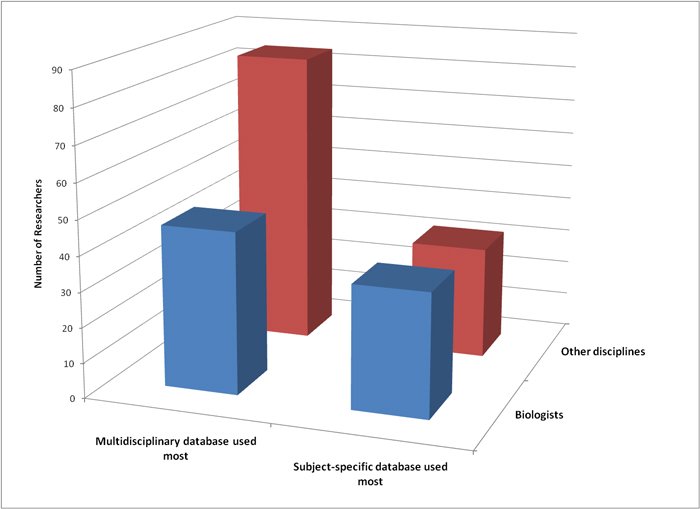

As shown in Figure 3, biologists favored subject-specific databases about as much as multidisciplinary ones. This is a proportionally higher preference for subject-specific databases than in the rest of the study population, and the difference is statistically significant.

Figure 3: Preference for type of article database used most comparing biologists with all other disciplines. There is a statistical relationship between discipline and type of database preferred. p = 0.023.

Biologists' higher preference for subject-specific databases was due to the popularity of PubMed. When asked to choose the one database they used most, the biologists in the study chose PubMed (38.6%) and Web of Science (35.2%) about equally as their single most used database, with Google Scholar coming in second place (18.2%).

When biology is further subdivided into just the sub-discipline of ecology, the preference for subject-specific databases no longer holds true. BIOSIS is the major subject-specific database covering ecology. When ecologists were asked to choose the one database they used the most, 21 (75%) said they used Web of Science the most, six used Google Scholar the most, and only one used BIOSIS the most. Among those surveyed, the major subject-specific database in the field (BIOSIS) was used as a secondary source much more often than a primary one, and instead multidisciplinary databases were primary. This result reveals the need for finely tuned studies at the appropriate sub-discipline level.

Geology: Geologists were the second largest group in the study, with 24 study participants. GeoRef is the most comprehensive subject-specific database in this field.

Geologists supported the hypothesis that multidisciplinary databases would be preferred. Seventeen of the geologists responding (70.8%) listed GeoRef as one of the databases they used routinely, among others. However, only four geologists (16.6%) listed GeoRef as the one database they use the most. The majority of geologists (83%) said they use multidisciplinary databases more than subject-specific ones, and when asked which single database they used most, 54% chose Web of Science. One geologist said he used Web of Science "every day, many times a day." Google Scholar was the second most used single database (29%).

One researcher who said he used Web of Science most said he used GeoRef "for older literature in professional publications (i.e., not journals)." Another who said she used Google Scholar most said, "However, GeoRef is better for finding more obscure articles that may not be on the web." Despite those testimonials in support of GeoRef, statistically geologists were no different than the entire study population in favoring multidisciplinary databases over subject-specific ones. GeoRef is used, but Web of Science and Google Scholar are more often cited as the one database geologists use most.

Web of Science and Google Scholar Use by Discipline: 60.3% of the researchers indicated that they relied most heavily on either Web of Science or Google Scholar and not on a subject-specific database, and these researchers came from almost every science discipline, as illustrated in Figures 4 and 5.

Figure 4: Top 15 disciplines of the researchers who listed Web of Science as the single database they used the most. N=89.

Figure 5: Disciplines of researchers who listed Google Scholar as the single database they used the most. N=40.

"Everyone Uses Google Scholar"

Librarians and faculty alike often assert that "all researchers use Google Scholar." Based on this study, this is essentially correct. 83% of researchers had used Google Scholar and an additional 13% had not used it but would like to try it. Of those who had used Google Scholar, almost three quarters of them (73%) found it useful. Only 3.8% of the researchers surveyed had not used Google Scholar yet and had no plans to try it.

Comparing the databases researchers used regularly with the single database they used most, it's apparent that a large proportion of the study's Google Scholar users are using it as a secondary source rather than as their primary one. 138 researchers said they use Google Scholar routinely along with other databases, but only 40 said they use Google Scholar as the single database they use most. This is in contrast to PubMed and Web of Science, each of which retained a larger proportion of their users in the "database I use most" group (see Figure 6).

Figure 6: The top three most highly used databases comparing how many researchers use them routinely along with other databases, and how many researchers use them as their single most used source. There is a statistical relationship between type of use and which database is used. p = 0.001.
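The contingency table analysis behind a caption like this one can be sketched as a chi-square test of independence. The Google Scholar counts (138 and 40) and the Web of Science "used most" count (89) come from the text; the remaining three counts are back-calculated from the reported percentages on the assumption of roughly 214 respondents, so they are approximations and not the authors' raw data. The function names are illustrative, and the closed-form p-value shown applies only to tables with two degrees of freedom.

```python
import math


def chi_square_independence(table):
    """Return (chi-square statistic, degrees of freedom) for a 2-D count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if row and column categories were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


def p_value_two_dof(statistic):
    """Upper-tail probability of a chi-square variable with exactly 2 d.o.f."""
    return math.exp(-statistic / 2.0)


# Rows: database; columns: (used routinely, used most).
counts = [
    [143, 89],  # Web of Science (143 is an estimate from 66.8%)
    [138, 40],  # Google Scholar (both counts reported in the text)
    [72, 46],   # PubMed (both are estimates from 33.7% and 21.5%)
]

stat, dof = chi_square_independence(counts)
p = p_value_two_dof(stat)
print(f"chi-square = {stat:.2f}, dof = {dof}, p = {p:.4f}")
```

With these approximate counts the result lands close to the p = 0.001 reported in the caption, which suggests the reconstruction is reasonable, though it should not be read as a replication of the original calculation.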

Researchers were not directly asked about using Google Scholar as an auxiliary database; however, some quotes from the essay questions may shed light on this topic:

"I generally use Google Scholar to find articles on particular subjects (mostly for preliminary research) and then Web of Science once I have a better idea of who the most important authors researching a topic are."

"If I already know the article I am looking for (title, author, year, etc) I find Google Scholar to be the best at quickly giving me access to the article. However, if I am searching a subject or the latest findings in a certain field, Web of Science is superior."

Understanding the Factors Contributing to Preference for Google Scholar or Web of Science

As noted earlier, 83% of the survey population had used Google Scholar and a majority had found it useful. But when asked to choose between Web of Science and Google Scholar, 47.5% said they preferred Web of Science and only 31.1% preferred Google Scholar (18.1% said they had no preference between the two, and 3.3% hadn't used at least one of them). Given the margin of error, this is either an even split between preference of Web of Science or Google Scholar, or a very slim advantage for Web of Science. This raises the question: what affects preference for one of these databases over the other?

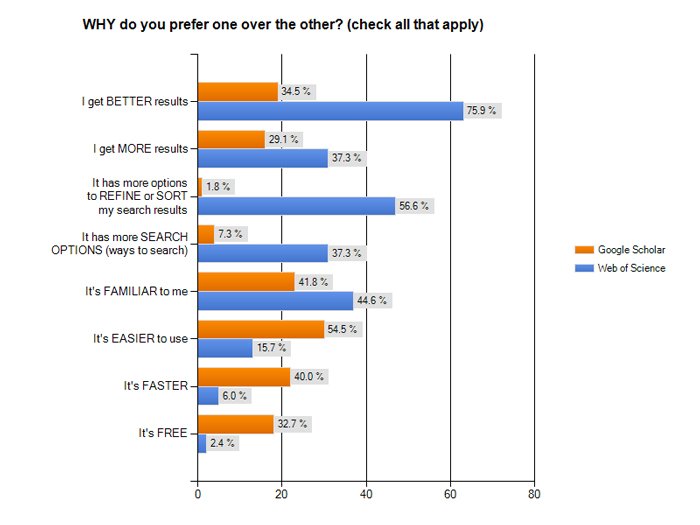

Quality & Precision versus Ease & Speed

One hypothesis is that researchers who preferred Web of Science did so for the quality and precision of the search results, while those who preferred Google Scholar were willing to sacrifice some quality for ease and speed. To test these assumptions, researchers were asked to choose from a list of options to describe why they preferred one database over the other (See Figure 7).

Figure 7: Why researchers prefer Google Scholar or Web of Science. N= 55 researchers who prefer Google Scholar. N= 83 researchers who prefer Web of Science.

As predicted, Google Scholar had a larger percentage of researchers preferring it because "it's easier to use" and "it's faster." Of those who preferred Google Scholar, 54.5% said "it's easier to use," while only 15.7% of those who preferred Web of Science said the same. The difference was even more pronounced in terms of speed, with 40% of those who prefer Google Scholar choosing "it's faster," as opposed to only 6% of researchers who prefer Web of Science.

The hypothesis that Web of Science is preferred for quality reasons was also supported. Quality and precision were represented by three options: "I get better results," "It has more options to refine and sort my search results," and "It has more search options (ways to search)." In all three cases researchers who preferred Web of Science chose these options in a larger proportion than researchers who preferred Google Scholar. The "better results" option is notable because it is the researcher's measure of quality. 75.9% of researchers who preferred Web of Science said it was because they get "better results," compared to only 34.5% of researchers who preferred Google Scholar. Some researchers offered comments that explain this:

"The references [in Web of Science] are more accurate for export to a reference editor. Most references off Google Scholar are horribly incomplete."

"Both [databases] are good. Sometimes I find better results with [Web of Science] than [Google Scholar], but [Google Scholar] is faster, so I usually use that first."

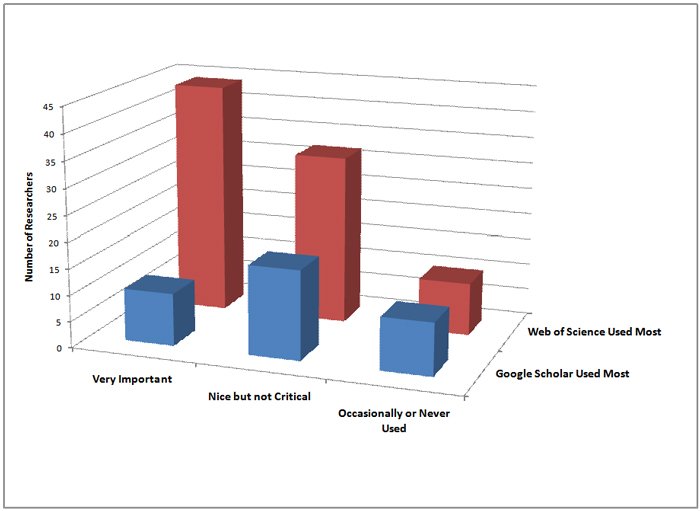

Times Cited

Being able to see the total number of citations for a particular paper is a metric used to evaluate the impact of a research paper. As such, times cited data can have repercussions for researchers' careers and reputations. It was expected that researchers would assign a high value to the times cited metric.

Both Web of Science and Google Scholar display times cited information for each article. Even though there are quality differences in how times cited information is captured between the two databases (Jacsó 2009, 2010), it was hypothesized that the lower quality of the Google Scholar times cited information was not perceptible to researchers. No significant difference in the perceived value of times cited data was expected between researchers who use Web of Science most and those who use Google Scholar most.

Surprisingly, the perceived value of times cited information in general was not as high as predicted. About a third of researchers thought times cited information was "very important," but another third only ranked it "nice to have but not critical" and the remainder rarely or never used it. There was no statistical difference between graduate students and faculty in how much they valued times cited data.

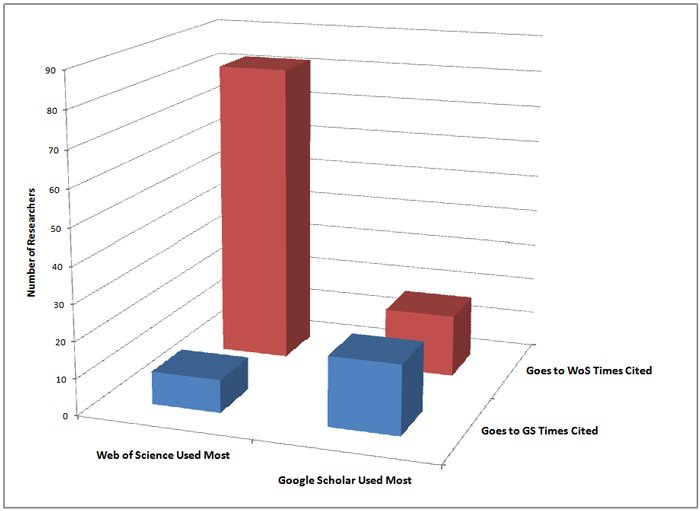

Another unexpected result was that researchers who primarily use Web of Science valued times cited data more highly than those who primarily use Google Scholar (see Figure 8). Even more interesting was the answer to the question "Where do you get your times cited information?"

Most Web of Science users stay in Web of Science, while almost half of Google Scholar users migrate to Web of Science to get their times cited information (See Figure 9). However, these associations do not prove causality. Perhaps researchers are perceiving quality differences between the two databases. Another explanation could be that Web of Science also offers the ability to sort times cited data, which makes it more useful for times cited information.

Figure 8: Value of the Times Cited feature, comparing those who use Web of Science most to those who use Google Scholar most. p = 0.005

Figure 9: Comparison of researchers' choice of primary database and where they obtain times cited data. p < 0.001

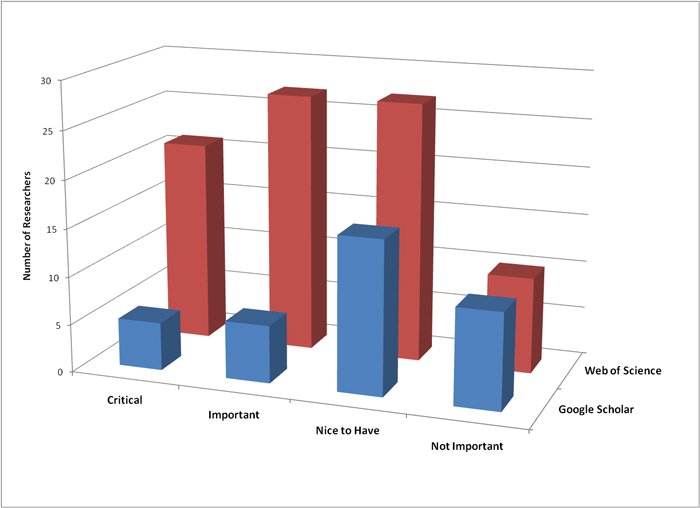

Ability to Sort by Times Cited

The ability to sort the results of a search by times cited is an option in Web of Science but not in Google Scholar. Being able to focus on the most highly cited research articles is a useful strategy to identify influential papers within a large set of results. It was expected that those who used Web of Science most would value this feature more highly than those who used Google Scholar most. As predicted, those who used Web of Science most (the database that offers the sort feature) ranked this feature "critical," "important," or "nice to have" more often than Google Scholar users (see Figure 10).

Figure 10: Value of the Sort by Times Cited feature, comparing those who use Web of Science most to those who use Google Scholar most. p = 0.038

It is possible that researchers who value the sort by times cited feature choose to use Web of Science in part because it has that feature. Another explanation could be that those who use Web of Science began to value the feature more as they became more exposed to it over time. It is also interesting to note that although Web of Science users value this feature, they did not rank it as "critical" in large numbers.

E-mail Alerts

E-mail alerts can be a useful tool for researchers, and it was expected that they would be highly valued by science researchers. This was not the case. Whichever database they used, most researchers still said they never find articles via database alerts or don't use them. The availability of alerts does not appear to be a strong factor in database choice between Web of Science and Google Scholar. (At the time the survey was conducted, Google Scholar did not offer e-mail alerts. Google announced on June 15, 2010 that e-mail alerts are now available from Google Scholar.) The majority of researchers surveyed said they never find articles/don't use e-mail alerts from databases (61.1%) or e-mail alerts from publishers (54.0%).

Why aren't alerts more popular? Based upon the responses to one of the essay questions, "How would you prefer to discover articles in your field?" there are several possible reasons. Some researchers aren't aware that database e-mail alerts exist, even if they prefer a database that offers them (in this example, Web of Science):

"It'd be cool to be able to subscribe to keywords in combination with individual journals and then get an e-mail about it. For example if the keywords 'mars' and 'water' came up one week in the Journal of Geophysical Research (planets), I would then get an e-mail with a link to the article."

Others just can't bear to receive any additional e-mail, and don't realize that many databases offer delivery options that can help with that:

"I guess it would be useful to start getting alerts. I tend to just do searches on a topic when I need them. I actually don't like getting barraged with e-mail so I'm not happy with alerting systems, but maybe if there's a way to do digests that would help."

And some researchers are dissatisfied with the quality of the results returned from e-mail alerts:

"I suppose I could use e-mail notifications, but I often find the search criteria return too many results. This is only mildly inconvenient when I am searching for something specific, but I'm already overwhelmed by the amount of e-mail I receive, so I think I'll stick to my current method."

"I find the most useful way I am getting articles is through saved searches at PubMed. This is a very good idea in theory, any time new article is published that my search would find I am e-mailed the new listing. In practice I often find the articles are older than I expected or I am e-mailed the same finds multiple times. A refinement of this would be an excellent way to catch articles in journals that are not on my weekly browsing list."

Limit to Review Articles

The ability to limit to review articles, which is a feature in Web of Science but not in Google Scholar, was only mildly valued by those surveyed. There was also no statistical relationship between the database used most (either Web of Science or Google Scholar) and how much the ability to limit to review articles was valued. Therefore it is not a likely factor contributing to researchers' preference for Web of Science.

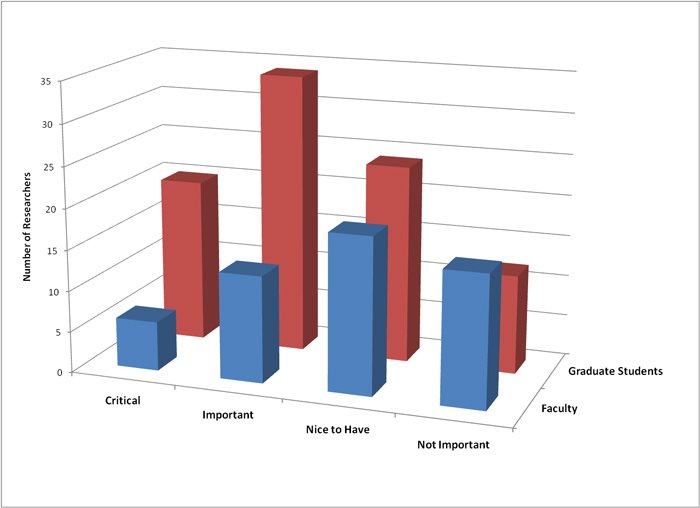

While this feature wasn't highly valued overall, graduate students did value this limit feature more than faculty (See Figure 11).

Figure 11: Value of the Limit to Review Articles feature, comparing faculty to graduate students. p = 0.021

Difficult Choices

In fiscal year 2009/2010, the University of California, Santa Cruz was forced to cut its collections budget by 20%. Because of the severity of the cut, the library had to consider canceling resources that received moderately high use since the low use titles had already been eliminated. This reality, plus the expectation that the budget may be unlikely to recover in the near future, forced a fresh look at all collection resources, but especially expensive items such as article databases.

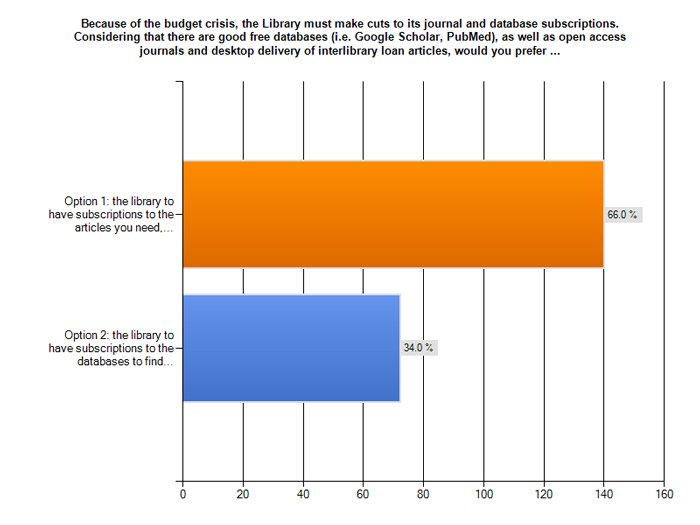

In the survey, researchers were asked to choose between two options in light of the budget crisis: an environment with fewer article databases but continued access to journal articles, or an environment with reduced or slower access to articles but more article databases (see Figure 12). The survey question reminded them that there are good free databases and also free open access journals. Because journals are so important to science researchers, it was expected that the majority of researchers would choose to retain access to journal subscriptions over databases and that those who use free databases most would be even more in favor of retaining journals.

Figure 12: Proportion of science researchers choosing to save article databases or to save journal subscriptions.

These predictions were correct. Overall, 66% of researchers chose to keep journal subscriptions, while 34% chose to keep article databases. Among those who used free databases most (Google Scholar, PubMed, Spires, ADS, or arXiv), the preference for journals was even stronger: 87% of these researchers favored keeping journal subscriptions over article database access.

Adding further support to the hypothesis that researchers favor paying for journals over paying for databases, the reverse was not true. Of those who use fee-based article databases the most, about half of them (45.7%) did not favor spending library money on article databases at the expense of journal access. Perhaps this means that researchers who rely on fee-based article databases are aware of, and ready to switch to, the alternative free article databases.

Conclusions

Article databases remain important to science researchers. Not all traditional fee-based databases (e.g., Web of Science) and not all subject-specific article databases (e.g., PubMed) are in a "death spiral." While it is not necessary for an academic library to subscribe to 20-30 science article databases when money is scarce, it is also not yet the case that a single multidisciplinary database (e.g., Google Scholar or Web of Science) will suffice. Since only three databases accounted for nearly all of the databases used most in this study, and since a majority of the researchers surveyed supported paying for journal subscriptions over paying for article database access, the number of databases that are absolutely necessary if catastrophic budget conditions force librarians to cut deeply appears to be smaller than librarians once thought.

Google Scholar, while very popular, is used as a secondary database more often than as a primary one. Researchers value the ease and speed of Google Scholar, but may also perceive its quality and precision limitations. Google Scholar is a significant database in science and should not be discounted, but it is not likely to replace Web of Science in either the researcher's or the librarian's estimation until its quality improves.

Wagner (2009) predicted that declining library budgets, continued growth of free finding aids, and increasingly seamless full-text access to articles will force libraries to cancel most article databases, with niche databases first on the chopping block. Without a doubt, article databases should not be sacred; they should be folded into a serials review process and considered for cancellation along with journal subscriptions. Researchers have primary and secondary databases. When these databases charge a fee, libraries can subscribe to both in good budget times, but in bad budget times it may be that only primary databases can be supported. The survey results lend support to Wagner's assessment of the future of niche or secondary databases. A nuanced approach will be needed, however, to distinguish the primary from the secondary, since there seem to be differences in how not only disciplines but also sub-disciplines use subject-specific and multidisciplinary databases. On the UCSC campus, most secondary article databases will be carefully scrutinized for cancellation.

This research was a snapshot in time. The title of this paper is "Shifting Sands" because the article database landscape is changing. Google Scholar is free now; will it continue to be free? ArXiv was free in 2009, but starting in 2010 the publisher asked institutional users for a voluntary fee. In this fast-changing environment, the best advice is to stay in close contact with your researchers as they change their information-seeking behaviors in response to changes in the discovery tools available to them.

Areas for Further Research

It would be useful to further explore the database preferences of additional individual science disciplines and sub-disciplines, using sample sizes large enough to draw statistically valid conclusions. Which disciplines besides biology still rely on the major subject-specific database in their field as the single database their researchers use most? Is there an effective method to distinguish primary from secondary databases that could be used routinely, one that is more accurate than examining use data provided by database vendors yet less invasive than a survey? In other words, can realistic yet effective best practices for ongoing article database assessment be developed? Another fruitful avenue for further research may be detailing the reasons many researchers use Google Scholar as a secondary database more often than as a primary one. Finally, in this rapidly changing environment it would be useful to repeat selected portions of this study periodically to monitor trends such as the preference for multidisciplinary databases over subject-specific ones, and the popularity and quality of Google Scholar.

Acknowledgements

The authors are grateful to UCSC Assistant Professor of Applied Mathematics Abel Rodriguez for his guidance with the statistical analysis of the data, and for his generosity with his time. The support of UCSC Professor of Molecular, Cell, and Developmental Biology William T. Sullivan is also appreciated.

References

CIBER Research Group. 2009. The Economic Downturn and Libraries: Survey Findings [Internet]. [Cited June 1, 2010]; Available from: http://site.ebrary.com/lib/librarycenter/docDetail.action?docID=80000784

Jacsó, P. 2009. Google Scholar's ghost authors. Library Journal 134(18):26-27.

Jacsó, P. 2010. Metadata mega mess in Google Scholar. Online Information Review 34(1):175-191.

Schonfeld, R.C., Housewright, R. 2010. Faculty Survey 2009: Key Strategic Insights for Libraries, Publishers, and Societies [Internet]. [Cited June 1, 2010]; Ithaka S+R. Available from: {https://cyber.law.harvard.edu/communia2010/sites/communia2010/images/Faculty_Study_2009.pdf}

Tucci, V. 2010. Are A&I services in a death spiral? Issues in Science and Technology Librarianship [Internet]. [Cited June 1, 2010]; 61. Available from: http://www.istl.org/10-spring/viewpoint.html

Wagner, A.B. 2009. A&I, full text, and open access: prophecy from the trenches. Learned Publishing 22(1):73-74.

Wagner, A.B. 2010. April 19, 1:45 pm. Voluntary support for arXiv. In: PAMNET [discussion list on the Internet]. [Alexandria (VA): Special Libraries Association, Physics Astronomy and Mathematics Division]; [Cited June 1, 2010]. [about 1p.]. Available from: PAMNET@LISTSERV.ND.EDU Archives available from: http://listserv.nd.edu/archives/pamnet.html

Appendix

The Article Database Usage Survey (PDF).
