Metrics to Measure Open Geospatial Data Quality

Assistant Professor

School of Library and Information Science

Indiana University

Indianapolis, Indiana

xiaji@iupui.edu

Abstract

This paper explores standards for measuring the quality of open geospatial data, with the purpose of optimizing data curation and enhancing information systems in support of scientific research and related activities. A set of dimensions for data quality measurement is proposed as a basis for developing appropriate metrics.

Introduction

Metrics have been developed to measure open data in various fields, e.g., scientific and business data, and for various stages and purposes of development, e.g., the sustainability of open data initiatives, the performance of open data, and the governance of data quality. It is widely accepted that standardization is critical to building any healthy information system and dataset, particularly at an early stage of the process. Because the open data movement has a relatively short history, common practices will help ensure efficient and effective communication among data providers, managers, analysts, service providers, and a broad array of users, as well as between dissimilar technological structures.

To facilitate measurement, definitions have been provided for different categories of metrics, and various measurement techniques have been created to serve varying purposes, types, and degrees of measures. These techniques range from quantitative evaluation to qualitative assessment. For example, a rating scale can summarize results at an aggregate level while being supported by the details of an assessment, and a set of schemas can represent an approved model.

Because data vary significantly in nature and amount among scientific disciplines, measures may need to be taken in a unique way under particular circumstances. Consequently, metrics can have distinctive forms and exclusive functions. Few prior studies have been devoted to developing workable metrics for open geospatial data, whether concerning the creation, acquisition, retrieval, and use of the data or data curation and management, either technically or politically. However, geospatial data are by nature characterized by space and location, combining maps and coordinates, which makes them radically different from other types of data. These inseparable features make data openness in geoscience an additional challenge for everyone involved.

This paper examines standards of measurement for assessing the quality of open geospatial data, with the purpose of improving information infrastructure in support of scientific inquiry. It reviews some of the existing metrics created for measuring geospatial and other types of data and, building on them, develops new content and structure adapted to the unique features of open geospatial data creation, acquisition, management, and use. A well-defined standard for data quality may also apply to measuring other stages and types of open data development, although minor variations may exist.

Background

Research in Data Quality Assessment

The importance of maintaining high-quality data to support business and scientific work has been extensively recognized (e.g., Gray 2010; Loshin 2006). Using metrics to test data quality has become a regular practice in many academic and professional fields. This is especially true in business, where data metrics have been developed into analytical tools as an integral part of case management applications.1 Data quality measures are either taken on an ad hoc basis to solve individual business problems or designed around fundamental principles to help advance usable data quality metrics (Huang et al. 1998; Laudon 1986; Palmer 2002; Pipino et al. 2002). Various software applications have been created to automate the evaluation process. Outside business, metrics have also been widely applied to quantify data performance and research outcomes. An example is the series of assessments of Research Assessment Exercise (RAE) ratings in the United Kingdom, which calculate academic excellence by incorporating indexes such as degrees granted, funding received, and articles published into predesigned formulas as performance metrics (Harley 2000; McKay 2003; Taylor 1995). The assessment of quantitative data plays a major role in decision making about data and research quality.

Not only is quantitative analysis valuable for examining data quality, but alternative perspectives have also proven helpful in such assessments (Ballou & Pazer 1985; Redman 1996; Wand & Wang 1996; Wang 1998). A multi-dimensional approach to data quality measurement highlights diverse practices, among which subjective perception underscores the need for stakeholders' personal judgments based on their experience with, and needs for, the data. Questionnaires may be used as measurement instruments so that individuals' opinions become sources of evidence for decision making. Likewise, RAE ratings can also be conducted by consulting the results of peer review. To some extent, the "operation of peer review is at the heart of RAE, and responsible for the positives and negatives it produces" (McKay 2003, p. 446); at the very least, subjective assessment can be a ready supplement to quantitative metrics.

The diversification of data quality measurement is also demonstrated across data media and data gathering techniques. Data gathering techniques concern the method by which data are acquired and whether the acquisition process yields qualified data; e.g., data acquired by sensors may be subject to complicated communication disruptions (Lindsey et al. 2002). Data media concern testing data quality in different data formats, e.g., image data (Brown et al. 2000; Domeniconi et al. 2007). Today, DNA data provide essential materials for biomedical investigations, and verification of such materials, many of which are imaged at multiple wavelengths, is subject to considerable uncertainty, possibly due to unexpected fluorescent effects. Further, verification can be complicated by the inclusion of 2- and 3-dimensional visual representations of the images.

In addition to general studies of data quality measurement, many projects have addressed data quality assessment in an open access environment (e.g., Kerrien et al. 2006). Distantly related studies evaluate the use of open access articles by examining the impact of open access on citation counts (Evans & Reimer 2009; Harnad 2002; Xia et al. 2011). However, even though open access metrics have found a niche in the analysis of publication performance, they can hardly be extended to open data unless the output of data analyses is included in the category of open data, as proposed by Brody et al. (2007). These authors also argue that open data can be quantified by weighing their download and citation popularity, which indirectly reflect usage.

Dimensions and Definitions of Data Quality Metrics

The purposes of data quality metrics are multi-faceted. Simply speaking, they can (a) set information quality objectives for data creators and managers to achieve, (b) establish standards for data to be produced, acquired and curated, and (c) provide measurement techniques for quality to be judged. Such metrics consist of rules for defining the thresholds of meeting appropriate professional expectations and governing the measurement of the aspects and levels of data quality. In order to configure and organize the rules, an underlying structure is required to specify the transformation process from data quality expectations to a group of workable assertions and to prevent unprofessional conduct.

Defining dimensions of data quality metrics can meet these goals. The dimensions are typically classified according to accepted standards of scholarly activity within an academic discipline, as well as in other related disciplines that use the data. Researchers have developed various sets of data quality dimensions (e.g., Loshin 2006; MIKE2.0, 2011). These sets differ from each other, reflecting either the academic field(s) in which the data are regulated or the understanding and preferences of individual researchers. Table 1 compares two sets of dimensions from earlier studies: the first set has its own categories of accessibility and validity, while the second has timeliness, currency, conformance, and uniqueness, which the first set does not propose.

| Dimension 1 | Description | Dimension 2 |
|---|---|---|
| Accuracy | Referring to the level that data is accurately represented | Accuracy |
| Consistency | Referring to single representation of data | Consistency |
| Completeness | Referring to missing key information | Completeness |
| Integrity | Referring to data relations when a broken link is presented | Referential Integrity |
| Accessibility | Referring to data accessibility & understandability | |
| | Referring to data availability on time | Timeliness |
| Validity | Referring to data format & valid values | |
| | Referring to degree of data current with the world | Currency |
| | Referring to data consistent with domain values | Conformance |
| | Referring to relations of data & applications | Uniqueness |

Table 1. Comparison of two sets of data quality dimensions

(Sources: Dimension 1 - MIKE2.0, 2011, Dimension 2 - Loshin 2006)

Not only are data metrics dimensions categorized differently among fields or scholars, but their definitions also vary, mostly according to the type of data. Table 1 gives the simplest form of definition for each dimension listed. In practice, variations exist; e.g., integrity may be described differently with adjusted measurement strategies, and accuracy may be calculated at different levels of granularity.
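Table 1 names the dimensions but leaves the calculation open. As a minimal sketch, assuming records stored as Python dictionaries and a hypothetical set of key fields (`KEY_FIELDS`), completeness and validity can each be scored with the simple-ratio form described by Pipino et al. (2002): one minus the share of undesirable outcomes. The field names and sample records are illustrative only.

```python
from typing import Callable, Iterable, Mapping

Record = Mapping[str, object]

# Hypothetical key fields for the completeness check; real data sets define their own.
KEY_FIELDS = ("id", "name", "lat", "lon")


def simple_ratio(records: Iterable[Record], is_undesirable: Callable[[Record], bool]) -> float:
    """Score = 1 - (undesirable outcomes / total outcomes); an empty set scores 1.0."""
    rows = list(records)
    if not rows:
        return 1.0
    bad = sum(1 for r in rows if is_undesirable(r))
    return 1.0 - bad / len(rows)


def missing_key_field(r: Record) -> bool:
    """Completeness: a record is undesirable if any key field is absent or empty."""
    return any(r.get(f) in (None, "") for f in KEY_FIELDS)


def invalid_latitude(r: Record) -> bool:
    """Validity: a record is undesirable if its latitude is not a number in [-90, 90]."""
    lat = r.get("lat")
    return not isinstance(lat, (int, float)) or not -90 <= lat <= 90


records = [
    {"id": 1, "name": "Well A", "lat": 39.77, "lon": -86.16},
    {"id": 2, "name": "", "lat": 39.80, "lon": -86.10},        # empty name
    {"id": 3, "name": "Well C", "lat": 95.00, "lon": -86.20},  # impossible latitude
    {"id": 4, "name": "Well D", "lat": 39.70, "lon": -86.00},
]

print("completeness:", simple_ratio(records, missing_key_field))  # 0.75
print("validity:", simple_ratio(records, invalid_latitude))       # 0.75
```

The same `simple_ratio` function can serve other dimensions by swapping in a different predicate, which keeps each dimension's rule separate from the scoring arithmetic.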

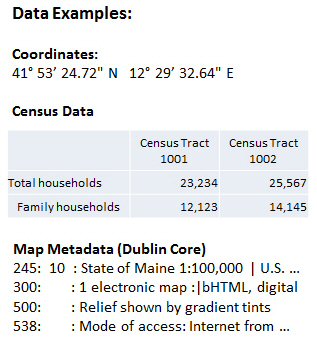

Geospatial Data

Geospatial data are also called geo data, geographic data, spatial data, or GIS data. In the simplest definition, geospatial data are data that contain spatial elements with location characteristics (USGS 2009). The definition can be expanded to include the spatial, temporal, and thematic aspects of data that allow any entity to be pinpointed in space, time, and topic. Therefore, thematic data with non-geometric features are also considered geospatial data so long as they can be linked to spatial base data and are useful for spatial analysis. The following types represent the major categories of geospatial data, which are also illustrated in Figure 1.

- Maps, including scanned images, digitized maps, satellite images, etc. Digital maps for preservation, display, and analysis in a GIS (Geographic Information System) are of two basic types, namely vector maps and raster maps.

- Coordinates, normally Eastings and Northings or latitudes and longitudes (i.e., x and y coordinates), sometimes with an additional coordinate (e.g., a z coordinate), used to uniquely determine the position of a point or other geometric element.

- Attributes, which relate to coordinates and help analyze thematic features and display the analytical results either as statistical presentations (e.g., charts and tables) or as map visualizations. Zip codes, addresses, population census data, etc. fall into this category.

- Metadata, i.e., data about data, mainly describing electronic maps in the geospatial data system using the Dublin Core standard and many other standards.
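To make the four categories concrete, the sketch below shows one possible record layout that ties coordinates, attributes, metadata, and a reference to a source map together, with two basic checks: a coordinate range test and a metadata completeness test. The class name `GeoFeature`, its fields, and the choice of five Dublin Core elements as "required" are hypothetical assumptions for illustration, not a schema defined by any standard.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Assumed subset of the 15 Dublin Core elements treated as required here.
REQUIRED_DC = ("title", "creator", "date", "format", "coverage")


@dataclass
class GeoFeature:
    """Hypothetical record layout tying together coordinates, attributes, metadata, and a map source."""
    lat: float                                                    # coordinate: latitude, decimal degrees
    lon: float                                                    # coordinate: longitude, decimal degrees
    attributes: Dict[str, object] = field(default_factory=dict)  # thematic data (zip code, census counts, ...)
    metadata: Dict[str, str] = field(default_factory=dict)       # descriptive metadata keyed by element name
    map_ref: Optional[str] = None                                 # optional pointer to a source map or image

    def coordinates_valid(self) -> bool:
        """Latitude must lie in [-90, 90] and longitude in [-180, 180]."""
        return -90.0 <= self.lat <= 90.0 and -180.0 <= self.lon <= 180.0

    def missing_metadata(self) -> List[str]:
        """Required Dublin Core elements that are absent or empty."""
        return [e for e in REQUIRED_DC if not self.metadata.get(e)]


site = GeoFeature(
    lat=39.7684,
    lon=-86.1581,
    attributes={"zip": "46202"},
    metadata={"title": "Sample point", "creator": "Example project"},
)
print(site.coordinates_valid())   # True
print(site.missing_metadata())    # ['date', 'format', 'coverage']
```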

Figure 1. An illustration of geospatial data

Some individual data types, and their combination, are unique to geoscience and related spatial analysis. To deal with the special features of geospatial data, the Open Geospatial Consortium (OGC)2 and the International Organization for Standardization (ISO)3 have defined technical standards for data dissemination and processing. ISO identifies several data models and operators, including spatial schema, temporal schema, spatial operators, and rules for application schema, to facilitate data interoperability. It uses a structured set of standards to specify methods, instruments, and services for the management of geospatial data, focusing on data definition, accessibility, presentation, and transfer.

"In general, these standards describe communication protocols between data servers, servers that provide spatial services, and client software, which request and display spatial data. In addition, they define a format for the transmission of spatial data" (Steiniger & Hunter n.d., p. 3).

Both OGC and ISO draw a large picture of geospatial data management and discuss the standards in the context of geographic service provision and application implementation. Although the significance of accommodating geospatial data in a geographic information infrastructure is evident, developing metrics requires a narrower focus on data quality itself. Furthermore, that focus should be on how metrics can better serve the needs of data quality measurement when open access is the theme of discussion and open geospatial data come into play.

Open Geospatial Data Quality: A Metrical Approach

Dimensions and Definitions

Let us start with the dimensions and definitions of open data quality metrics. Jonathan Gray (2010), a senior staff writer for the OGC, calls for an OGC consensus process and consensus-derived geoprocessing interoperability standards. He is particularly enthusiastic about the open access possibilities of geospatial data. His 17 reasons why scientific geospatial data should be published online using OGC standard interfaces and ISO standard metadata, listed in Table 2, provide an opportunity to consider possible dimensions for the metrics of open geospatial data quality.

| Dimension Category | Description |
|---|---|
| Data transparency | In the domain of data collection methods, data semantics, & processing methods |
| Verifiability | Validating the relationship between data & research conclusions |
| Useful unification of observations | Characterizing data acquisition mechanisms |
| Data sharing & cross-disciplinary studies | Data documentation |
| Longitudinal studies | Long-term consistency of data representation through data curation |
| Re-use | Re-purposing data in an open access environment |
| Planning | Open data policies for data collection & publishing |
| Return to investment | An open access effect |
| Due diligence | Open data policies for research funding institutions to perform due diligence |
| Maximizing value | Data usage promoting data value |
| Data discoverability | Data retrieval becoming easier with the support of standard metadata |
| Data exploration | Frequency & speed of data access |
| Data fusion | Open data enhancing data communications |
| Service chaining | Open data refining chaining of web services for data reduction, analysis & modeling |
| Pace of science | Open data speeding scientific discovery |
| Citizen science & PR | Bridging science & the public, encouraging involvement of amateurs in research |
| Forward compatibility | Open science supporting data storage, format & transmission technologies |

Table 2. Gray's 17 reasons why geospatial data should be open and standard (Source: Gray 2010)

The list makes it apparent that Gray's attention is more on data openness than on data quality. Reasons such as return to investment and citizen science & PR refer to open data usage, while service chaining and pace of science emphasize the role of openness in a data system. On the other hand, some of the reasons do suggest modifications to the categories of metrics dimensions listed in Table 1 and are applicable to the quality measurement of open geospatial data, which entails two inseparable components: openness and spatial character. The following categories are selected and defined to meet these specific requirements.

| Accuracy: | Accuracy is critical to geospatial data quality because the data represent location. Among other issues, every method of map projection distorts the Earth's surface in some fashion. Georeferencing aligns spatial entities to an image, which requires accurate transformation of the data. |

| Accessibility: | When open access is taken into consideration, data accessibility and understandability take on a new meaning. For geospatial data, this can also mean evaluating the sources of availability, e.g., satellite imagery from government agencies such as NASA versus imagery from commercial companies. |

| Authority: | The source of geospatial data is an indicator of quality. Authoritative sources include research institutions and the government; examples are population counts, census tracts, and satellite imagery provided by the government. |

| Compatibility: | Newer devices should be able to retrieve, read, understand, and interpret data generated for older devices, and vice versa. Data also need to be compatible across different technological systems. |

| Completeness: | Key data fields and the other types of data supporting spatial analysis and presentation should be associated with each other to ensure the usability and appropriateness of data values. |

| Consistency: | Data values from various sources that refer to the same geospatial feature need to be consistent. A geospatial database may contain all available map scales and projections; preserving some properties of the Earth's near-spherical surface should not come at the expense of other properties. |

| Currency: | Changes to geospatial data must be kept up to date, both on maps and in text. For example, even personal GPS devices need frequent updates to reflect current road conditions. |

| Discoverability: | Closely tied to interoperability, discoverability supports metadata harvesting. Metadata standards and the quality of metadata mapping are key to maintaining effective communication over the internet, and controlled vocabularies further enhance data communication. |

| Integrity: | Data need a complete, whole structure with rules that relate data to one another. Broken links between related data are a concern for the online identification and management of data. |

| Legibility: | Both machines and humans should be able to capture, read, interpret, and use available data. An example is the recognition of satellite imagery, where resolution variations can reflect the effective bit depth of the sensor, optical and systemic noise, the altitude of the satellite's orbit, etc. Resolution can be spatial, spectral, temporal, or radiometric. |

| Repurposing: | This is more of an open access issue. However, reusability helps verify the quality of geospatial data. |

| Transparency: | Transparency in the process of data creation and acquisition can help confirm that data meet high standards. |

| Validity: | The correctness of data formats and values is part of the criteria for data quality measures. |

| Verifiability: | High-quality data support the best research results, assuming that research designs meet professional standards. |

| Visualization: | The presentation characteristics of maps may be quantified by their color, symbols, format, and index. |
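The Currency category can be given a numeric form. The sketch below adapts the timeliness expression reported by Pipino et al. (2002), max(1 - currency/volatility, 0) raised to a sensitivity exponent s, where currency is the age of the data and volatility is how long the data stay valid. The 90-day validity window for a road layer is a hypothetical assumption chosen to echo the GPS example above.

```python
def timeliness(currency: float, volatility: float, s: float = 1.0) -> float:
    """Timeliness = max(1 - currency / volatility, 0) ** s.

    currency:   age of the data (e.g., days since the last update)
    volatility: how long the data remain valid (same time unit as currency)
    s:          sensitivity exponent; s > 1 penalizes ageing data more sharply
    """
    if volatility <= 0:
        raise ValueError("volatility must be positive")
    return max(1.0 - currency / volatility, 0.0) ** s


# Hypothetical road-network layer assumed to stay valid for 90 days.
print(timeliness(30, 90))        # about 0.667
print(timeliness(45, 90, s=2))   # 0.25
print(timeliness(120, 90))       # 0.0 (older than its validity window)
```

Choosing volatility per data type (short for road conditions, long for geology maps, for instance) is what lets one formula cover layers that age at very different rates.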

Measurement

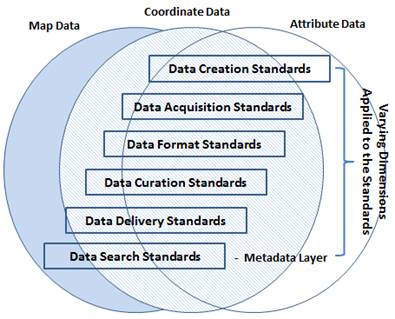

OGC has identified several data quality standards, namely data format standards, data delivery standards, and data search standards, which serve as a good starting point for formulating workable metrics to assess open geospatial data quality. The conceptual model in Figure 2 shows the key standards involved in the measurement and the relationships among the various elements of the process. A brief explanation of these relationships follows.

The basic data types, namely maps, coordinates, and attributes, are considered typical geospatial data. Metadata, although an independent data type in their own right, describe all other types of data and play an important role in measuring data deliverability, as regulated by the data search standards in the Figure 2 diagram. This measure of data deliverability is a high priority when open data are in place because it certifies interoperability across web domains. The other standards identified apply to all three basic data types. Together with the data search standards, these standards measure the major stages of data development and access, i.e., data creation, acquisition, management, and retrieval. In this proposed process, data format standards stress the implementation of markup languages, data curation standards underline the application of data transformation (e.g., upgrading file formats), and data delivery standards highlight web mapping services.
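Web mapping services are commonly standardized through the OGC Web Map Service (WMS) interface, so one concrete check under the data delivery standards is whether a dataset can be requested through a well-formed WMS call. The sketch below only builds a WMS 1.3.0 GetMap request URL; the endpoint `https://example.org/wms` and the layer name `landcover` are hypothetical, and a real check would also need to send the request and inspect the response.

```python
from urllib.parse import urlencode


def wms_getmap_url(endpoint, layer, bbox, width=512, height=512,
                   crs="EPSG:4326", image_format="image/png"):
    """Build an OGC WMS 1.3.0 GetMap request URL.

    bbox is given in the axis order of crs; for EPSG:4326 under WMS 1.3.0
    that is (min_lat, min_lon, max_lat, max_lon).
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",          # empty string requests the server's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": image_format,
    }
    return endpoint + "?" + urlencode(params)


# Hypothetical endpoint and layer name, for illustration only.
url = wms_getmap_url("https://example.org/wms", "landcover", (39.6, -86.3, 39.9, -85.9))
print(url)
```

Keeping the parameters in one dictionary makes it easy to test that every mandatory GetMap parameter is present, which is itself a small, automatable delivery-standard check.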

Data quality dimensions, as shown in the diagram, apply to every set of standards. However, because the standards target different measures, not all dimension categories are applicable to every standard. It would not be surprising if accessibility were left out of the measure of data acquisition standards, although it is critical for evaluating data against the data delivery standards. On the other hand, some dimension categories are valid for several standards; e.g., legibility can be used for data creation, data acquisition, and data format standards, and consistency can be found in the application of multiple standards.

Figure 2. A conceptual model of quality metrics for open geospatial data

Measurement can be performed using various techniques. Just as businesses have developed software tools to manage data quality, geoscience has a sophisticated system that uses GIS technologies to provide qualified spatial data, georeference imagery sources for accurate map presentation, test data compatibility, and so forth. Digital maps in vector and raster form can be verified, preserved, and analyzed by GIS applications in general, although ESRI's products, e.g., ArcGIS, have dominated the practice. Most such tools have enhanced abilities to process satellite and similar imagery, which can also be handled by remote sensing systems.
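Georeferencing is one place where accuracy becomes directly measurable. A common (but not the only) way to georeference a raster is a six-coefficient affine transform; the sketch below uses the coefficient ordering of GDAL's geotransform and then reports the largest distance between transformed ground control points (GCPs) and their surveyed positions. The 30 m grid origin and the GCP values are hypothetical.

```python
import math


def pixel_to_map(gt, col, row):
    """Map a pixel position to map coordinates with a six-coefficient affine geotransform.

    gt follows GDAL's ordering: (x of upper-left corner, pixel width, row rotation,
    y of upper-left corner, column rotation, pixel height; negative for north-up images).
    """
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def max_gcp_residual(gt, gcps):
    """Largest distance between transformed ground control points and their surveyed positions.

    Each GCP is (col, row, surveyed_x, surveyed_y) in the same map units as gt.
    """
    return max(math.dist(pixel_to_map(gt, c, r), (x, y)) for c, r, x, y in gcps)


# Hypothetical north-up 30 m grid in a projected (metre-based) coordinate system.
gt = (500000.0, 30.0, 0.0, 4400000.0, 0.0, -30.0)
print(pixel_to_map(gt, 10, 20))                      # (500300.0, 4399400.0)
gcps = [(10, 20, 500300.0, 4399400.0), (0, 0, 500003.0, 4400004.0)]
print(max_gcp_residual(gt, gcps))                    # 5.0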

With regard to open data, a large group of GIS web services comply with established data standards to facilitate data communication across domains on the internet. The recent introduction of Spatial Data Infrastructures (SDI) has integrated the functionality of GIS systems (both server and desktop applications), web map services, and spatial database management systems to standardize data administration. With the support of open source solutions, free geospatial data management tools allow "the distribution of a proven SDI architecture within legal constraints… and support a wide range of industry standards that ease interoperability between SDI components" (Steiniger & Hunter n.d., p. 1).

In a standard spatial database management system, data profiling uses a group of algorithms to statistically analyze and appraise the quality of data values within a data set. Profiling also examines relationships between value collections within and across geospatial data sets. For the purpose of maintaining data quality, data profiling serves as an effective metric for measuring data integrity, consistency, and validity.
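The function described above, commonly called data profiling, can be sketched in a few lines. The example below is a minimal, database-independent illustration, assuming attribute values are available as Python lists: `profile_column` summarizes a single column (nulls, distinct values, range, most frequent value), and `orphan_keys` performs one cross-data-set check by listing keys used in one table that have no match in another. The zip codes and tract identifiers are hypothetical sample data.

```python
from collections import Counter


def profile_column(values):
    """Summarize one column: row count, nulls, distinct values, range, most frequent value."""
    present = [v for v in values if v is not None]
    counts = Counter(present)
    return {
        "count": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(counts),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


def orphan_keys(child_keys, parent_keys):
    """Keys used in one data set that have no match in another (cross-data-set integrity check)."""
    return sorted(set(child_keys) - set(parent_keys))


# Hypothetical attribute table: zip codes, including a missing value and a truncated code.
zips = ["46202", "46204", None, "46202", "4620"]
print(profile_column(zips))
# Hypothetical join keys: census tract IDs in an attribute table vs. a tract boundary layer.
print(orphan_keys(["t1", "t2", "t9"], ["t1", "t2", "t3"]))  # ['t9']
```

In this sample the profile's minimum value is the four-character string "4620", which is the kind of anomaly (a likely validity problem) that profiling is meant to surface for follow-up.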

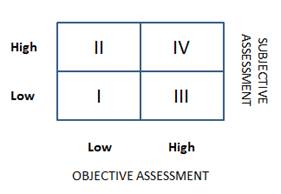

Geospatial data metrics can combine objective and subjective assessments. In addition to quantitative measurement, qualitative efforts also play an important role in the assessment process. Pipino et al. (2002) propose a quadrant scheme that accommodates the analysis of objective and subjective assessments of a specific dimension in order to help qualify data. In this structure, the outcome of an analysis falls into one of four quadrants: Quadrant IV represents a quality data state, while outcomes in Quadrants I, II, or III require further investigation of root causes and corrective action (Figure 3). This joint objective-subjective method has been tested against business data from several companies and may also apply to open geospatial data. At a minimum, the concept can inspire the development of multi-level, multi-task metrics for measuring the quality of open geospatial data.
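The quadrant logic can be expressed as a small decision rule. The sketch below assumes that each dimension has an objective score and a subjective (e.g., questionnaire-based) score, both normalized to [0, 1], and uses a hypothetical cut-off of 0.8. It treats only the high/high cell as an acceptable quality state, matching the role of Quadrant IV described above; it deliberately does not assign Roman numerals to the other three cells, since that numbering depends on how Figure 3 lays out its axes.

```python
def classify(objective: float, subjective: float, threshold: float = 0.8) -> str:
    """Place a dimension's paired scores in a 2x2 objective/subjective grid.

    Both scores are assumed to lie in [0, 1]; the 0.8 cut-off is a hypothetical choice.
    Only the high/high cell counts as an acceptable quality state; the other three
    cells call for a root-cause investigation.
    """
    obj_ok = objective >= threshold
    subj_ok = subjective >= threshold
    if obj_ok and subj_ok:
        return "quality state"
    if obj_ok:
        return "investigate: users dissatisfied despite good objective score"
    if subj_ok:
        return "investigate: objective problems users have not noticed"
    return "investigate: poor on both measures"


# Example: completeness measured at 0.92, but a user survey rates it only 0.55.
print(classify(0.92, 0.55))
```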

Figure 3. A dual objective-subjective measurement model (modified from Pipino, et al. 2002, Figure 3)

Comparison with Some Existing Standards

To assess the unique contributions of the proposed metrics, it is useful to compare them with existing standards. We select two widely implemented standards for the general assessment of geospatial data quality: the US Federal Geographic Data Committee (FGDC) data quality information and the Spatial Data Transfer Standard (SDTS).4 In compliance with the Federal Information Processing Standard, FGDC and SDTS are designed to promote increased access to and sharing of spatial data, reduce information loss in data exchange, eliminate duplicated data acquisition, and improve the quality and integrity of geospatial data.

FGDC focuses on accuracy, consistency, completeness, and metadata correctness (see the list below), while SDTS provides a robust system for transferring position-related data between different technological systems to reduce information loss. SDTS has a set of standards for transferring spatial data, attributes, georeferencing, quality reports, data dictionaries, and necessary metadata elements. In a sense, the metrics proposed in this paper serve as a conceptual framework guiding the measurement of spatial data quality control, accessibility, and usability, whereas FGDC and SDTS serve as application tools for actual measurement and implementation. The metrics should cover as many areas and aspects of data measurement as possible, while implementations need to focus on one or several areas to ensure thoroughness and smooth operation.

FGDC data quality information:

- Attribute_Accuracy
  - Attribute_Accuracy_Report
  - Quantitative_Attribute_Accuracy_Assessment

- Logical_Consistency_Report

- Completeness_Report

- Positional_Accuracy
  - Horizontal_Positional_Accuracy
    - Quantitative_Horizontal_Positional_Accuracy_Assessment
  - Vertical_Positional_Accuracy
    - Quantitative_Vertical_Positional_Accuracy_Assessment

- Lineage
  - Source_Information
    - Source_Citation
    - Source_Time_Period_of_Content
  - Process_Step
    - Process_Contact

- Cloud_Cover
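The quantitative positional accuracy elements in this list are commonly filled using the FGDC National Standard for Spatial Data Accuracy (NSSDA), which reports accuracy at the 95% confidence level from check points: 1.7308 × RMSE_r horizontally (assuming RMSE_x and RMSE_y are roughly equal) and 1.9600 × RMSE_z vertically. The sketch below implements those formulas; the two check points are hypothetical and far fewer than the minimum of 20 that the standard calls for, so they serve only to show the arithmetic.

```python
import math


def rmse(errors):
    """Root-mean-square of a list of coordinate differences."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def nssda_horizontal(measured, reference):
    """NSSDA horizontal accuracy at 95% confidence: 1.7308 * RMSE_r.

    measured/reference are lists of (x, y) for the same check points. The 1.7308
    factor assumes RMSE_x and RMSE_y are approximately equal.
    """
    dx = [m[0] - r[0] for m, r in zip(measured, reference)]
    dy = [m[1] - r[1] for m, r in zip(measured, reference)]
    rmse_r = math.sqrt(rmse(dx) ** 2 + rmse(dy) ** 2)
    return 1.7308 * rmse_r


def nssda_vertical(measured_z, reference_z):
    """NSSDA vertical accuracy at 95% confidence: 1.9600 * RMSE_z."""
    return 1.9600 * rmse([m - r for m, r in zip(measured_z, reference_z)])


# Hypothetical check points in metres (a real test needs at least 20).
measured = [(1003.0, 2004.0), (1500.0, 2500.0)]
reference = [(1000.0, 2000.0), (1500.0, 2500.0)]
print(nssda_horizontal(measured, reference))      # about 6.12
print(nssda_vertical([10.5, 9.5], [10.0, 10.0]))  # about 0.98
```

The resulting figure is what would be reported as, for example, "tested N metres horizontal accuracy at 95% confidence level" in the corresponding quantitative assessment element.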

Conclusion

Because of the uniqueness and complexity of geospatial data, quality control is always a challenge for data providers, managers, analysts, and data service providers. Metrics developed to measure data quality need to reflect the nature of the data and must therefore be diversely structured to handle maps, coordinates, attributes, and other types of geospatial data. A list of dimensions with clear and accurate definitions will provide the necessary standards for measurement. When the practice of open access is also considered, several more layers of complexity are added, and additional tasks arise concerning web communication, data usability, data integrity, and related issues.

Both quantitative metrics based on objective measurement and qualitative metrics based on subjective measurement are essential to the quality control of geospatial data. The optimal solution is to combine both assessments and make any measurement a multi-level effort. A plan for monitoring data quality performance must integrate technology with human experience. Measurement needs to be paired with a process in which data quality standards are established; problems are anticipated, identified, documented, and solved; and quality control procedures are followed and adjusted as necessary. Information professionals need to work closely with all kinds of stakeholders and be fully aware of the importance of geospatial data quality in supporting research and education.

Notes

1 Various data metrics applications are created as commercial products to support data verifications. Examples include Analysis & Data Metrics produced by Compliance Concepts, Inc. http://www.complianceconcepts.com/complianceline/analysis-data-metrics.asp, and DataMetrics by ASI DataMyte: http://www.scientific-computing.com/products/product_details.php?product_id=320.

2 According to OGC, "The Open Geospatial Consortium (OGC®) is a non-profit, international, voluntary consensus standards organization that is leading the development of standards for geospatial and location based services." See http://www.opengeospatial.org.

3 Kerherve, P. (2006). ISO 191xx series of geographic information standards, Presented at the Technical Conference on the WMO Information System, Seoul, Korea, 6-8 November 2006.

4 The US Federal Geographic Data Committee: http://www.fgdc.gov/metadata/csdgm/02.html; The Spatial Data Transfer Standard: http://mcmcweb.er.usgs.gov/sdts.

References

Ballou, D.P. & Pazer, H.L. 1985. Modeling data and process quality in multi-input, multi-output information systems. Management Science 31 (2): 150-162.

Brody, T., Carr, L., Gingras, Y., Hajjem, C., Harnad, S. & Swan, A. 2007. Incentivizing the Open Access Research Web: Publication-Archiving, Data-Archiving and Scientometrics. CTWatch Quarterly 3(3). [Internet]. [Cited 2011 Oct 3]. Available from: http://www.ctwatch.org/quarterly/articles/2007/08/incentivizing-the-open-access-research-web

Brown, C.S., Goodwin, P.C. & Sorger, P.K. 2000. Image metrics in the statistical analysis of DNA microarray data. Proceedings of the National Academy of Sciences of the United States of America 98 (16): 8944-8949.

Domeniconi, C., Gunopulos, D., Ma, S., Yan, B., Al-Razgan, M. & Papadopoulos, D. 2007. Locally adaptive metrics for clustering high dimensional data. Journal of Data Mining and Knowledge Discovery 14: 63-97.

Evans, J.A. & Reimer, J. 2009. Open access and global participation in science. Science 323 (5917): 1025.

Gray, J. 2010. Open geoprocessing standards and open geospatial data. Open Knowledge Foundation Blog [Internet]. [Cited 2011 Oct 3]. Available from: http://blog.okfn.org/2010/06/21/open-geoprocessing-standards-and-open-geospatial-data

Harley, S. 2000. Accountants divided: research selectivity and academic accounting labour in UK universities. Critical Perspectives on Accounting 11: 549-582.

Harnad, S. 2007. The open access citation advantage: quality advantage or quality bias? [Internet]. [Cited 2011 Oct 3]. Available from: http://openaccess.eprints.org/index.php?/archives/191-The-Open-Access-Citation-Advantage-Quality-Advantage-Or-Quality-Bias.html

Huang, K., Lee, Y. & Wang, R. 1999. Quality Information and Knowledge. Upper Saddle River, NJ: Prentice Hall.

Kerrien, S. et al. 2007. IntAct - Open source resources for molecular interaction data. Nucleic Acids Research 35: D561-565. [Internet]. [Cited 2011 Oct 3]. Available from: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1751531

Laudon, K.C. 1986. Data quality and due process in large interorganizational record systems. Communications of the ACM 29 (1): 4-11.

Lindsey, S., Raghavendra, C. & Sivalingam, K.M. 2002. Data gathering algorithms in sensor networks using energy metrics. IEEE Transactions on Parallel and Distributed Systems 13 (9): 924-935.

Loshin, D. 2010. Monitoring Data Quality Performance: Using Data Quality Metrics, Informatica White Paper [Internet]. [Cited 2011 Oct 3]. Available from: http://it.ojp.gov/docdownloader.aspx?ddid=999

McKay, S. 2003. Quantifying quality: Can quantitative data ("metrics") explain the 2001 RAE ratings for social policy and administration? Social Policy & Administration 37 (5): 444-467.

Palmer, J.W. 2002. Web site usability, design, and performance metrics. Information Systems Research 13 (2): 151-167.

Pipino, L.L., Lee, Y.W. & Wang, R.Y. 2002. Data quality assessment. Communications of the ACM 45 (4): 211-218.

Redman, T.C., ed. 1996. Data Quality for the Information Age. Boston, MA: Artech House.

Steiniger, S. & Hunter, A.J.S. n.d. Free and open source GIS software for building a spatial data infrastructure [Internet]. [Cited 2011 Oct 3]. Available from: http://sourceforge.net/projects/jump-pilot/files/w_other_freegis_documents/articles/sstein_hunter_fosgis4sdi_v10_final.pdf/download?use_mirror=voxel

Taylor, J. 1995. A statistical analysis of the 1992 research assessment exercise. Journal of the Royal Statistical Society Series A 158 (2): 241-261.

Wand, Y. & Wang, R.Y. 1996. Anchoring data quality dimensions in ontological foundations. Communications of the ACM 39 (11): 86-95.

Wang, R.Y. 1998. A product perspective on total data quality management. Communications of the ACM 41 (2): 58-65.

Xia, J., Myers, R.L. & Wilhiote, S.K. 2011. Multiple open access availability and citation impact. Journal of Information Science 37 (1): 19-28.
