Developing and Teaching a Two-Credit Data Management Course for Graduate Students in Climate and Space Sciences

Joanna Thielen

Research Data Librarian

Oakland University Library

Rochester, Michigan

jthielen@oakland.edu

Sara M. Samuel

Research Analyst at MForesight

University of Michigan

Ann Arbor, Michigan

henrysm@umich.edu

Jake Carlson

Research Data Services Manager

University of Michigan Library

Ann Arbor, Michigan

jakecar@umich.edu

Mark Moldwin

Professor, Space Sciences and Engineering

Climate and Space Sciences and Engineering Department

University of Michigan

Ann Arbor, Michigan

mmoldwin@umich.edu

Abstract

Engineering researchers face increasing pressure to manage, share, and preserve their data, but these subjects are not typically a part of the curricula of engineering graduate programs. To address this situation, librarians at the University of Michigan, in partnership with the Climate and Space Sciences and Engineering Department, developed a new credit-bearing course to teach graduate students the knowledge and skills they need to respond to these pressures. The course was specifically designed to teach data management through the lens of climate and space sciences by incorporating relevant examples and readings. We describe the development, implementation, and assessment of this course and provide recommendations for others interested in building their own data management courses.

Introduction

Data management is becoming increasingly important for graduate students to master. Data management plans (DMPs) are now required by all the major federal funding agencies, and data are increasingly recognized as an important stand-alone product of scholarship (Borgman 2008; Hanson and van der Hilst 2014; Holdren 2016). Most researchers form their foundational research habits and workflows during graduate school by learning from their peers, advisors, and collaborators. Frugoli, Etgen, and Kuhar (2010) note that "overburdened principal investigators (PIs) may forget about, delay, miss or neglect opportunities to formally address data management issues, leaving students to develop data management techniques by observing others or completely on their own." Additionally, Federer, Lu, and Joubert (2016) found that 77% of biomedical researchers have never had formal training in data management, so the data management practices that advisors and collaborators pass along to graduate students may not be best practices. It is important to the overall academic enterprise that graduate students be taught good data management practices because "graduate students are the ones on the front line of generating, processing, analyzing, and managing data" (Carlson and Stowell-Bracke 2013).

In response to federal funding agency requirements and other shifts in scholarly communication, academic libraries are playing a larger role in helping faculty and students manage, share, and preserve their research data. Some academic libraries across the United States have developed or are developing services to support researchers across the data lifecycle, including consulting on DMPs, assisting in the discovery and citation of data, facilitating the deposit of data into repositories for public sharing, and offering guidance on preserving data beyond the lifespan of the research project (Tenopir, et al. 2015). The University of Michigan Library (U-M Library) is one such library that has been active in developing research data services that are targeted to the needs of faculty and students.

Data information literacy is an area of recognized need and one that librarians are well suited to address (Federer, Lu, and Joubert 2016). Recognizing the need to offer data information literacy training for graduate students at the University of Michigan (U-M), we set out to explore possibilities for developing research data management education within the College of Engineering (CoE). Through discussions with the Associate Chair for Academic Affairs and other faculty members in the Climate and Space Sciences and Engineering (CLaSP) Department, we determined that a two-credit course would be the ideal way to offer this training. The department was in full support of librarians teaching the course, which would present best practices for data management through the lens of climate and space sciences' data culture and practices.

This article describes the development, implementation, and assessment of this new graduate-level data management course. Additionally, we discuss connections between the course and the Accreditation Board for Engineering and Technology (ABET) standards. Finally, we present several lessons learned in the hope of inspiring other librarians to offer data management education at their institutions.

Literature Review

Graduate students are the ideal target for data management courses for several reasons. First, they, rather than their PIs, are frequently the people most actively involved in creating, organizing, and managing data from their research projects on a daily basis. Furthermore, many have had little or no formal training in data management, so they often learn these skills ad hoc and at the point of need (Carlson and Stowell-Bracke 2013). Consequently, graduate students tend to develop widely varying sets of data management skills during their graduate studies (Johnston and Jeffryes 2014). As graduate students will become the next generation of faculty and industry researchers, it is vital to instill in them good data management practices before they graduate. In addition, helping students better understand and apply good data management practices will likely help to improve disciplinary cultures of practice in how data are managed, shared, and curated.

There are several different approaches to teaching data management to graduate students. One method is to integrate it into existing courses. Frugoli, Etgen, and Kuhar (2010) briefly mention a graduate-level genetics and biochemistry course taught at Clemson University, titled "Issues in Research," in which "data management is a small subsection of the semester-long course." While useful as an introduction to the topic, this format does not allow students to delve into data management issues and discussions in any depth.

Where there is a lack of formal training opportunities, data management can be taught in a more informal setting. For example, Carlson and Stowell-Bracke (2013) recommend that research labs simply "devote time to discussion about data issues with graduate students." Whether formal or informal, time is needed for in-depth discussions around data management, especially for graduate students who are still learning their trade.

Formal courses and training sessions on data management have also been offered to graduate students. Whitmire (2015) successfully offered a two-credit data management course to a broad audience of graduate students, and the course assessments showed that while the students appreciated the course, a few recommended offering a more discipline-specific version. Adamick, Reznik-Zellen, and Sheridan (2013) had similar findings after they taught a series of data management workshops for graduate students in all disciplines. While the students found the workshops to be useful overviews of data management, many desired more discipline-specific examples and tools.

Within this context, our course is an example of a formal course teaching data management to graduate students through the lens of a specific discipline: climate and space sciences.

Setting

The U-M Library worked with the Climate and Space Sciences and Engineering Department (CLaSP) to develop and offer this course. In the fall of 2015, CLaSP enrolled over 100 graduate students. This department has a specific course number earmarked for graduate-level experimental courses that do not need prior approval by the department's curriculum committee. These courses are approved by the Associate Chair for Academic Affairs and are designed to encourage faculty to develop courses that address new and emerging topics.

The CLaSP department recognizes the need to provide formal training for graduate students in many of the 'hard' skills important for research success that traditionally have not been formally taught in the classroom (such as oral and written communication). As data take on a larger role in today's science and engineering environment, data science and management skills come to the fore. However, although faculty have years of experience working with data, most have no formal training in the breadth of data science and management skills needed today. Therefore, the department enthusiastically partnered with the U-M Library to develop a graduate-level course specifically addressing data management.

We began the process of learning the CLaSP department's data management instruction needs by visiting several faculty meetings. The result was the creation of a new credit-bearing course on data management to be taught by librarians with sponsorship from the CLaSP department. This was the first time that the U-M Library offered a credit-bearing data management course in any discipline.1 Since the U-M Library is not an academic unit and cannot offer credit-bearing courses, the instructors listed this course through the CLaSP department.

The two-credit data management course was offered as an experimental course in the Winter 2016 semester. The course was titled "Data Management and Stewardship in the Climate and Space Sciences" and was led by a team of three librarian instructors.

Developing the Course and its Content

Data Literacy Competencies and Data Information Literacy Project

The work done by the Data Information Literacy (DIL) Project helped us structure the development of the course.2 This project's goal was to better understand the expertise needed by graduate students to meet federal requirements and disciplinary expectations in managing, sharing, and curating their data. Through studying and interacting with faculty and graduate students in several science and engineering disciplines, the DIL Project identified twelve base data competencies (shown in Table 1) as areas of consideration for developing education programming in this area (Carlson, Fosmire, Miller & Nelson 2011). These competencies provided us with a starting point for the content of the new course.

Table 1. Twelve base data competencies identified by the Data Information Literacy (DIL) Project (Carlson et al. 2011). The nine competencies in bold were identified as the areas of focus for our data management course.

| Data Processing and Analysis | Data Curation and Reuse |
| Data Management and Organization | Data Conversion and Interoperability |
| Data Preservation | Data Visualization and Representation |
| Databases and Data Formats | Discovery and Acquisition |
| Ethics and Attribution | Metadata and Data Description |
| Data Quality and Documentation | Cultures of Practice |

These twelve competencies were deliberately loosely defined by the DIL Project and are intended to serve as starting points in designing educational programming rather than as directives. Instead of a 'one size fits all' approach, the competencies can accommodate the perspectives of different disciplines, the challenges presented to researchers within a discipline, possible methods for addressing these challenges, and the support, resources, and tools available to them.

Based on our knowledge of research methods and data use in the climate and space sciences, we identified nine of the twelve data competencies as being relevant to this community (shown in bold in Table 1) and within our ability to teach. They formed the basis of the data management topics discussed in the course.

In addition to the twelve competencies, the DIL Project introduced a model for developing an educational program to teach data management to students (Wright et al. 2015). The first stage is to conduct an environmental scan of the discipline or sub-disciplines of the students targeted by the program to understand expectations, norms, and available support. An environmental scan helps the instructor frame the course so that it resonates with students. The next stage in the model is to interview students and faculty (and other relevant parties, such as lab managers, if appropriate). Interviews help instructors better understand how, and to what extent, disciplinary principles are applied locally. They also give the instructor an understanding of the priorities of faculty and students in working with data and a better idea of what ought to be covered in the program. Finally, interviews can inform possible assessment metrics to evaluate the impact of the course.

For this course, our environmental scan included reviewing the ABET accreditation standards and attending the American Geophysical Union conference as a means of informing and contextualizing content. We also interviewed faculty and graduate students to better understand and connect to actual needs, concerns, and useful resources for researchers in climate and space sciences.

Environmental Scanning: ABET Accreditation Standards

The ABET accreditation criteria for graduate-level programs are an important consideration when developing a new graduate-level course in engineering (ABET 2015-2016). This course could help meet several of the student outcomes in the Engineering criteria, particularly outcomes (b), (f), (g), (h), and (j):

- (a) an ability to apply knowledge of mathematics, science, and engineering

- (b) an ability to design and conduct experiments, as well as to analyze and interpret data

- (c) an ability to design a system, component, or process to meet desired needs within realistic constraints such as economic, environmental, social, political, ethical, health and safety, manufacturability, and sustainability

- (d) an ability to function on multidisciplinary teams

- (e) an ability to identify, formulate, and solve engineering problems

- (f) an understanding of professional and ethical responsibility

- (g) an ability to communicate effectively

- (h) the broad education necessary to understand the impact of engineering solutions in a global, economic, environmental, and societal context

- (i) a recognition of the need for, and an ability to engage in life-long learning

- (j) a knowledge of contemporary issues

- (k) an ability to use the techniques, skills, and modern engineering tools necessary for engineering practice

First, learning how to find, manage, and share data will assist with students' ability to analyze and interpret data (b). Working with data is an essential part of the graduate program, and this course can give graduate students additional tools to succeed in working with data.

This course could also help students understand professional and ethical responsibility (f). Data are an important scholarly output and resource, and it is essential that graduate students know how to use and interact with data in a professional and ethical way. Several aspects of working with data responsibly could be discussed, including citing data and attaching intellectual property statements when sharing data.

Learning responsible data management will also advance the students' ability to communicate effectively (g). The students could learn how to speak about data through course discussions and a final presentation. Additionally, they could be introduced to the importance of documentation and practice writing documents that allow other researchers to understand and use their data without having to explain it in person. Communicating research findings through data is key to being an effective researcher and this course provides students more tools to talk about their data.

Learning about the data lifecycle and how data can be reused to further the understanding of a topic will help students understand the impact of engineering solutions in a global, economic, environmental, and societal context (h). Sharing data and communicating it effectively can have an impact on government policies and funding opportunities, which influence society nationally and globally.

Finally, the students could be given a thorough introduction to the increasing importance of data management and stewardship for scientific advancement, increasing their knowledge of this contemporary issue (j). Funding agencies are increasingly issuing data requirements, and journals are beginning to require that data be available in repositories before publication. Graduate students will benefit from understanding the current emphasis on, and policies around, data management.

Environmental Scanning: The American Geophysical Union Fall Meeting

To gain a better understanding of how climate and space scientists and engineers talk about their data and what data-related issues they face in their daily work, we attended the American Geophysical Union (AGU) Fall 2015 Meeting. The annual event is heralded as "the largest Earth and space science meeting in the world," with over 24,000 researchers, students, and vendors attending.3 Two sections in particular, Education and Earth and Space Science Informatics, had especially relevant sessions. The following were key takeaways from our conference experience:

- Scientists are actively working to find new ways to organize, collect, and present data. Several presentations demonstrated new tools that help researchers collect data in the field while automatically capturing metadata. Other presentations demonstrated tools to help the public interact with data that have been gathered by government agencies. Additional tools are being built to better publish data and help link data with publications.

- Faculty are exploring the best way to educate students on data-related issues, and are working to create more thoughtful paths to careers like "Data Scientist," either through certificate programs or specialized degree programs.

- We received positive feedback when we informally discussed the class we would be teaching. Individuals often expressed their willingness to help if we needed resources or additional materials for course activities. This is a valuable network to build for accessing case studies and obtaining real documents, such as data policies at national laboratories.

Attending the event immersed us in the culture of climate and space scientists, and gave us additional context within which to teach the course.

Interviews with Faculty and Graduate Students

After identifying the nine data competencies as a starting point, the next step was to interview faculty and graduate students about their research and the current CLaSP graduate curriculum. It was vital to link the course content to the actual needs, concerns, and resources of researchers in climate and space sciences. In total, we conducted 14 in-depth interviews, each typically lasting one hour. Thirteen interviewees were faculty or graduate students: five conduct research in space science and eight conduct research in climate science. The fourteenth interviewee was a member of the IT support staff, who does not conduct research.

Interviewing faculty and students as part of developing a data management course helps librarians better "speak the language" of the discipline and link course content to current research practices (Corti and den Eynden 2015). The interviews gave us a better understanding of the data culture within climate and space sciences, illustrated how active researchers in those fields talk about their data (including common terminology and jargon), and reinforced which aspects of data management are most important for graduate students to learn. When asked about data management in current CLaSP courses, all of the faculty responded that data management topics were not covered in the classes they taught, and all of the graduate students responded that these topics were not covered in the CLaSP classes they had taken. A faculty member stated that "if there's a course exercise that involves the use of data, we go into how do you read data, how do you store data, that kind of thing. But [data management] hasn't been a specific organized part of the course that I taught." However, faculty expressed interest in their graduate students taking a data management course, and graduate students expressed interest in taking one themselves. Another faculty member said "it's good to get [graduate students] thinking about [data management]. It's always at the bottom of our lists because there are so many other things to do. So I think getting the students thinking about [data management] early is a really good thing to do." A graduate student noted that "something like this [course] is valuable now and way into our careers" and "it would be a missed opportunity not to take [this course]." These comments suggest that the students were not receiving in-depth data management training and that both faculty and graduate students are interested in this type of course.

In addition to answering questions about their research data and the data culture within their discipline, each interviewee completed a survey during the interview. The survey explained the nine data competencies and asked interviewees to rank each competency's importance and current level of knowledge on a scale of one to five (1 = low importance or knowledge, 5 = very high importance or knowledge). (The survey instrument is reproduced in Appendix A.) Faculty responded based on the graduate students they work with, and graduate students responded based on their own knowledge. Because the graduate students' results were self-reported and not anonymous, they may not be a completely accurate reflection of the importance or knowledge of data management topics within this department.

Impact on Course Development

The aggregate results of the interviews (including the survey results) were used to shape the course content and materials. All interviewees stressed the vital importance of visualizations (both 2D and 3D) to the disciplines of climate and space science. Based on these responses, we dedicated an entire class session to data visualization. We heard that netCDF is the most common file format used in both disciplines, so we incorporated netCDF into several class sessions and activities. Additionally, climate and space researchers both generate their own research data and consume existing data sets (usually from federal agencies such as NASA). We took this widespread data consumption into account by dedicating a class session to finding data sets and being a good data consumer. Interviews also revealed the importance of data sharing within climate and space science, so an entire class session was dedicated to this topic.

The interviews solidified which data competencies to address during the course and in which order they would be taught. Additionally, weekly course sessions were strengthened by including anecdotes and case studies from material gathered during the interviews and sessions at AGU's Fall Meeting; using real-life examples and true stories helped to emphasize the importance of each data competency.

Learning Objectives

After all of the preparatory activities, the course learning objectives were specified and used to guide subsequent course development, including finalizing the course syllabus. After completing this course, students will:

- Be able to write a detailed and comprehensive Data Management Plan (DMP).

- Be able to apply data management and stewardship concepts to their research.

- Know the community standards and best practices for data management in their specific field.

Marketing the Course

Because this was an experimental course, the marketing materials were specifically designed to give students as much detail as possible about the course so that they could make an informed decision before enrolling. The hope was that this transparency about the intent and purpose of the course would help to boost enrollment. First, we created a Google Document that contained the basic course information (number, title, credits, etc.), a brief course description, learning objectives, the textbook, a tentative weekly plan and a brief description of the two final projects.

Then we sent the contents of the Google Document via e-mail to the official CLaSP department mailing list, and posted print flyers in the Space Research Building, the home of the CLaSP department.

We also marketed the course during the in-depth interviews (described above). After briefly describing the course, faculty members were asked if they would encourage their graduate students to take this course, and graduate students were asked if they would be interested in taking this course. Overall, the response was positive from both the faculty and graduate students and helped build awareness that we would be offering the course during the Winter 2016 semester.

Teaching the Course

Logistics

While most CLaSP courses are three credits, interviewees indicated that a one- or two-credit course on data management would likely be more attractive to students. Due to the number of topics that needed to be covered in the course, a two-credit course, rather than a one-credit course, emerged as the ideal choice.

In total, five students enrolled in the course. Per the department's policies, there was no minimum number of students required. Four of the students were from the CLaSP department: two Master's students and two Ph.D. students. The fifth student was a Master's student from the School of Information with an interest in scientific data management. Although the class was small, its size facilitated active discussion among all students and instructors.

Course Format

Although it is important to teach students the theory and value of data management, we felt that it was critical that the students be able to apply what they learned through practical applications. This was reflected in a course format that combined readings, lectures, discussion, hands-on activities, and application of what they learned to their own data sets.

Students were assigned readings before class. The course text was Data Management for Researchers: Organize, Maintain, and Share your Data for Research Success by Kristin Briney. We selected this book as a recent (2015), practical, and compact introduction to major data management topics. In addition to the textbook, students were assigned one to three other readings each week. The instructors wanted the course to be relevant to the students and their current research projects, so they looked for data management readings related to the climate and space sciences. An example reading is the American Geophysical Union's Data Policy.

To maximize each session's impact and allow real application, each student designated a data set to use for this course at the beginning of the semester. Throughout the semester, they wrote a DMP for this data set. All of the students were actively doing research and were therefore able to supply their own data sets, although we were prepared to help students find a data set if they did not have their own. The benefit of writing a DMP for their own existing data set is that students were familiar with the data and could focus on applying the data management lessons to it, rather than applying these lessons to an unfamiliar data set in which they were not invested. Ideally, a DMP would be written before data collection occurs, but it is still beneficial for students to consider what steps they will need to take to manage, share, and preserve their data set even after they have started the project, and to adjust their practices if necessary. Another reason to have students write a DMP is to provide them with a template for future DMPs. The drawback of having students use their own data was that we did not have a set of ready answers to provide to them and we could not readily compare their work. However, as research projects and data management are often messy and distinctive processes, we believed that our students would learn more from applying the class lessons and assignments to their own data.

Each class session was divided into three equal sections: presentation, discussion, and hands-on activity. The goal of the presentation portion of the class was to put the week's readings into the context of climate and space science. The presentations reviewed each of the week's readings and provided basic information and best practices for the week's topic.

We emphasized discussion among the students throughout the course, because talking through data management issues with peers is a recommended technique for effective student learning on these topics (Gunsalus 2010). While each class session had a dedicated time for discussion, students were often prompted to start discussion during the presentation and hands-on activity portions of the class as well. The goals of discussion were to give students a safe space to share their current data management practices with fellow students, question those practices, and think about ways to improve not only their personal practices but also the practices and data management culture of their discipline. We found that our emphasis on peer learning was not only a vital part of the students' learning process but also an effective way for us to learn from our students. Because the students are so immersed in the climate and space disciplines, they were able to educate us on many nuanced aspects of research in these disciplines, such as the process of learning to write software code. Most importantly, because data management topics and best practices are still developing, discussion was meant to emphasize that students have an important voice in these ongoing conversations.

The final portion of each class session was usually a hands-on activity, intended to give students time during class to put the presentation information and discussion thoughts into action. Most activities involved exploring online resources, so students were asked to bring their laptops to class.

To illustrate an example session, during the week on Data Management Planning, the presentation covered the historical background and rationale for DMPs, current federal agency requirements, and the benefits and challenges of writing a DMP. During the discussion portion, students were asked to share their thoughts on DMPs in general (Do you agree with the rationale of having DMPs?) and their thoughts on DMPs for their chosen data set (What do you need to know to generate an effective DMP for your data set?). For the hands-on activity, students started a preliminary draft of a DMP for their data set that covered the five basic components required by the National Science Foundation and then completed the draft as their homework for that week. This hands-on activity allowed students to get feedback on their drafts from the instructors and peers before leaving class that day.

Weekly Topics and Guest Speakers

- Week 1: Course Introduction; What are Data?

- Week 2: Data Discovery and Being a Good Data Consumer

- Week 3: Data Management Planning

- Week 4: Data Storage Considerations and Costs (Guest speaker: IT Manager, CLaSP department)

- Week 5: Data Documentation and Organization

- Week 6: Metadata

- Week 7: Visualization (Guest speaker: Advanced Visualization Specialist, UM3D Lab)

- Week 8: Data Lifecycle and Workflows

- Week 9: Data Sharing

- Week 10: Data Curation and Preservation

- Week 11: Intellectual Property, Licensing and Attribution (Guest speaker: Copyright Specialists, U-M Library)

- Week 12: Data Ethics (Guest speaker: Director of Compliance and Policy, CoE)

- Week 13: Student presentations; Training on Deep Blue Data4 (Guest speaker: Data Curation Librarian, U-M Library)

- Week 14: Student presentations; Course Review

From the initial planning of this course, we recognized that we were not experts in all areas of data management and would need expert guest speakers for certain class sessions. Each guest speaker was given an overview of the course and asked to prepare a 40-45 minute presentation, with the remaining class time reserved for discussion and questions. A total of five guest speakers were recruited from both the U-M Library and the CoE. In general, the guest speakers had considerable leeway in how they presented their data management topics.

Grading

Students were graded on class participation, weekly reflections, weekly assignments, and two final projects: an in-class presentation and a comprehensive DMP. As discussion was an integral part of this course, students were expected to attend and fully participate in each class session. This expectation was explicitly stated in the syllabus and accounted for ten percent of a student's grade.

Reflections (20% of grade)

At the end of each class session, students had five minutes to write a personal reflection on that day's class. We set aside this time to give students a chance to consider the material and its impact on them personally. Williams (2002) noted that reflection is not often built into undergraduate engineering curricula, and we suspect the same is true of graduate engineering education. Because data management is a 'new' topic, we felt it was important to build reflective time into this course. Written reflections also gave students another way to express their thoughts on the week's topic if they were not comfortable speaking in front of the class.

We asked students to answer these three questions each week:

- What was the most important thing you learned today?

- What is one or more question(s) you still have about today's topic?

- How effective was today's session?

An additional question relating to the day's data management topic was usually added to encourage further critical thinking about the topic. In general, these reflections were one to two paragraphs long. They were posted in a discussion thread on the course's Canvas site (U-M's course management system), where instructors could read them and respond to questions or provide feedback as necessary. The reflections also gave us ongoing feedback from students about the course content. Because this was a pilot course, we made every effort to adjust it as necessary to meet the needs of the students. One limitation of this assessment method is that the reflections were not anonymous and were visible to the entire class. Because students may not have felt comfortable expressing their true thoughts or questions in an open forum, the instructors always gave them the option to e-mail their reflections privately.

Homework (20% of grade)

Students completed weekly homework assignments and turned them in via the Canvas course management system. Most of the weekly assignments asked the students to write a section of a DMP on that week's data management topic. Students were given several questions as prompts to assist with writing each section of their DMP. They were asked to answer all of the prompts and could include additional relevant information as needed.

These homework assignments were designed to give students practice articulating data management topics in writing, in this case in relation to their actual data sets. Grading was on a three-point scale, with points deducted if prompts were not answered. Instructors provided one short statement noting the strongest parts of the DMP section and one short statement noting the parts that needed improvement. The grade and feedback were returned before the next assignment was due.

Final projects (Presentation 20% of grade, DMP 30% of grade)

Students were asked to complete two final projects: 1) give an in-class presentation and 2) write a comprehensive DMP.

Students gave a 15-minute presentation on a data topic of their choice, followed by five minutes for questions. The instructors gave students several example topics but the students had free rein to choose a topic that interested them, as long as it related to the course and had not already been presented. An example of a presentation topic was comparing and contrasting two data repositories.

We chose a presentation as a final project for several reasons. First, it gave the students another opportunity to learn from their peers. Second, the presentations gave the instructors a glimpse of the topics that interested the students most. Finally, on a more practical level, the presentations gave students the opportunity to practice oral communication, a critical 'hard' skill. The presentations were evaluated on topic (focused, relevant to the course, introduced new content, demonstrated knowledge, and answered questions well) and quality (clear ideas, interesting and engaging, effective visuals, and clarity of speech).

The second final project was to combine their weekly homework assignments (DMP sections) into a single cohesive and comprehensive DMP. Instructors provided timely feedback on each homework assignment. The students were expected to incorporate this feedback into their final DMPs. The instructors graded the DMPs based on thoroughness, justification, organization, clarity, and feasibility.

We asked students to create a comprehensive DMP as their final project because it would provide them with a tangible and reusable product of the course. It also gave the instructors a way to assess whether students had met the learning objective of being able to write a detailed and comprehensive DMP. Most graduates of the CLaSP department become faculty members or researchers at federal agency labs, positions in which they will need to write required DMPs (Holdren 2016). They can use the DMP from this course as a starting point for DMPs for other data sets, in addition to applying it to their current research. One limitation of having students write a DMP for an existing data set is that the assignment does not mirror actual research workflows, since DMPs are usually written before data are collected. However, we believe that writing a DMP for a familiar data set will make it easier for students to write one for a future, unknown data set.
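Taken together, the weights stated in this section account for the full course grade. Written as a formula, the breakdown looks like the sketch below; the symbols are labels introduced here for illustration only, and each component score is assumed to be on a common 0-100 scale:

```latex
% Hypothetical labels: P = participation, R = reflections, H = homework,
% S = final presentation, D = final DMP (each assumed to be scored 0-100)
G = 0.10\,P + 0.20\,R + 0.20\,H + 0.20\,S + 0.30\,D,
\qquad 0.10 + 0.20 + 0.20 + 0.20 + 0.30 = 1.00
```

This only shows how the stated percentages combine; it is not a description of the instructors' actual gradebook calculation.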

Course Assessment

The instructors received permission from all five students to anonymously share their survey and reflection comments in this research article. In the request, we made clear that their decision would not affect their grade, and the results of the post-course evaluations were not viewed until after grades had been submitted.

Quantitative Assessments

Pre- & Post-Course Survey

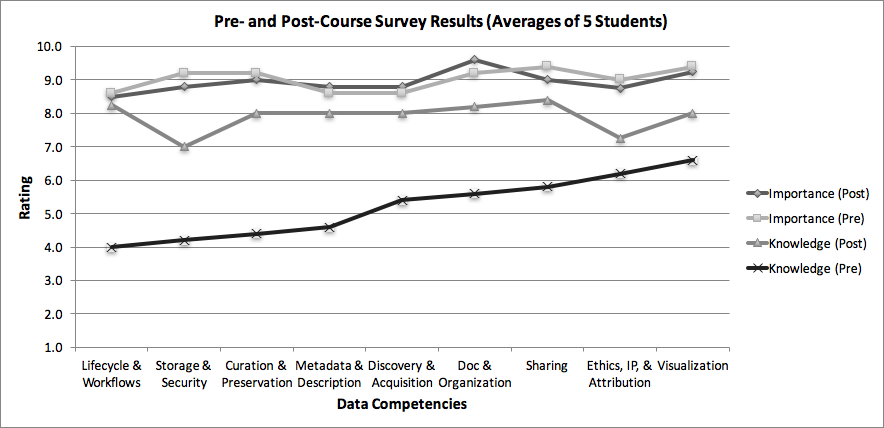

While the CoE evaluates its courses each semester, we needed more tailored and comprehensive feedback from students to help inform further initiatives at the U-M Library related to teaching data management. We asked students to complete both a pre- and a post-course survey (both very similar to the survey that interviewees completed during our Environmental Scan). Both surveys are reproduced in Appendices B and C. As shown in Figure 1, students' rankings of the importance of the nine competencies varied little between the pre- and post-course surveys, but students reported being more knowledgeable in all nine competencies after taking the course.

Figure 1. Comparison of pre- and post-course survey results (N = 5 students).

As with the interview surveys, these two surveys were self-reported and not anonymous. Additionally, completing both the pre- and post-course survey was part of each student's participation grade for the course. The instructors were not looking for specific answers, but rather that the students completed the surveys (and the instructions for both surveys stated this). Their specific answers had no effect on their grade, and we emphasized this to the students to encourage them to be as honest as possible when answering the survey questions.

Qualitative Assessments

Below are examples of student weekly reflections that illustrate their perception of the value of the session:

"Today's session was very efficient in helping me realize the bigger picture where Responsible Conduct in Research Seminars, Policy and Data Management all play important roles. These guidelines are not restricting the research we are doing, it is not a burden as proposal-writers always complain about. These guidelines help us get the most out of our research by regulating it and providing a path that is free of common repetitive mistakes." (Class session on data ethics)

"I think this session was quite effective in conveying the important aspects of data discovery and data consumerism. Once again, I really enjoyed hearing personal experiences and direct examples from my fellow students." (Class session on data documentation and organization)

In addition to rating their knowledge of the nine data competencies and the importance of each, students were asked several summative questions in the post-course survey. Their responses are summarized below (Tables 2-5).

Table 2. Summary of student responses on course expectations.

| Is this course what you expected?* | Why or why not? |
|---|---|
| Yes | |
| No | It was so much more than I expected. |
| No | I honestly didn't go into this course with any expectations, and I did that so that I would have an open mind. |
| Yes | This class is so useful for my future research and publication. I just started using many data in the research and I have not learned anything about data management before, so from this class, I learned a lot of knowledge about like data documentation and metadata, data preservation, data sharing, and especially data licenses and policies. |
| Yes, No | Expected the general content focus, but did not really know what to expect other than that |

*Multiple answers were possible.

Overall, the students reacted positively to this course (Table 2). Because this was a new course, we anticipated that the students would have mixed expectations, and we are encouraged by their honest feedback.

Table 3. Student responses on the most useful class session.

| What was the most useful session for you? | Please explain your answer. |
|---|---|
| Data Sharing | |
| Metadata | I finally realized why I hated working with other people's code or data and how can I make it less arduous for people that will work with me. |
| Data Documentation and Organization | I think documentation is very important, and I hadn't thought a lot about it before. |
| Data Ethics | I think we may make some mistakes when publication or acquisition from the website and other people. So the data policies are so important to learn thoroughly to help us avoid this. |
| Data Lifecycles and Workflows | |

Interestingly, each student chose a different class session as the most useful (Table 3). This result suggests that data management is very personal and that each student brings his or her own skills, knowledge, and experiences to the class. This wide range of experience and familiarity with the subject matter produced interesting discussions in which students shared their knowledge and experiences. For the instructors, however, this diversity was sometimes a challenge because it was difficult to know what level to teach at. As a result, we generally taught at an introductory level, which frustrated some students on certain topics, as reflected in some of the post-course survey remarks.

Table 4. Student responses on the least useful class session.

| What was the least useful class session for you? | Please explain your answer. |
|---|---|
| Data Discovery and Being a Good Data Consumer | |
| What are data? | It was the introduction course and we got into details later on. |
| Visualization | I felt that I didn't learn anything new. |
| Visualization | This class is so interesting, but I think we all know many of visualization for making many presentations and learning from others' presentations. |
| Intellectual Property, Licensing and Attribution | Only because the content covered what I had already learned in the IP class previously, and did not cover enough data specific content. |

Although an entire class session was devoted to visualization in response to the interviews, two students stated that it was the least useful topic for them. Based on comments in the survey and during the session itself, students appear to receive a great deal of training on visualization during their CLaSP graduate studies. In future iterations, this session could be shortened or refocused on specific aspects of visualization not covered elsewhere in their training.

Being able to write a detailed and comprehensive DMP was one of the three course objectives. To assess whether this learning objective had been met, we examined the students' final DMPs and their responses to one question on the post-course survey. The final DMP was not tied to a specific funding agency's requirements. Instead, it was meant to serve as a personal data management plan, more comprehensive and detailed than a plan submitted as part of a grant application. We did not impose a page limit, and the final products ranged from three to 26 pages. The instructors graded the DMPs on thoroughness, justification, organization, clarity, and feasibility, and the quality of the final DMPs was very high. On the post-course survey question about comfort with writing a DMP, rated on a scale of 1-10 (1 = very uncomfortable, 10 = very comfortable), students reported an average of 7.8 (see Table 5).

Table 5. Student responses to their comfort in writing a DMP.

| On a scale of 1-10, how comfortable are you writing a DMP? | Please explain your answer. |
|---|---|
| 9 | |
| 9 | I feel comfortable writing a DMP but now I learnt how to not make it sloppy. I feel a bit overwhelmed with the burden. |
| 5 | I feel like I could write one, but it seems like the expectations are different for this course than they are in real life situations. Concise data management plans are more typical, but it didn't seem like the instructors wanted concise assignments. |
| 8 | I learned how to write a detailed DMP from this course, but I am still confused how to organize it. |
| 8 | |
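The average comfort score of 7.8 follows directly from the five ratings in Table 5; the worked arithmetic below simply recomputes it:

```latex
% Mean of the five self-reported comfort ratings in Table 5
\bar{c} = \frac{9 + 9 + 5 + 8 + 8}{5} = \frac{39}{5} = 7.8
```

With only five respondents, the single rating of 5 pulls the mean below the median rating of 8.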

Based on these student comments, we would revise the Data Management Planning session to give students more concrete information about writing a DMP and to be more explicit about our intent. In the future, we may also consider imposing a two-page limit on this project so that it more accurately reflects federal funding agency requirements.

Additional student comments in the post-course survey noted that the readings could be improved. The textbook offered a general introduction to data management but was not specific to the climate and space sciences. Because data management is still an emerging topic, we found it challenging to locate readings written from a climate or space scientist's perspective that would help students further grasp the content. In future iterations, some of the more general readings could be revised or removed. As data management courses and case studies in engineering become more common, more readings should become available.

Overall, the post-course survey results show that the students liked the course and would recommend it to other graduate students. One student noted "this course is so useful for graduate student, especially PhDs."

Discussion

In summary, we feel that we successfully created a safe space for students to learn the basics of data management, which are not often explicitly taught but are necessary to succeed in graduate school.

Overall, the instructors believe that all of the students met the three course learning objectives.

- Be able to write a detailed and comprehensive Data Management Plan (DMP): As previously discussed, the students did demonstrate their ability to write a satisfactory DMP.

- Be able to apply data management and stewardship concepts to their research: We were unable to directly assess whether the students have applied or will apply these concepts to their research. On the post-course survey, most students indicated that they will apply several concepts to their research.

- Know the community standards and best practices for data management in their specific field: Community standards and best practices were presented and discussed in almost every class session. The instructors assessed the students' knowledge of community standards and best practices by grading their homework assignments and by comparing the pre- and post-course survey results. These results do not definitively show that students met this learning objective, but they do give a positive indication.

Using the credit-bearing course model for data management education has several distinct advantages. First, a semester-long course allows for a comprehensive overview of data management topics. Shorter formats, such as workshops or seminars, cannot cover the same breadth of topics and do not necessarily give students the opportunity to apply or practice what they have learned. Over the progression of the course, students see how these topics are connected. A credit-bearing course also facilitates in-depth discussion and hands-on activities, which allow for peer learning. This model can be scaled up to a larger class by having students work and discuss in smaller groups. Finally, because students are being graded, they are likely to be more engaged and to apply what they learned.

This model of data management education does have some limitations. First, we estimate that no more than half of the course materials could be easily transferred to another discipline, even within engineering. The course was purposefully tailored to address cultures, practices, and resources within the climate and space sciences.

Due to the nature of data management, there was overlap and repetition of some concepts (especially towards the end of the course). For example, a discussion about data curation and preservation (week 10) must include data storage (week 4). Our students commented negatively about the amount of repetition and we will need to be more cognizant of this for future offerings of this course.

The students enrolled in the course were at very different points in their graduate studies: some were Master's students and others were Ph.D. students. Also, the Master's student from the School of Information did not have the same disciplinary knowledge as the other students. Because they were at different stages, the students also brought very different data sets. Ideally, this type of course would be integrated into the graduate curriculum so that all graduate students took it at a similar point in their studies.

Lastly, having only five students made some discussions a challenge; a larger group would have made discussion easier. We feel that a class size of 10 to 15 students would be optimal for this type of course.

The CLaSP department was happy with the student evaluations and would like to have the course taught again. For future iterations of the course, a different instruction model could be adopted in which a CLaSP professor co-teaches the course with an engineering librarian or data services librarian. If that model is not possible, a professor could be invited to give a guest lecture on their own data management practices so that students can see how data management is actively being practiced in their own department. Since much of the material covered in the course is theoretical, it would be powerful for students to hear how faculty put these theories into practice.

Additionally, we recognize that this course required a significant time investment that may not be feasible at other institutions. Our library administration strongly supported the course, recognizing that results from a pilot course would be necessary to convince other departments to integrate this type of training into their graduate curricula. To give us time to develop, design, and implement the course, some of our other duties were reduced, which may not be an option at other institutions. However, many librarians have shared their teaching materials for other data management courses and workshops online, and other librarians could readily reuse these materials if they cannot devote time to creating their own.

Although our course directly reached only five students, we hope that they will share their training with other students, faculty, and collaborators. As was noted during our in-depth interviews, many researchers develop their data management practices and habits by learning from peers.

Lessons Learned

After assessing and reflecting on this course, we offer the following lessons learned for creating data management education in engineering:

- Assembling a diverse instruction team brings unique strengths and experiences. Each instructor brought different experiences with engineering, data management, and STEM graduate school.

- If you are not already a member of the faculty, find a faculty champion within the target department. They can provide connections and legitimacy for your course.

- Consider multiple ways of providing data education. If a new course is not possible, consider working with faculty to add explicit data management concepts and skills into existing courses or give a guest lecture on data management. Creating a workshop or seminar series is another option to help faculty and graduate students learn about data management.

- Due to the diverse nature of data management, the instructors cannot be experts in all of the concepts. Recruit knowledgeable guest speakers or lesson consultants in the areas you are least comfortable with. Most colleagues are happy to lend their expertise.

- Connecting data management education to accreditation standards could be a useful way to 'sell' this type of education to a specific department and help to ensure that relevant critical thinking skills and learning objectives are integrated into course lectures and activities.

Conclusion

As expectations for researchers to do more with their data increase, students need to develop the knowledge and skills to manage their data successfully. This course was an early exploration of how data management topics could be taught to graduate students through the lens of a specific discipline. Student responses indicate that the course was largely successful as a pilot, though they also identified some areas for improvement. Both the CLaSP department and the U-M Library believe that their collaboration on this course was a fruitful partnership that should continue. The CLaSP department has invited the Library to offer a credit-bearing course again in 2017. CLaSP is also interested in working with the Library on other models and venues for teaching data management topics to graduate students, such as online modules that could be inserted into existing courses.

Acknowledgements

The authors did not receive external funding for this course, but the instructors were paid a stipend for teaching the course. The authors would like to acknowledge the following colleagues for their insightful and detailed guest lectures: Faye Ogasawara, Ted Hall, Melissa Levine, Justin Bonfiglio, Elizabeth Wagner, and Amy Neeser. Additionally, these colleagues provided insights into data management and were consulted during course development: Matt Carruthers, Lance Stuchell, Eric Maslowski, Jennifer Green, and Karen Reiman-Sendi. Within the Climate and Space Sciences and Engineering Department, the authors thank Justin Kasper and Mike Liemohn for being the faculty supporters of the course as well as the additional faculty members and graduate students that were interviewed in preparation for this course. Finally, the authors thank the students who were brave enough to take this experimental course.

Notes

1 Other credit-bearing courses taught by U-M librarians in partnership with academic departments are information literacy courses for undergraduates, library science classes, or in-depth graduate-level research classes in the humanities.

2 More information about the DIL Project and its supporting materials can be found at the DIL Project web site (http://www.datainfolit.org/) and the DIL Case Study Directory (http://docs.lib.purdue.edu/dilcs/).

3 AGU Fall Meeting. http://fallmeeting.agu.org/2015/

4 During Week 13 of the course, students were trained on how to use Deep Blue Data, the U-M Library's data repository that launched in February 2016 (https://deepblue.lib.umich.edu/data).

References

ABET. Criteria for Accrediting Engineering Programs, 2016-2017. Criterion 3: Student Outcomes. http://www.abet.org/accreditation/accreditation-criteria/criteria-for-accrediting-engineering-programs-2016-2017/#students

Adamick, Jessica, Reznik-Zellen, Rebecca C. & Sheridan, Matt. 2013. Data management training for graduate students at a large research university. Journal of eScience Librarianship 1 (3). doi:10.7191/jeslib.2012.1022

Borgman, Christine L. 2008. Data, disciplines, and scholarly publishing. Learned Publishing 21 (1): 29-38. doi:10.1087/095315108X254476

Carlson, Jake, Fosmire, Michael, Miller, C.C. & Nelson, Megan Sapp. 2011. Determining data information literacy needs: A study of students and research faculty. portal: Libraries and the Academy 11 (2): 629-657. doi:10.1353/pla.2011.0022

Carlson, Jake & Stowell-Bracke, Marianne. 2013. Data management and sharing from the perspective of graduate students: An examination of the culture and practice at the water quality field station. portal: Libraries and the Academy 13 (4): 343-61. doi:10.1353/pla.2013.0034

Corti, Louise & Van den Eynden, Veerle. 2015. Learning to manage and share data: Jump-starting the research methods curriculum. International Journal of Social Research Methodology 18 (5, SI): 545-59. doi:10.1080/13645579.2015.1062627

Federer, Lisa M., Lu, Ya-Ling & Joubert, Douglas J. 2016. Data literacy training needs of biomedical researchers. Journal of the Medical Library Association 104 (1): 52-58. doi:10.3163/1536-5050.104.1.008

Frugoli, Julia, Etgen, Anne M. & Kuhar, Michael. 2010. Developing and communicating responsible data management policies to trainees and colleagues. Science and Engineering Ethics 16 (4, SI): 753-62. doi:10.1007/s11948-010-9219-1

Gunsalus, C.K. 2010. Best practices in communicating best practices: Commentary on: 'Developing and communicating responsible data management policies to trainees and colleagues.' Science and Engineering Ethics 16 (4): 763-67. doi:10.1007/s11948-010-9227-1

Hanson, Brooks & van der Hilst, Robert. 2014. AGU's data policy: History and context. Eos Transactions 95 (37): 337. doi:10.1002/2014EO370008

Holdren, John. 2016. Letter to the Senate and House Appropriations Committees. Office of Science and Technology Policy. http://web.archive.org/web/20161021093653/https://www.whitehouse.gov/sites/default/files/microsites/ostp/public_access_report_to_congress_apr2016_final.pdf

Johnston, Lisa & Jeffryes, Jon. 2014. Data management skills needed by structural engineering students: Case study at the University of Minnesota. Journal of Professional Issues in Engineering Education and Practice 140 (2). doi:10.1061/(ASCE)EI.1943-5541.0000154

Tenopir, Carol, Hughes, Dane, Allard, Suzie, Frame, Mike, Birch, Ben, Baird, Lynn, Sandusky, Robert, Langseth, Madison & Lundeen, Andrew. 2015. Research data services in academic libraries: Data intensive roles for the future? Journal of eScience Librarianship 4 (2): e1085. doi:10.7191/jeslib.2015.1085

Whitmire, Amanda L. 2015. Implementing a graduate-level research data management course: Approach, outcomes, and lessons learned. Journal of Librarianship and Scholarly Communication 3 (2): eP1246. doi:10.7710/2162-3309.1246

Williams, Julia M. 2002. The engineering portfolio: Communication, reflection, and student learning outcomes assessment. International Journal of Engineering Education 18 (2): 199-207. http://www.ijee.ie/previous.html

Wright, Sarah J., Carlson, Jake, Jeffryes, Jon, Andrews, Camille, Bracke, Marianne, Fosmire, Michael, Johnston, Lisa R., Nelson, Megan Sapp, Walton, Dean & Westra, Brian. 2015. Developing data information literacy programs: A guide for academic librarians. In Carlson, J. & Johnston, L.R. (eds.), Data Information Literacy: Librarians, Data and the Education of a New Generation of Researchers. West Lafayette (IN): Purdue University Press. p. 206-230.

Appendix A. Interview Survey Instrument

Interview Survey Instrument (PDF)

Appendix B. Pre-Course Survey

Pre-Course Survey (PDF)

Appendix C. Post-Course Survey

Post-Course Survey (PDF)


This work is licensed under a Creative Commons Attribution 4.0 International License.