Electronic Resources Reviews

Dimensions

Brian C. Gray

Team Leader Research Services & Research Services Librarian for Engineering

Kelvin Smith Library

Case Western Reserve University (CWRU)

Cleveland, Ohio

bcg8@case.edu

Introduction

On January 16, 2018, Digital Science launched a new product called Dimensions. Dimensions is designed as a single platform that supports research from discovery through publication, and on to the application of that research. It tries to bring the full scope of research under one umbrella of tools by combining data from many sources, including articles, funded grants, clinical trials, and patents. The free citation index is comparable to Web of Science (Clarivate Analytics), Scopus (Elsevier), or Google Scholar. The subscription portion aims to add functionality similar to that of InCites (Clarivate Analytics) or SciVal (Elsevier), and potentially more.

Content

Dimensions currently includes:

- 90+ million publication records,

- almost 3.7 million funded grants from 250 global funders (representing $1.3 trillion),

- 380,000+ clinical trials,

- almost 35 million patents,

- approximately 9 million records with Altmetric data,

- just over 128 million documents (with over 50 million available by full text searching), and

- approximately 4 billion connections between items.

Within Dimensions, language processing and machine learning allow for classification by research topic. The major benefit is that grants, clinical trials, and patents are grouped with related publications. To accomplish this style of organization, Dimensions leverages classification systems with broad coverage and available training materials. The classifications are based on the Fields of Research (FOR) from the Australian and New Zealand Standard Research Classification (ANZSRC), the Research, Condition, and Disease Categorization (RCDC) from the U.S. National Institutes of Health (NIH), and the Health Research Classification System (HRCS) from UK biomedical funders. Although the terminology looks similar to that used in other databases and familiar to U.S.-based researchers, the classifications place some types of research in unfamiliar categories. Several items discovered in the results had surprising subject assignments. Fields of Research are browsable. Institution name disambiguation is managed by Digital Science's GRID database, which contains over 70,000 institutions. GRID is also used by ORCID. Person disambiguation focuses on precision rather than recall, and Digital Science announced that users should expect improvements in this area over time.

Citation data is gathered from several sources. In addition to gathering data directly from 100+ publishers, other sources such as CrossRef, PubMed Central, and Open Citation Data are integrated. Other sources are expected in the future, such as arXiv or ChemRxiv.

Searching Capabilities

This review focuses specifically on the free publication citation data that researchers can access now at https://app.dimensions.ai. Other free tools, not explored in this review, include a metrics API and the "badge builder." The paid offerings were also not explored. All of the tools are in "beta," so changes can be expected over time.

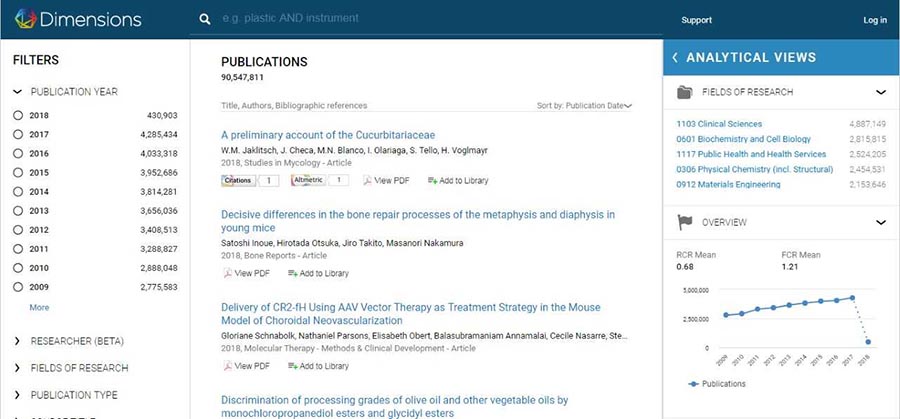

The initial search screen resembles many of the traditional database platforms with a single search screen, but it comes preloaded with all results down the central column. Having all results displayed makes it easy to browse and see the true scope of the Dimensions content. Overall, the display is clean, modern, and easy to view. It looks similar to Scopus in appearance. The brief display of references provides basic citation data, citation counts, Altmetric ranks, full text linking through ReadCube (plus, ability to save to a personal library), and links to open access. The left column provides many traditional filters, such as publication year, researcher (author), fields of research, publication type, source title, journal list (such as PubMed or DOAJ), and if something is considered open access. The right column provides a brief view of what Dimensions calls "Analytical Views" with visual displays of the top fields of research, researchers, publication counts, and source titles. Sorting of the results list is done by relevance, date of publication, Relative Citation Ratio (RCR), citations, and Altmetric score. RCR as defined by Dimensions is: "a citation-based measure of scientific influence of a publication. It is calculated as the citations of a paper, normalized to the citations received by NIH-funded publications in the same area of research and year." A feature not yet working, at least in the free version, appears to be a future account management tool as represented by a "log in" link. No alerting features or type-ahead search suggestions appear to exist at time.

Figure 1 Dimensions Opening Screen
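To make the quoted RCR definition concrete, here is a minimal sketch that takes the wording at face value: a paper's citations divided by a benchmark citation figure for NIH-funded publications in the same field and year. The function name and the sample numbers are hypothetical, and the calculation Dimensions actually performs may be considerably more involved than this simple ratio.

```python
def simple_citation_ratio(paper_citations: float, benchmark_citations: float) -> float:
    """Illustrative ratio only: paper citations over a field-and-year benchmark.

    This follows the wording of the quoted definition; it is an assumption,
    not the method Dimensions actually uses to compute RCR.
    """
    if benchmark_citations <= 0:
        raise ValueError("benchmark_citations must be positive")
    return paper_citations / benchmark_citations


# Hypothetical numbers: a paper with 30 citations in a field and year where
# the benchmark for NIH-funded publications is 12 citations.
example = simple_citation_ratio(30, 12)
print(example)
```

Under this simplified reading, a value above 1 would indicate a paper cited more often than the benchmark for its field and year, and a value below 1 would indicate the opposite.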

Currently missing is a formal advanced search screen. All searches are completed from a single search box that can be limited to title and abstract versus full data. An "abstract search" allows a user to paste an abstract. On several attempts testing the abstract search, the correct article was listed first and the following articles were in some way related. Boolean operators can be used by typing AND, OR, and NOT in all capital letters. Phrases can be searched by using quotation marks, and more complex strategies can be built with parentheses. American and English/British spellings are included.
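Because there is no advanced search form, a complex strategy has to be typed out by hand. The following sketch shows one way to assemble such a string using the conventions described above (capitalized operators, quoted phrases, parentheses). The helper names `phrase` and `combine` and the sample topic are hypothetical, and the sketch only builds text to paste into the search box; it does not call any Dimensions service.

```python
def phrase(text: str) -> str:
    """Wrap a multi-word term in double quotes so it is searched as a phrase."""
    return f'"{text}"'


def combine(operator: str, *terms: str) -> str:
    """Join terms with an upper-case Boolean operator and parenthesize the group."""
    op = operator.upper()
    if op not in {"AND", "OR", "NOT"}:
        raise ValueError(f"unsupported operator: {operator}")
    return "(" + f" {op} ".join(terms) + ")"


# Hypothetical strategy: either phrase, combined with a keyword, minus a term.
topic = combine("or", phrase("climate change"), phrase("global warming"))
query = combine("and", topic, "adaptation") + " NOT policy"
print(query)
```

Lower-case operators are converted to capitals here because, as noted above, the operators are only recognized when typed in all capital letters.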

Searching can be awkward, as it may require a user to think of new searching techniques or take extra steps not needed in other databases. For example, searching for an author's name retrieves articles by that person and articles that cite that person. To limit to the articles authored by that person, a user needs to use the filter for "researcher" after getting the initial results list. Surprisingly, on the next results screen, a simple author profile appears with institution, city, and citation count.

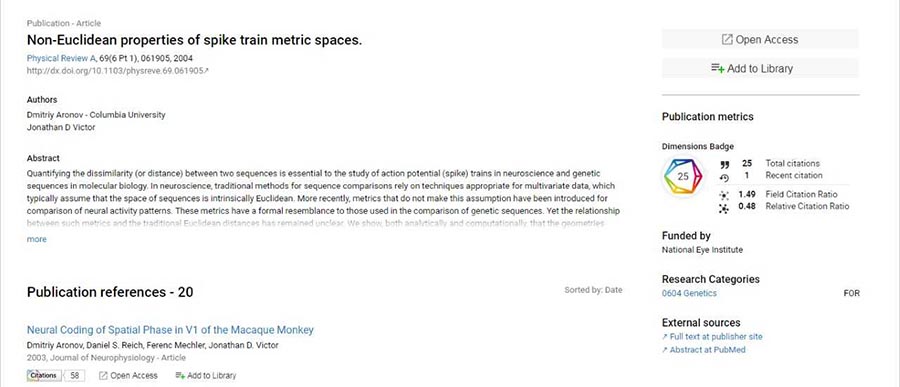

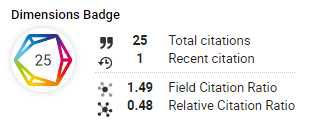

Clicking through to a publication record shows a traditional output. The record includes content title, publication data, DOI, authors (including organizations), and abstract. It also provides a hyperlinked list of references, list of citations, list of patent citations, and linked clinical trials. A sidebar includes a "Dimensions Badge", Altmetric visualization and data, clickable research categories, funding agencies, and links to external sources (full text at publisher site, open access, and/or PubMed abstract). The Dimensions Badge documents total citations, recent citations, field citation ratio, and relative citation ratio. Missing is any formal mention of a permalink, but the URLs for records tried appear to be permanent. Other filters missing include language of publication and country of origin. From the publication record, there is no ability to click on an author's name to find more by the same author.

Figure 2 Dimensions Publication Results Page

Figure 3 Dimensions Badge

Outputs Compared to other Services

Looking more specifically at the content, the publication types discovered included articles, chapters, proceedings, monographs, and preprints. Records are currently in various stages of completeness as abstracts and other data are missing in some records. Some records are non-English, with no translation capabilities embedded in the platform. The earliest records are 256 items from 1665, with many from the Philosophical Transactions of the Royal Society. For approximately the last decade, about 2.5 to 4 million records exist for each year.

Sample searches were conducted to show the variation in results and the amount of content in Dimensions, Scopus, and Web of Science.

Sample Searches with Resulting Publication Counts (articles only in parentheses)

| Search Strategy | Dimensions | Scopus | Web of Science (Core Collection) |
|---|---|---|---|
| climate change | 3,101,848 (2,354,906) | 277,040 (206,069) | 250,404 (207,079) |
| "climate change" | 1,115,549 (870,571) | 211,357 (151,304) | 179,588 (146,143) |
| subject = chemical engineering | 283,670 (251,327) | 2,671,696 (2,093,688) | 926,319 (658,970)* |
| Author: David Schiraldi (colleague at CWRU in macromolecular science) | 135 (129) | 227 (174) | 306 (169) |
| source = Nature | 409,325 | 317,597 | 247,463 |
| publication date = 2017 | 4,285,434 (3,530,972) | 2,834,329 (1,858,035) | 2,570,064 (1,792,567) |

*Web of Science Category used
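The raw counts are easier to compare as ratios. The sketch below takes three rows from the sample searches (the figures are copied from the table; the variable and function names are hypothetical) and computes how many records Dimensions returned relative to each of the other services, along with the share of each result set that consists of articles.

```python
# (total records, articles only) for three of the sample searches.
counts = {
    '"climate change"': {
        "Dimensions": (1_115_549, 870_571),
        "Scopus": (211_357, 151_304),
        "Web of Science": (179_588, 146_143),
    },
    "subject = chemical engineering": {
        "Dimensions": (283_670, 251_327),
        "Scopus": (2_671_696, 2_093_688),
        "Web of Science": (926_319, 658_970),
    },
    "publication date = 2017": {
        "Dimensions": (4_285_434, 3_530_972),
        "Scopus": (2_834_329, 1_858_035),
        "Web of Science": (2_570_064, 1_792_567),
    },
}


def dimensions_ratio(search: str, service: str) -> float:
    """Total Dimensions records divided by the other service's total."""
    return counts[search]["Dimensions"][0] / counts[search][service][0]


def article_share(search: str, service: str) -> float:
    """Fraction of a service's results for this search that are articles."""
    total, articles = counts[search][service]
    return articles / total


for search in counts:
    for service in ("Scopus", "Web of Science"):
        print(f"{search}: Dimensions / {service} = "
              f"{dimensions_ratio(search, service):.2f}")
    print(f"{search}: article share in Dimensions = "
          f"{article_share(search, 'Dimensions'):.0%}")
```

A ratio above 1 means Dimensions returned more records than the other service for that search; a ratio below 1, as with the chemical engineering subject search, means it returned fewer. These ratios compare result counts only and say nothing about overlap or relevance.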

Overall, the results returned during various sample searches are as expected. Repeating searches from past explorations, I also see the type of content I would expect to find or even recommend to researchers. What I cannot tell, and would not even guess, is how the relevancy ranking currently works. The Dimensions Support Center offers no insights on that topic.

Conclusions

In its infancy, Dimensions shows strong potential in scope and breadth of content. The design-from-scratch approach seems to bring together the strengths of a traditional citation index with those of a modern discovery service. Some common features are missing, but as a free tool I saw nothing that would prevent me from recommending it to a researcher. It is worth watching the development of Dimensions and how it might send waves through the library database landscape. The paid analytic tools and features will be worth exploring over time as well.


This work is licensed under a Creative Commons Attribution 4.0 International License.