URLs in this document have been updated. Links enclosed in {curly brackets} have been changed. If a replacement link was located, the new URL was added and the link is active; if a new site could not be identified, the broken link was removed.

Seeing the Forest of Information for the Trees of Papers: An Information Literacy Case Study in a Geography/Geology Class

Linda Blake

Science Librarian

West Virginia University Libraries

linda.blake@mail.wvu.edu

Tim Warner

Professor of Geology and Geography

Department of Geology and Geography

tim.warner@mail.wvu.edu

West Virginia University

Morgantown, West Virginia


Abstract

After receiving a mini-grant for developing integrated information literacy programs, a Geography/Geology Department faculty member worked with the Science Librarian to embed information literacy in a cross-listed geology and geography course, Geog/Geol 455, Introduction to Remote Sensing. Planning for the revisions to the class started with the identification of five key information literacy standards for the discipline of remote sensing. Each standard was addressed through a combination of teaching, assignments, and measurements. From this planning exercise, two lectures and two laboratory exercises on information literacy were developed. Student response to the information literacy material, as measured through pre- and post-course surveys, was overwhelmingly positive. The project also provided an important opportunity for collaboration between the scientist and the librarian.

Introduction

In the spring of 2009, the West Virginia University (WVU) Libraries offered five grants through the Information Literacy Course Enhancement Program (ILCEP). The grants, managed by the Libraries' Director of Instruction and Information Literacy and offered to teaching faculty, were intended to encourage the eventual campus-wide integration of information literacy across the curriculum. The foundation for the grants and for the University Libraries' information literacy program was the University's 2010 Plan (West Virginia University 2005). Goal 3 in the plan, "Enhance the Educational Environment for Student Learning," had as one of its key indicators "Develop integrated programs that foster increased writing and information literacy across the disciplines."

One of the authors (Warner), a professor of geography and geology, received one of the grants to support systematic information literacy teaching in the cross-listed course GEOG/GEOL 455, Introduction to Remote Sensing. Remote sensing is the study of the earth and its environment using satellite and aerial images. The WVU Science Librarian (Blake) collaborated with him on the grant, building on five years of single 50-minute sessions on literature search methods that she had previously taught to the class.

In prior years, the class enrollment was typically about 30 upper-division undergraduates and graduate students. One of the stated learning outcomes of the class was that students would become familiar with the scientific literature as a way to keep up with the rapidly changing field of remote sensing. Because the students typically came from a wide range of disciplines across the university, including the environmental sciences, social sciences, and humanities, the emphasis on exploring the scientific literature was intended to encourage students to investigate the relevance of remote sensing to their particular disciplines. To this end, students were asked to review five scientific papers at the start of the semester and to write a major research paper at the end of the term. Students chose both the papers they reviewed and their term-paper topics, although term-paper topics had to be approved by the instructor. To select their review papers and the supporting literature for their term papers, students used a variety of methods, ranging from browsing journals at random to systematic database searches.

During the limited library instruction the students had received before the grant, Blake taught them how to search important geoscience databases, including GeoRef and GeoBase. She also demonstrated how to use Web of Science to identify articles citing a key article. Since remote sensing is a technical field, she also taught them how to use Engineering Village. In the year immediately prior to the grant, Blake attempted to teach the students how to build online reference databases using RefWorks, but quickly discovered that this was too much for a 50-minute session. Library instruction took place during the first few days of class because the students were expected to begin reviewing papers in the second week.

In this paper, we provide background on the work leading up to the fall 2009 semester, when the class was offered. We discuss specific teaching activities and present an assessment of the effectiveness of integrating information literacy into the class.

Planning and Preparation

Planning for the revisions to the class began in the summer, prior to the start of the new academic year. A literature review was undertaken to help us understand information literacy concepts, find practical classroom activities, and select assessment tools. DeChambeau and Sasowsky's (2003) work on information literacy in the geosciences was very helpful in identifying the specific information literacy needs of students studying remote sensing. An article addressing information literacy in geography (Kimsey and Cameron 2005) was less useful because of its social science orientation. From a practical standpoint, Teaching and Marketing Electronic Information Literacy Programs (Barclay 2003) provided tips on lesson planning and slides on various information literacy topics, and Teaching Information Literacy: 35 Practical, Standards-Based Exercises for College Students (Burkhardt et al. 2003) provided pertinent active learning exercises. A graphic of the scholarly information process and further teaching methods were found in Information Literacy Instruction That Works: A Guide to Teaching by Discipline and Student Population (Ragains 2006). Three sources were useful in developing assessments. The assessment instruments in Assessing Student Learning Outcomes for Information Literacy Instruction in Academic Institutions (Avery 2003) helped us articulate expectations for student assignments, and some of its measures were incorporated into a grading rubric in the syllabus. We modified a web page evaluation form from A Practical Guide to Information Literacy Assessment for Academic Librarians (Radcliff et al. 2007). Additional examples of assessments were found in Information Literacy Instruction Handbook (Cox 2008).

The Association of College and Research Libraries (ACRL) standards (Association of College and Research Libraries 2000), as well as Schroeder's (2004) information literacy matrix, provided the theoretical framework for our planning. Schroeder's matrix, developed for students at Portland State University, visually displays the areas of information literacy and the level of expertise expected of students in each area (Schroeder 2004). Drawing on past experience with the class and on our readings (DeChambeau and Sasowsky 2003; Ragains 2006), we assessed the information needs of the students. We determined that the skills they needed spanned all of the levels in Schroeder's matrix (foundational, intermediate, discipline-specific, and expert), even though the students were upper-level undergraduates or graduate students. We then created a chart mapping each standard and its outcomes to the teaching needed to help students meet the outcome, the assignments that would give students practice, and the final assessment of whether students had met the standard (Table 1). Our working chart included the five ACRL standards, the relevant ACRL performance indicator, and the related ACRL outcome, together with selected areas and their associated levels from Schroeder's (2004) matrix. The implementation strategy defined how we would help students reach the desired outcomes through teaching, assignments, and measures for evaluating students' success in meeting each standard.

Table 1. ACRL standards (abbreviated due to space considerations) mapped to assignments

| # | ACRL standardᵃ | ACRL performance indicatorᵃ | Schroeder matrix areaᵇ | Schroeder matrix levelᵇ | Implementation: teaching | Implementation: assignment | Implementation: measurements |
|---|---|---|---|---|---|---|---|
| 1 | Determines the nature and extent of the information needed | Identifies a variety of… potential sources of information | Resource evaluation skills | Expert | The scholarly production of information in the geosciences | Student independently selects articles for review | Articles chosen are relevant, appropriate |
| 2 | Accesses needed information effectively and efficiently | Selects the most appropriate investigative methods or information retrieval | Thinking critically & reflecting on the research process | Intermediate | GeoRef, GeoBase, Web of Science, and other databases | Student independently selects articles for review | Articles chosen are relevant, appropriate |
| 3 | Evaluates information and its sources critically | Summarizes the main ideas to be extracted | Thinking critically & reflecting on the research process | Foundational | Refinement of research topic and selection of keywords | Term paper with formatted bibliography | Provides comprehensive overview of the material |
| 4 | Uses information effectively to accomplish a specific purpose | Uses new and prior information | Ethical and legal use of information | Intermediate | Plagiarism issues and citation techniques | Term paper with formatted bibliography | Summarizes ideas effectively |
| 5 | Understands… economic, legal, and social issues… of information and access, … uses information ethically and legally | Acknowledges the use of information sources | Ethical and legal use of information | Discipline specific | How to use RefWorks and lecture on plagiarism | Term paper with formatted bibliography | Uses citations and bibliography correctly |

ᵃ Association of College and Research Libraries (2000)

ᵇ Schroeder (2004)

Having developed a framework of general aims and implementation strategies, and having specified anticipated learning outcomes for the course syllabus, we created an outline detailing the content we expected the students to assimilate. This approach helped us focus on what we wanted students to know about the information milieu of the geosciences: how that information is created, used, organized, and presented, and what information literacy skills are needed to accomplish these tasks. We also added assignments and pre- and post-course surveys so that we could evaluate the effectiveness of the planned changes to the course.

Information Literacy Teaching

Following the structure of the class, which consisted of a two-credit-hour lecture and a one-credit-hour laboratory (the latter meeting for three contact hours), we developed two new one-hour lectures for presentation in the regular classroom and two laboratory sessions to be conducted in the library's computer laboratory (Table 2).

Table 2. Overview of the information literacy instructional components used.

| Instructional approach | Activity |
|---|---|
| Lectures | Focusing a research question and avoiding plagiarism |
| | The research publication culture |
| Laboratory exercises | Developing a topic, identifying academic dishonesty, using databases |
| | Citation management (RefWorks/Write-N-Cite) and evaluating web resources |
| Paper reviews | Students use literature search skills to choose and review five scholarly papers |
| Term paper | Students write a major term paper, employing appropriate citation conventions |

The first of the two lectures addressed the creation and use of information in the geosciences, and specifically in remote sensing. The rationale for including this material was to help the students understand the context in which scientific information is produced and disseminated. We hypothesized that an understanding of the sociology and economics of scientific publishing would help students appreciate the importance of characteristic features of the scientific literature, such as the peer review process and journal rankings. The lecture covered the role of publishing in a scholar's career; trends in publishing, including the changes associated with the move from print to electronic journals; features of scholarly articles in the geosciences; the peer review process; and information sources. The discussion of information sources was designed to help students understand the nuances of the concept of journal quality, as well as how scientists regard non-peer-reviewed material such as general Internet sources, the popular press, and gray literature. Warner presented this lecture.

The second lecture, presented by Blake, focused on developing a research topic and on academic dishonesty. To illustrate the process of developing a research topic, Blake showed the students a series of examples and then gave them an exercise using a grid adapted from Burkhardt et al. (2003). The exercise presented four sample topics, each narrowed to a research question. The students then completed their own grids, moving from broad topic(s) to restricted topic(s), narrowed topic(s), and research question(s). Together with the class graduate assistant, we guided students as they worked individually toward a focused research topic.

Once the students had identified a well-defined research question, the class discussion turned to the creation of a search statement based on keywords in the research question. Search features whose syntax varies across databases, such as Boolean operators, truncation symbols, and wildcards, were explored, and summary guides for the individual databases were handed out.
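The logic of turning a research question into a search statement can be illustrated with a short sketch. The Python below is a minimal illustration under stated assumptions, not the handout given in class: it assumes each concept in the research question has already been expanded into a list of synonyms, joins synonyms with OR and concepts with AND, quotes multi-word phrases, and uses `*` as a truncation symbol. The function name `build_search_statement` and the example terms are hypothetical, and real databases such as GeoRef or Engineering Village each have their own operator and truncation syntax.

```python
def build_search_statement(concepts: list[list[str]]) -> str:
    """Combine concept groups into one Boolean search statement (hypothetical helper).

    Synonyms within a concept are joined with OR; concepts are joined with AND.
    Multi-word terms are wrapped in double quotes for phrase searching.
    Truncation symbols (assumed here to be '*') are passed through unchanged.
    """
    groups = []
    for synonyms in concepts:
        terms = [f'"{t}"' if " " in t else t for t in synonyms]
        group = " OR ".join(terms)
        # Parenthesize a group only when it contains more than one term
        groups.append(f"({group})" if len(terms) > 1 else group)
    return " AND ".join(groups)


# Hypothetical research question: how is satellite remote sensing used
# to detect changes in forest cover?
concepts = [
    ["remote sensing", "Landsat"],
    ["forest*", "woodland*"],
    ["change detection"],
]
print(build_search_statement(concepts))
# ("remote sensing" OR Landsat) AND (forest* OR woodland*) AND "change detection"
```

Grouping synonyms with OR broadens each concept, while AND between concepts narrows the result set to records that touch every part of the question; this mirrors the narrowing from broad topic to research question described above.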

Finally, the class explored plagiarism, academic dishonesty, and University sanctions for cheating, along with writing summaries, paraphrasing, and the convention in the physical sciences of generally avoiding direct quotations. A distinction was made between common knowledge, for which citations do not need to be provided, and specialized knowledge, for which citations are required. A reinforcement activity consisted of a series of scenarios that the students were asked to evaluate with respect to plagiarism. Students held up a green piece of paper if they thought a citation was needed, or a red piece of paper if they thought it was not. For example, students were asked whether a citation was necessary for the statement "About half of the world's rain forest is in the Amazon Basin." The colored paper allowed Blake and Warner to see at a glance how many students believed a citation was needed and to call on students to defend their opinions.

After this lecture in the regular classroom, Blake met with the students twice more, this time in the library's computer laboratory for hands-on instruction. The first session concentrated on searching remote sensing topics in GeoRef, importing references into RefWorks, and searching GeoBase and Engineering Village. During the second laboratory session, students learned how to use Web of Science, with an emphasis on citation searching: starting from a key article, they searched backward in time through the sources it cites and forward in time through the sources that cite it. Also during the second session, Blake taught students how to evaluate Internet resources. Students were divided into groups, given a rubric, and asked to evaluate pre-selected web pages; each group then reported its conclusions to the class regarding the usefulness of its web site for research in the remote sensing class. Finally, students practiced using Write-N-Cite to insert references from RefWorks while composing papers.
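The backward and forward citation searching taught in the second laboratory session can be pictured as following links in a citation network in two directions. The Python sketch below only illustrates that idea; it is not Web of Science's data model or API. The article IDs and the `CITES` mapping are hypothetical, and each article is assumed to map to the list of articles in its bibliography.

```python
# Hypothetical citation data: each article ID maps to the IDs in its bibliography.
CITES = {
    "key2005": ["base1990", "method1998"],
    "review2008": ["key2005", "method1998"],
    "app2009": ["key2005"],
    "method1998": ["base1990"],
    "base1990": [],
}


def cited_sources(article: str, cites: dict[str, list[str]]) -> list[str]:
    """Backward search: the (older) works listed in the article's bibliography."""
    return sorted(cites.get(article, []))


def citing_sources(article: str, cites: dict[str, list[str]]) -> list[str]:
    """Forward search: the (later) works whose bibliographies include the article."""
    return sorted(a for a, refs in cites.items() if article in refs)


print(cited_sources("key2005", CITES))   # ['base1990', 'method1998']
print(citing_sources("key2005", CITES))  # ['app2009', 'review2008']
```

A backward search only needs the key article's own reference list, whereas a forward search requires an index of every article that cites it, which is why citation indexes such as Web of Science are needed for the forward direction.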

Assessment

Ideally, we would have had a control group to compare against the students who received the information literacy instruction. In the absence of a control, we evaluated the effectiveness of the information literacy efforts through surveys of the participating students before and after the class (Appendices 1 and 2). Of the 33 students in the class, 30 completed the pre-course survey, and 23 completed the post-course survey. The pre-course survey had only multiple-choice questions; the post-course survey had both multiple-choice questions and open-ended questions borrowed from Cynthia Comer's assessment of sociology students (Comer 2003).

In the pre-course survey, students were asked to evaluate their information literacy background and skills using a ranked list of descriptors. Asked about their previous exposure to information literacy training, students most commonly chose training in using computer databases (87%); plagiarism instruction was the least commonly chosen category (47%).

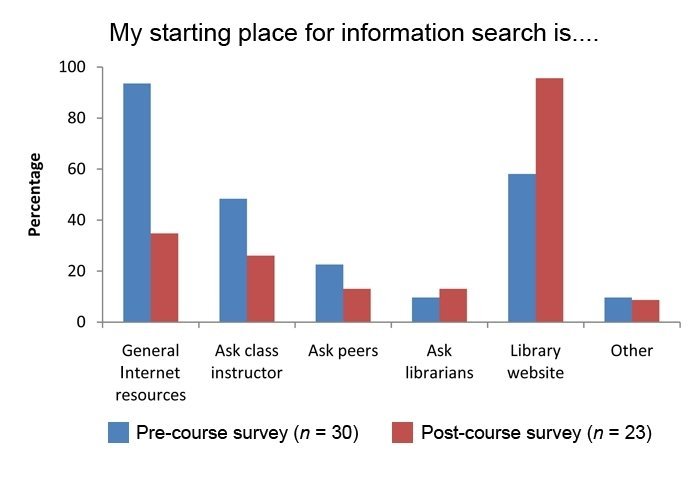

Comparing student evaluations of their information literacy skills before and after the class, the question showing the greatest change asked where respondents first started when working on a research or term paper (Figure 1). Prior to the course, 97% of students listed general Internet resources among their first locations, and only 47% included the library's web site (multiple choices were permitted). After the course, general Internet web sites were selected by only 35% of students, and the library's web site by almost every student (96%).

Figure 1. Graph of pre- and post-course survey results: My starting place for information search is….

Student confidence in using electronic databases and in finding good sources increased notably following the course. With responses coded on a 1-5 scale (1 = least confident; 5 = most confident), the mean response rose from 4.0 to 4.3 for using electronic databases and from 4.1 to 4.5 for finding good sources. Before the course, 17-20% of students were neutral or unsure about their capabilities in these areas; after completing the course, only 0-4% chose these categories.
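The averages reported in this section come from coding ordinal survey responses as integers and taking the mean. The Python sketch below shows that arithmetic, assuming the 5-to-1 agreement coding ("strongly agree" = 5 through "strongly disagree" = 1) used for several of the survey questions. The counts are hypothetical, chosen for illustration, and are not the study's data; the names `LIKERT_CODES`, `likert_mean`, and `share_in` are likewise illustrative.

```python
# Coding assumed here: 5 = strongly agree ... 1 = strongly disagree
LIKERT_CODES = {
    "strongly agree": 5,
    "agree": 4,
    "neutral": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def likert_mean(counts: dict[str, int]) -> float:
    """Mean coded response from a mapping of category -> number of respondents."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses")
    score = sum(LIKERT_CODES[category] * n for category, n in counts.items())
    return score / total


def share_in(counts: dict[str, int], categories: list[str]) -> float:
    """Fraction of respondents whose answer falls in any of the given categories."""
    total = sum(counts.values())
    return sum(counts.get(c, 0) for c in categories) / total


# Hypothetical counts for 23 respondents (illustrative only)
post = {"strongly agree": 9, "agree": 12, "neutral": 1, "disagree": 1, "strongly disagree": 0}
print(round(likert_mean(post), 1))                                        # 4.3
print(round(100 * share_in(post, ["neutral", "disagree", "strongly disagree"])))  # 9
```

Averaging coded categories treats them as equally spaced, a common simplification for Likert-type items; reporting the share of responses in each category, as the figures in this section do, avoids that assumption.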

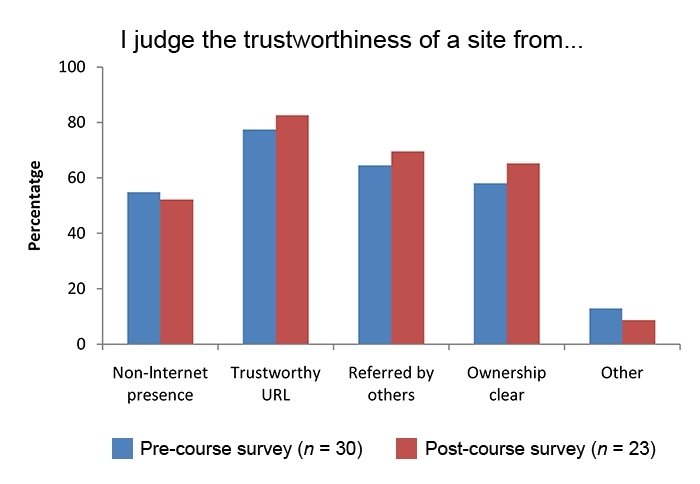

The question that showed the smallest change concerned the methods students used to evaluate the trustworthiness of web sites. Figure 2 shows the percentage of students who selected each method listed (multiple selections were permitted, so the percentages total more than 100%). Both before and after the course, students evaluated web sites primarily on the basis of the credentials of the organization. This finding suggests that these students, who were mainly seniors and graduate students, already had well-developed skills for evaluating general Internet resources. In addition, an Internet search on the scientific topic of remote sensing returns a limited number of sites, almost all of which are easily categorized as either credible or not; indeed, we had difficulty finding questionable web sites for the web site evaluation activity.

Figure 2. Graph of pre- and post-course survey results: I judge the trustworthiness of a web site from…

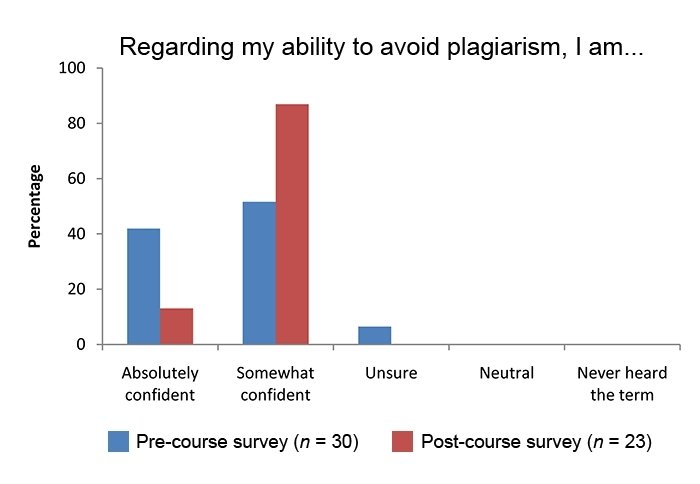

The question on plagiarism showed an interesting change (Figure 3). Prior to the course, 43% of students described their confidence in being able to avoid plagiarism as "absolute" (i.e., total), and 7% were unsure what plagiarism was or how to avoid it. The remainder (53%) chose the middle option, "somewhat confident, but I have always had a bit of a concern." Following the course, both extremes declined: no student chose "unsure," and the proportion selecting "absolute" confidence fell to only 13%. In contrast, the percentage selecting the more cautious middle option rose by 34 percentage points, to 87%. At first glance this may seem a negative outcome, because fewer students registered total confidence, but we interpret it as a positive one. Prior to the course, students appeared to have a very simple notion of plagiarism; after the course, they appeared to be much more aware of the challenges of avoiding it.

Figure 3. Graph of pre- and post-course survey results: Regarding my ability to avoid plagiarism I am... The average response based on a coding of the categories on a scale from 5 (strongly agree) to 1 (strongly disagree) was 4.4 before the course, and 4.1 after the course.

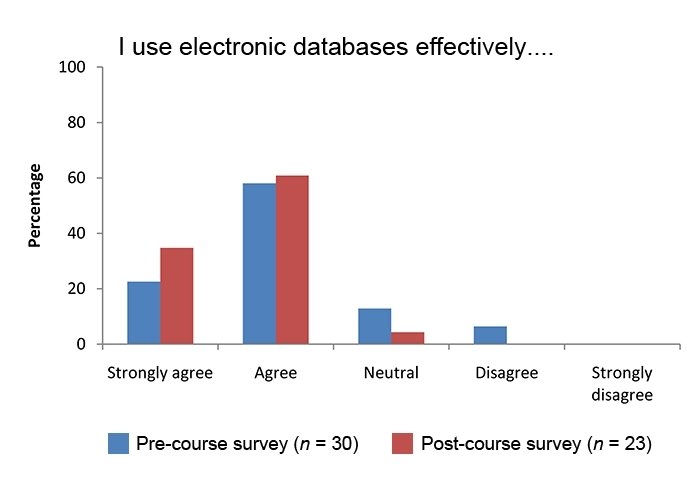

The before-and-after comparisons of student skills were strongly supported by answers to a series of questions that probed student perceptions of how their skills had changed as a direct result of the information literacy component of the course. Each of these questions asked students to rate their agreement with a statement on a scale of "strongly agree," "agree," "neutral," "disagree," and "strongly disagree." If these responses are coded from 5 to 1, respectively, the average response for the questions on how the course had benefited the respondent ranged from 4.3 to 4.7 out of 5.0. Students asserted that they were more efficient at retrieving results from electronic databases (4.3/5.0); that the information would be useful in other courses (4.7/5.0); that they had applied the skills in other courses (4.5/5.0); that they felt better equipped to evaluate information critically; that they were better able to refine research topics (4.4/5.0); and that they had become better information researchers (4.4/5.0). Figure 4 shows the raw data for the question on students' perceptions of their ability to use electronic databases effectively, and shows that a greater percentage of responses tended toward agreement with the statement after the course.

Figure 4. Graph of pre- and post-course survey results: I use electronic databases effectively… The average response based on a coding of the categories on a scale from 5 (strongly agree) to 1 (strongly disagree) was 4.0 before the course, and 4.3 after the course.

Student free-form responses help place these quantitative results in a broader context. The extensive written comments were almost entirely positive. A number of students noted that they had already received some instruction in information literacy, but these students generally also suggested that the additional instruction was valuable. Several students noted the value of RefWorks as a tool for using and organizing references, one going so far as to call it a life-changing tool. One student described citation searching as "sweet."

In summary, the evaluation suggests that the students' information literacy improved notably and that they gained increased sensitivity to issues such as plagiarism. From Warner's perspective as the instructor, the students' enthusiasm and engagement in both in-class and after-class discussions was an affirming experience. The instructor also noted a qualitative difference in the sophistication of the student term papers, which tended to draw on a wider and more appropriate literature than in previous years. This benefit, of course, has to be weighed against the substantial commitment of class and laboratory time to information literacy, as well as the additional demands on the librarian's time. Indeed, one student complained in the university end-of-semester course evaluation that the information literacy content took away from time that should have been spent on the nominal course topic of remote sensing. On the other hand, this complaint should be weighed against the striking overall increase in the course evaluations. For example, over the previous decade, student evaluation of their learning in the remote sensing course averaged 4.53 (on a 1-5 scale, with 1 being poor and 5 excellent), with a maximum value during that decade of 4.68. In 2009, the year the information literacy content was included, the average response was 4.92. Thus, students clearly felt an increased sense of benefit from the course as a whole compared to previous years, although it cannot be proved that this increase resulted specifically from the information literacy content.

Conclusions

The most valuable lesson that we, as collaborators, have learned from the Information Literacy Course Enhancement Program is that it is not enough for a librarian or a faculty member alone to be committed to the importance of information literacy skills in the academic success of students. Both librarians and teaching faculty must have a good understanding of information literacy and the value of incorporating it into the curriculum. This is particularly important as changing technology has altered the landscape of information presentation and retrieval. The WVU Libraries demonstrated their commitment by offering grants to faculty interested in making information literacy a part of their teaching. The positive experience of teaching information literacy has strengthened our resolve to incorporate this topic into other classes we teach.

Regarding the benefit to students, their response to information literacy in this course was overwhelmingly positive in both the quantitative and qualitative evaluations. In particular, the instruction appears to have changed students' approach to the University's paid Internet resources. The most successful aspects of the instruction appear to be associated with the use of online databases and tools, especially advanced search methods and RefWorks. The plagiarism instruction appears to have had the worthwhile effect of increasing students' awareness of the potential pitfalls associated with plagiarism. The effort to increase students' critical skills in web site evaluation appears to have had the least effect, although it is perhaps significant that the proportion of students who began scholarly searches with general Internet search tools declined notably by the end of the course.

The University-wide information literacy grant program culminated in a display of posters from each of the five faculty/librarian teams. The posters were part of the Provost's annual Faculty Academy and gave other faculty members an opportunity to see the successes and lessons learned from the grant winners' collaborations. The faculty and Provost also saw a presentation on Smith College's success in integrating information literacy across the curriculum. Our project was in the first year of the grants. At the time of this writing, the second year has been funded and six other teams are in the process of introducing information literacy to their students. The Director of Instruction and Information Literacy is serving on the current WVU planning committee, so it is hoped that the number of courses offering information literacy as a key component will continue to grow.

References

Association of College and Research Libraries. 2000. Information Literacy Competency Standards for Higher Education [Internet]. [Cited 2010 July 30] Available from: {http://www.ala.org/acrl/standards/informationliteracycompetency}.

Avery, E.F., editor. 2003. Assessing Student Learning Outcomes for Information Literacy Instruction in Academic Institutions. Chicago: Association of College and Research Libraries.

Barclay, D.A. 2003. Teaching and Marketing Electronic Information Literacy Programs: A How-To-Do-It Manual for Librarians. New York: Neal-Schuman.

Burkhardt, J.M. et al. 2003. Teaching Information Literacy: 35 Practical, Standards-Based Exercises for College Students. Chicago: American Library Association.

Comer, C. 2003. Assessing student learning outcomes in sociology. In: Avery, E.F., editor. 2003. Assessing Student Learning Outcomes for Information Literacy Instruction in Academic Institutions. Chicago: Association of College and Research Libraries. p. 101-102.

Cox, N.C. 2008. Information Literacy Instruction Handbook. Chicago: Association of College and Research Libraries.

DeChambeau, A.L. and Sasowsky, I.D. 2003. Using information literacy standards to improve geoscience courses. Journal of Geoscience Education 51(5):490-5.

Kimsey, M.B. and Cameron, S.L. 2005. Teaching and assessing information literacy in a geography program. Journal of Geography 104:17-23.

Radcliff, C.J., Jensen, M.L., Salem, J.A., Burhanna, K.J., and Gedeon, J.A. 2007. A Practical Guide to Information Literacy Assessment for Academic Librarians. Westport, CT: Libraries Unlimited.

Ragains, P. 2006. Information Literacy Instruction That Works: a Guide to Teaching by Discipline and Student Population. New York: Neal-Schuman.

Schroeder, R. 2004. The Developmental Information Literacy Matrix [Internet]. [Cited 2010 July 30] Available from: {http://www.lib.pdx.edu/instruction/Matrix%20v10.doc}.

West Virginia University. 2005. West Virginia University's 2010 Plan: Building the Foundation for Academic Excellence [Internet]. [Cited 2010 October 1] Available from: {https://web.archive.org/web/20160519003641/http://www.wvu.edu:80/~2010plan/documents/2010plan.pdf}.
