URLs in this document have been updated. Links enclosed in {curly brackets} have been changed. If a replacement link was located, the new URL was added and the link is active; if a new site could not be identified, the broken link was removed.

The Impact of Data Source on the Ranking of Computer Scientists Based on Citation Indicators: A Comparison of Web of Science and Scopus

Science and Engineering Libraries

University of Saskatchewan

Saskatoon, Saskatchewan

li.zhang@usask.ca

Abstract

Conference proceedings represent a large part of the literature in computer science. Two Conference Proceedings Citation Index databases were merged into Web of Science in 2008, but very few studies have evaluated how that merger affects citation indicators in computer science relative to other databases. This study explores whether the addition of the Conference Proceedings Citation Indexes to Web of Science has changed citation analysis results compared with Scopus. It compares the citation data of 25 randomly selected computer science faculty members at Canadian universities in Web of Science (with the Conference Proceedings Citation Indexes) and in Scopus. The results show that Scopus retrieved considerably more publications, including both conference proceedings and journal articles. Scopus also generated higher citation counts and h-index values than Web of Science in this field, though the relative citation rankings from the two databases were similar. Either database could therefore be used if a relative ranking is sought. If the goal is more complete citation counts or higher h-index values, Scopus is preferable. Regardless of the source used, citation analysis as a tool for research performance assessment must be constructed and applied with caution because of its technological and methodological limitations.

Introduction

Citation analysis has become one of the assessment tools widely used by academic institutions and policy makers to make important decisions including grant awards, tenure and promotion, funding allocation, and program evaluation. Academic librarians, as the information facilitators on campus, are often asked to provide citation data to directly assist administrators in their decision-making. They are also asked to deliver instructional sessions on conducting citation searching to faculty and researchers. The basic assumption of citation analysis is that the more citations a publication receives, the more influential it is. Aggregated at different levels, citations will reflect the relative impact or quality of a research work, an author, an institution, or a country.

Several factors have contributed to the popularity of citation analysis: 1) the shortcomings and weaknesses of peer review of research performance call for a more objective evaluation method (Cole et al. 1981; Smith 1988; Horrobin 1990); 2) bibliometric indicators can quantify research performance, creating competition among academic institutions and possibly improving their efficiency (Butler 2007; Moed 2007); and 3) the availability of citation databases such as Web of Science and Scopus makes collecting citation data seemingly easy.

Using citation analysis as a tool for research performance evaluation has been controversial, and much has been written on the subject. The following are a few technical problems associated with citation analysis. First, the quality, reliability, and validity of citation analysis depend heavily on the data source used and on how it is interpreted. Each citation database differs in its coverage of publication types, disciplines, languages, and time spans. Depending on the database, bibliometric measures may therefore introduce bias with respect to authors, journals, disciplines, institutions, and countries (Jacso 2010; Weingart 2005). Second, databases contain a large volume of records, and errors and inconsistencies, whether present in the original documents or introduced during indexing, are common (Van Raan 2005). If these problems are not addressed during the search process, the accuracy and rigor of the results will be questionable. Third, citations may occasionally be under-counted because a database's parsing technology fails to identify all matching citations, even when both the citing and the cited publication are indexed in the same database (De Sutter and Van Den Oord 2012). Other problems relate to the methodological design of bibliometrics. For example, citation analysis assigns the same weight to every citation regardless of why a paper is cited, whether positively, negatively, or perfunctorily (Case and Higgins 2000; Cronin 2000). Because different disciplines have developed different citation practices, it is not appropriate to compare citation data across disciplines (Weingart 2005). In addition, bibliometric measures can only be applied at a high level of aggregation (Van Raan 2005): they are more reliable for analyzing large research teams than small ones. Because of these technological and methodological limitations, citation analysis must be constructed, interpreted, and applied with caution.

Currently, many databases and sources provide citation data, including Web of Science, Scopus, Google Scholar, CiteSeerX, and the ACM Digital Library. When conducting a citation analysis, the first choice librarians must make is which database(s) or source(s) are appropriate for the analysis, and this requires knowing the strengths and weaknesses of each. Among all the data sources available for citation studies, Web of Science and Scopus are the most widely used in academic institutions. Web of Science is known for its selectivity: until September 2008, it mainly indexed publications in core journals in the sciences, arts & humanities, and social sciences. In contrast, Scopus promotes its inclusivity and particularly highlights its coverage of conference papers in engineering, computer science, and physics (Elsevier [updated 2013]). In September 2008, two proceedings citation databases, Conference Proceedings Citation Index - Science (CPCI-S) and Conference Proceedings Citation Index - Social Sciences & Humanities (CPCI-SSH), were merged into Web of Science, creating a much larger database. (Individual institutions still have the option to subscribe to Web of Science with or without the Conference Proceedings Citation Indexes.) This change is especially important for fields in which conference proceedings play a crucial role in scholarly communication, such as computer science. Although many studies have compared the coverage, features, and citation indicators of Web of Science and Scopus in various disciplines, very few studies in computer science have been carried out since the two conference proceedings citation databases were added to Web of Science. This study explores whether the addition of CPCI-S and CPCI-SSH to Web of Science has changed citation analysis results compared with Scopus.

Literature Review

Two citation indicators, citation counts and the h-index, are widely used in bibliometric studies. Citation counting, first introduced by Eugene Garfield (1955), is used to assess the quality or impact of a researcher's publications. One of its main weaknesses is that an author's citation count can easily be skewed by a single highly cited paper or by a large number of publications with few citations each, so it is hard to evaluate the quality and the productivity of a researcher's work at the same time. To complement citation counting, a relatively new measure, the h-index, was introduced to assess both the productivity and the impact of a researcher's published works simultaneously (Hirsch 2005). A researcher's h-index is the largest number h such that h of his or her publications have each been cited at least h times. For example, if a researcher has published 20 works, 10 of which have each been cited at least 10 times, but fewer than 11 have each been cited at least 11 times, the h-index is 10.
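The definition translates directly into a short procedure: sort the citation counts in descending order and find the last position i at which the i-th paper still has at least i citations. A minimal Python sketch, using a hypothetical citation list that matches the 20-work example:

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Sort citation counts from most to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    # The paper at 1-based position i supports h = i only if it has >= i citations.
    for i, cites in enumerate(ranked, start=1):
        if cites >= i:
            h = i
        else:
            break
    return h


# Hypothetical record: 20 works, exactly 10 of them cited at least 10 times.
example = [15, 14, 13, 12, 12, 11, 11, 10, 10, 10,
           4, 3, 3, 2, 1, 1, 0, 0, 0, 0]
print(h_index(example))  # 10
```

Because the loop stops at the first paper that falls below its position, a single very highly cited paper raises h by at most one, which is the property that distinguishes the h-index from a raw citation count.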

Numerous studies have been conducted to explore the impact of data source on citation indicators in various research fields, and the results were mixed. For the purpose of this study, the review of the literature will focus on publications discussing the effects of data sources on citation indicators in the field of computer science.

Computer scientists have developed citation patterns unique to their discipline. In computer science, conference publications represent a large body of the literature and are generally considered as important as journal publications. Conference publications differ from journal publications in several respects. First, research results are usually published faster in conference proceedings than in traditional journals, which suits researchers in the rapidly developing field of computer science (Wainer et al. 2010). Second, some dynamic forms of computer science research output, e.g., research results on interactive media, may be unsuitable for the standard format of journal publications (Wainer et al. 2010). Third, face-to-face interactions among researchers at conference venues can stimulate discussion, allowing researchers to seek peer expertise and receive immediate feedback. Research has shown that about 20% of the references in computer science publications are to conference proceedings, a much higher percentage than in other science fields (Lisée et al. 2008). Because of this composition of the literature, the coverage of conference proceedings must be considered when selecting a data source for bibliometric analysis in computer science.

More than a decade ago, a few researchers noticed the lack of conference proceedings coverage in the ISI Science Citation Index (SCI, now part of Web of Science) and explored the possibility of using web-based data sources, in addition to SCI, to conduct citation analysis in computer science. Goodrum et al. (2001) compared highly cited works in computer science in SCI and CiteSeer (now called CiteSeerX). They found that the major distinction between the two data sources was the prevalence of citations to conference proceedings: 15% of the most highly cited works in CiteSeer were conference proceedings, compared with only 3% in SCI. Zhao and Logan (2002) compared the citation results for works on XML (Extensible Markup Language), a subfield of computer science, from SCI and ResearchIndex.com, a web tool (no longer in existence) that automatically indexed research papers on the web. They concluded that, while citation analysis based on a web data source had both advantages and disadvantages compared with SCI, it was a valid method for evaluating scholarly communication in the area.

Bar-Ilan (2006) conducted an egocentric citation and reference analysis of the works of the mathematician and computer scientist Michael O. Rabin in CiteSeer, Google Scholar, and Web of Science. She found that the results from the three data sources differed considerably because of their different collection and indexing policies.

Meho and Rogers (2008) examined the publications of 22 top human-computer interaction (HCI) scientists in a British research project and compared these scientists' citation counts, citation rankings, and h-index values in Web of Science and Scopus. They found that Scopus provided significantly more comprehensive coverage of publications, mainly because it indexed conference proceedings, and thus generated more complete citation counts and higher h-index values for these scholars than Web of Science. They concluded that Scopus could be used as the sole data source for citation-based research and assessment in HCI. This study was carried out before CPCI-S and CPCI-SSH were merged into Web of Science in 2008.

In a study conducted in 2008, Franceschet (2010) compared the publications, citations, and h-index values of scholars in the computer science department of an Italian university in Web of Science and Google Scholar. The differences between the two data sources were significant: Google Scholar identified five times as many publications, eight times as many citations, and h-index values three times as high as Web of Science. Nonetheless, the citation rankings and h-index rankings of the Italian computer scientists obtained from the two sources were correlated. The study also found that the consistency and accuracy of data in Google Scholar were much lower than in Web of Science and other commercial databases.

Moed and Visser (2007) conducted an extensive pilot study of the importance of expanding Web of Science with conference proceedings publications for Dutch computer scientists. In their study, conference publications from Lecture Notes in Computer Science (LNCS), the ACM Digital Library, and the IEEE library were added to Web of Science to create an expanded database for bibliometric analysis. They found that, compared with Web of Science alone, the expanded database increased the publication coverage of the Dutch computer scientists from 25% to 35%. They also found that, although conference proceedings volumes included both more highly cited and more poorly cited volumes than regular journals, citation links among recurring conference proceedings were statistically similar to those among journals.

Few studies have been conducted since CPCI-S and CPCI-SSH were merged into Web of Science. Judit Bar-Ilan (2010) examined the effects of adding CPCI-S and CPCI-SSH to Web of Science. She compared the publications and citations of 47 highly cited computer scientists in Web of Science with and without CPCI-S and CPCI-SSH, and found that the average number of publications per scientist increased by 39% with the addition of the two conference proceedings databases. In terms of document type, when the proceedings citation databases were included, 39% of the publications were proceedings papers and 52% were journal articles; when they were excluded, only 19% were proceedings papers and 68% were journal articles. The results also showed a considerable increase in citation counts with the addition of the two proceedings citation databases. Bar-Ilan further called for more studies comparing the effects of conference publications in Scopus and in Web of Science with CPCI-S and CPCI-SSH.

Wainer et al. (2011) compared the publications of 50 computer scientists in the United States that were missing from Web of Science (with conference proceedings) and from Scopus. Although they did not report a direct comparison of publication coverage between Web of Science and Scopus, we were able to derive these data from the information included in their paper: Scopus included 24% more publications than Web of Science for the US computer scientists. Wainer et al.'s study did not provide information on citation counts or h-index.

Most recently, De Sutter and Van Den Oord (2012) analyzed the publications of three computer scientists at a Belgian university in Web of Science, Scopus, ACM, Google Scholar, and CiteSeerX in 2010. In contrast to the study by Wainer et al. mentioned above, they found that the coverage of publications in Web of Science was comparable to that in Scopus, probably because of the addition of CPCI-S and CPCI-SSH. The results also showed that, while the citation counts from Scopus were on average 27% higher than those from Web of Science, the h-index values and their rankings were similar in the two databases for the three scientists.

Objectives

The above studies demonstrate that the paucity of conference proceedings coverage in Web of Science has been of great concern to information professionals conducting citation analysis in computer science. Most of these studies were carried out before CPCI-S and CPCI-SSH were added to Web of Science; the exceptions are the last three studies, published in 2010 or later. However, these three studies have limitations. Bar-Ilan only compared the results in Web of Science with and without the proceedings databases and did not compare them with other data sources. De Sutter and Van Den Oord's study was based on a small sample from a European university; Wainer et al.'s study was based on the publications of US computer scientists and did not evaluate the impact of the change on citation indicators. Moreover, the results of these last two studies were not consistent. Publication patterns may vary by country, because each country's award and evaluation policies may directly affect researchers' decisions on where and how to publish their results (Wainer et al. 2011). Samples from a variety of countries therefore need to be studied to gain a more complete understanding of how the addition of CPCI-S and CPCI-SSH to Web of Science affects citation analysis in comparison to other data sources.

The current study explores the citation data of computer scientists at Canadian universities. To our knowledge, no existing study has focused on computer scientists in Canada. More specifically, we compare the citation results of Canadian computer scientists in Web of Science with the Conference Proceedings Citation Indexes and in Scopus, because many Canadian university libraries subscribe to both citation databases. The following questions are investigated:

- Are there differences in coverage (for example, in the number of publications and in document types) between Web of Science with the Conference Proceedings Citation Indexes and Scopus in the computer science field?

- What is the impact of using each of the two citation databases on the citation counts, h-index values, and citation rankings of researchers in computer science?

Methods

A list of faculty members in computer science departments, schools, or equivalent units at 15 Canadian medical doctoral universities was compiled from their websites. In Canada, Maclean's University Rankings categorizes universities into three types: medical doctoral, comprehensive, and primarily undergraduate (University Rankings [updated 2013]). There are 15 medical doctoral universities in Canada. They offer a broad range of PhD programs and are widely recognized as the most research-intensive universities in the country. We believe that the publications of computer science faculty at the medical doctoral universities fairly represent research activity in this field in Canada. Only regular faculty with the position title of assistant professor, associate professor, or professor were included; professors emeriti, instructors, lecturers, adjunct faculty, and cross-appointed faculty were excluded. From the 496 faculty members who met these criteria, a sample of 25 was selected by random number generation. For each of the 25 sampled faculty members, both Web of Science (with CPCI-S and CPCI-SSH) and Scopus were searched to identify his or her publications.
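The tool used for random number generation is not specified. A minimal sketch of drawing 25 distinct faculty members from the 496 eligible ones, assuming the eligible faculty are numbered 1 to 496 in the compiled list and using Python's standard `random` module with an arbitrary, hypothetical seed:

```python
import random

ELIGIBLE_FACULTY = 496  # faculty meeting the inclusion criteria
SAMPLE_SIZE = 25

# Hypothetical seed: fixing it lets the same sample be redrawn later.
rng = random.Random(2013)

# Sample without replacement, so no faculty member can be picked twice.
# Numbers are 1-based positions in the compiled faculty list.
selected = sorted(rng.sample(range(1, ELIGIBLE_FACULTY + 1), SAMPLE_SIZE))
print(selected)
```

Sampling without replacement matters here: drawing 25 independent random numbers could select the same person twice and leave a smaller effective sample.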

In Web of Science, searching for the publications of a particular author was somewhat complicated. The default "Search" function was used. Because Web of Science indexes only an author's last name and first and/or middle initials, it is difficult to ascertain whether the retrieved publications were indeed written by the author searched. For example, Karen Singh and Kevin Singh would both be indexed under "Singh K", so searching the author field for "Singh K" would retrieve many irrelevant results. This is particularly problematic for common names. We therefore searched both the "Author" field and the "Enhanced Organization" field to identify the publications of the intended author and to reduce false hits. Because authors frequently list their names slightly differently across publications, we searched all possible name variations; for example, for the author "Oliver W W Yang", we used the search string "Yang O OR Yang OW OR Yang OWW". The results were manually screened to confirm that the publications were written by the intended author. In uncertain cases, we filtered the results to include only computer science-related publications using the Web of Science categories: Computer Science OR Engineering, Electrical & Electronic OR Mathematics, Applied OR Telecommunications OR Mathematical & Computational Biology.
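Building the name-variant string can be scripted. A minimal sketch, assuming (as in the "Oliver W W Yang" example) that the useful variants are the surname followed by the first one, two, and so on initials; `wos_author_variants` is a hypothetical helper, not part of any Web of Science tool, and its output is simply the text typed into the Author field:

```python
def wos_author_variants(last_name, initials):
    """Join name variants with OR, e.g. 'Yang O OR Yang OW OR Yang OWW'.

    Assumption: each variant keeps the first k initials (k = 1..len(initials)),
    mirroring the truncated forms under which an author's name may be indexed.
    """
    variants = [f"{last_name} {''.join(initials[:k])}"
                for k in range(1, len(initials) + 1)]
    return " OR ".join(variants)


# "Oliver W W Yang" has the initials O, W, W.
print(wos_author_variants("Yang", ["O", "W", "W"]))
# Yang O OR Yang OW OR Yang OWW
```

The generated string only widens recall; the manual screening and category filtering described above are still what remove false matches such as the two "Singh K" authors.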

In Scopus, the "Author Search" function was used to identify a researcher's publications by searching for last name, first name, and affiliation. Scopus assigns each author an Author ID and automatically searches for name variations, which made the search relatively straightforward. In a few cases, the same author was listed under more than one Author ID. These cases were examined further to determine whether the records belonged to the author of interest, and, if confirmed, the publications under the different Author IDs were merged.

The publication list of each researcher was downloaded from both Web of Science and Scopus, and the number of publications, document types (journal articles, conference proceedings, reviews, editorials, etc.), citation counts, and h-index for each researcher in each database were recorded. Because neither Web of Science nor Scopus assigns a document type to articles in press, we assigned one to these publications after examining them. It should be noted that, in Web of Science, a considerable number of publications, many of them published in the Lecture Notes series and the IEEE Transactions series, were categorized as both journal articles and conference proceedings. In such cases, each paper was examined and assigned a single document type based on the author's judgment. When the same publication was indexed twice in a database, the duplicate record was removed.
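Duplicate records were removed by inspection. A minimal sketch of an automated first pass, assuming each downloaded record is a dictionary with hypothetical `title` and `year` keys, treats two records as duplicates when their normalized titles and years match. Flagged pairs would still need a manual check, because a conference paper and its journal version can share a title and year.

```python
import re


def normalize_title(title):
    """Lowercase a title and collapse punctuation and whitespace to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def remove_duplicates(records):
    """Keep the first record seen for each (normalized title, year) key.

    `records` is a list of dicts with hypothetical "title" and "year" keys,
    e.g. rows parsed from a database export.
    """
    seen = set()
    unique = []
    for record in records:
        key = (normalize_title(record["title"]), record["year"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


records = [
    {"title": "Mining Sensor Data at Scale", "year": 2011},
    {"title": "Mining sensor data at scale.", "year": 2011},  # same paper, indexed twice
    {"title": "A Hypothetical Second Paper", "year": 2012},
]
print(len(remove_duplicates(records)))  # 2
```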

Data were collected from January to March 2013.

Results

Table 1 lists the name, university, and position of the 25 faculty members randomly selected from the 496. Of these 25, 16 were professors, six were associate professors, and the positions of the remaining three were not publicly available (Table 1). All three were from Dalhousie University, which did not provide faculty position details on its website; however, they are believed to have met the inclusion criteria because they were listed in the regular faculty category.

Table 1. Randomly selected sample of 25 faculty members

| Faculty | University | Position |
|---|---|---|
| Abidi, Raza | Dalhousie University | N/A |
| Aboulhamid, El Mostapha | University of Montreal | Professor |
| Adams, Carlisle | University of Ottawa | Professor |
| Aïmeur, Esma | University of Montreal | Professor |
| Bengio, Yoshua | University of Montreal | Professor |
| Boyer, Michel | University of Montreal | Associate Professor |
| Cook, Stephen | University of Toronto | Professor |
| De Freitas, Nando | University of British Columbia | Professor |
| Hayden, Patrick | McGill University | Associate Professor |
| Heidrich, Wolfgang | University of British Columbia | Professor |
| Hu, Alan | University of British Columbia | Professor |
| Matwin, Stan | Dalhousie University | N/A |
| McCalla, Gord | University of Saskatchewan | Professor |
| Safavi-Naini, Rei | University of Calgary | Professor |
| Sampalli, Srinivas | Dalhousie University | Professor |
| Schneider, Kevin A. | University of Saskatchewan | Professor |
| Sekerinski, Emil | McMaster University | Associate Professor |
| Singh, Karan | University of Toronto | Professor |
| Stanley, Kevin G. | University of Saskatchewan | Associate Professor |
| Szafron, Duane | University of Alberta | Professor |
| Tennent, Bob | Queen's University | Professor |
| Tran, Thomas T. | University of Ottawa | Associate Professor |
| Watters, Carolyn R. | Dalhousie University | N/A |
| Wohlstadter, Eric | University of British Columbia | Associate Professor |
| Yang, Oliver | University of Ottawa | Professor |

Publications

Figure 1 compares the total number of publications identified in the two databases. A total of 1,089 publications were identified in Web of Science for the 25 faculty members, compared with 1,801 in Scopus; Scopus thus identified 65% more publications. The two databases had 895 publications in common, and searching both yielded 1,995 distinct publications in total. As can be seen in Figure 1, Scopus identified about 90% of the total publications, whereas Web of Science identified about 55%. The 906 publications found only in Scopus made up 45% of the total, while the 194 found only in Web of Science made up 10%.
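The coverage figures follow from the three reported counts by inclusion-exclusion. A short check in Python, using only the numbers stated in the paragraph above:

```python
# Counts reported for the 25 faculty members.
wos_total = 1089     # publications found in Web of Science
scopus_total = 1801  # publications found in Scopus
overlap = 895        # publications found in both

# Inclusion-exclusion: distinct publications found in either database.
union = wos_total + scopus_total - overlap
scopus_only = scopus_total - overlap
wos_only = wos_total - overlap


def share(n):
    """Percentage of all distinct publications, rounded to a whole number."""
    return round(100 * n / union)


print(union, scopus_only, wos_only)  # 1995 906 194
print(share(scopus_total), share(wos_total), share(scopus_only), share(wos_only))  # 90 55 45 10
print(round(100 * (scopus_total - wos_total) / wos_total))  # 65
```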

Table 2 compares the number of publications by document type in more detail. Of the 1,089 records identified in Web of Science, 55% were conference proceedings and 41% were journal articles. In Scopus, 62% were conference proceedings and 34% were journal articles. Scopus indexed considerably more conference proceedings than Web of Science (524 more, or 87% more), and its journal article count was 37% higher. The results show that, although CPCI-S and CPCI-SSH have been part of Web of Science since 2008, Scopus still covers considerably more conference proceedings and journal articles in computer science. The average number of publications per Canadian computer science faculty member was 43 in Web of Science and 72 in Scopus.
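The percentages in the document-type comparison are shares of each database's total plus differences relative to the Web of Science count. A short sketch reproducing them from the counts in Table 2; the Web of Science baseline is inferred from the reported figures (for example, 524 / 601 ≈ 87%):

```python
# (Web of Science, Scopus) publication counts by document type, from Table 2.
counts = {
    "Conference Proceedings": (601, 1125),
    "Journal Articles": (444, 608),
    "Reviews": (7, 23),
    "Editorials": (19, 25),
    "Other": (18, 20),
}

wos_total = sum(wos for wos, _ in counts.values())
scopus_total = sum(scopus for _, scopus in counts.values())


def pct_more(wos, scopus):
    """Scopus minus Web of Science, as a percentage of the Web of Science count."""
    return 100 * (scopus - wos) / wos


for doc_type, (wos, scopus) in counts.items():
    print(f"{doc_type}: {wos / wos_total:.0%} of WoS, {scopus / scopus_total:.0%} of Scopus, "
          f"{pct_more(wos, scopus):.0f}% more in Scopus")

print(wos_total, scopus_total, f"{pct_more(wos_total, scopus_total):.0f}%")  # 1089 1801 65%
```

Using the smaller database as the baseline is why small categories such as reviews show very large percentage differences (229%) from only 16 additional records.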

Table 2. Total publications identified by document type

| Document Type | Web of Science: # of Publications | Web of Science: % of Total | Scopus: # of Publications | Scopus: % of Total | Difference: # of Publications | Difference: % of Web of Science Count |
|---|---|---|---|---|---|---|
| Conference Proceedings | 601 | 55% | 1,125 | 62% | 524 | 87% |
| Journal Articles | 444 | 41% | 608 | 34% | 164 | 37% |
| Reviews | 7 | 1% | 23 | 1% | 16 | 229% |
| Editorials | 19 | 2% | 25 | 1% | 6 | 32% |
| Other* | 18 | 2% | 20 | 1% | 2 | 11% |
| Total | 1,089 | 100% | 1,801 | 100% | 712 | 65% |
| Average/faculty | 43 | N/A | 72 | N/A | 29 | 67% |

*Other types of documents include meeting abstracts, short surveys, notes, letters, conference reviews, and book chapters.

Citation Counting and h-index

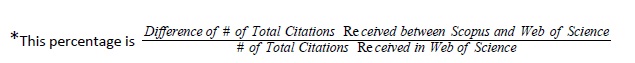

Table 3 compares the citations received and the citation rankings of the 25 sampled faculty members in Web of Science and Scopus. A total of 9,217 citations were found in Web of Science, compared with 15,183 in Scopus; Scopus retrieved 65% more citations. This was not surprising, because considerably more publications were identified in Scopus. A detailed examination showed that in three cases (Cook, McCalla, and Tennent), more citations were retrieved from Web of Science than from Scopus. Thus, although more citations can usually be expected in Scopus at the aggregate level, this is not always the case for individual researchers. In five cases (Singh, Schneider, Safavi-Naini, Adams, and Stanley), the number of citations in Scopus was considerably higher than in Web of Science, by 394% to 5,132%. Even when these five outliers were excluded, the total number of citations from Scopus was still 45% higher than that from Web of Science. Spearman's rank order correlation, a nonparametric measure of the strength of association between two ranked variables, was calculated to determine the similarity of the citation rankings. The result showed a strong, positive correlation between the rankings in Web of Science and in Scopus (Rs=0.80, p<0.05), meaning that the citation rankings in the two databases were similar despite the different citation counts.
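Spearman's coefficient is the Pearson correlation computed on ranks. A minimal pure-Python sketch, assuming tied values receive the average of the ranks they span (a common convention; Table 3 instead gives distinct ranks to the three authors tied at 128 Scopus citations, so results on the full table may differ slightly):

```python
def average_ranks(values):
    """Rank values from largest (rank 1) to smallest; ties share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across a run of tied values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sum((a - mean_x) ** 2 for a in rx) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in ry) ** 0.5
    return cov / (sd_x * sd_y)


# First three authors in Table 3 (Bengio, Cook, De Freitas): citations per database.
wos = [2072, 1713, 927]
scopus = [3255, 954, 1451]
print(round(spearman_rho(wos, scopus), 2))  # 0.5
```

In practice, `scipy.stats.spearmanr` computes the same coefficient (with average ranks for ties) together with a p-value.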

Table 3. Comparison of citation counts in Web of Science and Scopus

| Author | Web of Science: Total Citations | Web of Science: Ranking | Scopus: Total Citations | Scopus: Ranking | Difference: Citations | Difference: Percentage* | Difference: Ranking (Web of Science rank minus Scopus rank) |
|---|---|---|---|---|---|---|---|
| Bengio, Yoshua | 2,072 | 1 | 3,255 | 1 | 1,183 | 57% | 0 |
| Cook, Stephen | 1,713 | 2 | 954 | 7 | -759 | -44% | -5 |
| De Freitas, Nando | 927 | 3 | 1,451 | 2 | 524 | 57% | 1 |
| Szafron, Duane | 694 | 4 | 924 | 8 | 230 | 33% | -4 |
| Matwin, Stan | 531 | 5 | 683 | 9 | 152 | 29% | -4 |
| Heidrich, Wolfgang | 460 | 6 | 1,013 | 5 | 553 | 120% | 1 |
| Hayden, Patrick | 447 | 7 | 1,258 | 3 | 811 | 181% | 4 |
| Yang, Oliver | 405 | 8 | 1,164 | 4 | 759 | 187% | 4 |
| Boyer, Michel | 396 | 9 | 402 | 11 | 6 | 2% | -2 |
| McCalla, Gord | 262 | 10 | 205 | 17 | -57 | -22% | -7 |
| Watters, Carolyn R. | 222 | 11 | 366 | 13 | 144 | 65% | -2 |
| Tennent, Bob | 216 | 12 | 128 | 19 | -88 | -41% | -7 |
| Hu, Alan | 166 | 13 | 374 | 12 | 208 | 125% | 1 |
| Aboulhamid, El Mostapha | 155 | 14 | 347 | 14 | 192 | 124% | 0 |
| Singh, Karan | 98 | 15 | 545 | 10 | 447 | 456% | 5 |
| Aïmeur, Esma | 96 | 16 | 152 | 18 | 56 | 58% | -2 |
| Abidi, Raza | 80 | 17 | 219 | 16 | 139 | 174% | 1 |
| Wohlstadter, Eric | 75 | 18 | 128 | 20 | 53 | 71% | -2 |
| Schneider, Kevin A. | 52 | 19 | 257 | 15 | 205 | 394% | 4 |
| Tran, Thomas T. | 50 | 20 | 70 | 23 | 20 | 40% | -3 |
| Sampalli, Srinivas | 34 | 21 | 97 | 22 | 63 | 185% | -1 |
| Sekerinski, Emil | 27 | 22 | 44 | 24 | 17 | 63% | -2 |
| Safavi-Naini, Rei | 19 | 23 | 994 | 6 | 975 | 5,132% | 17 |
| Adams, Carlisle | 15 | 24 | 128 | 21 | 113 | 753% | 3 |
| Stanley, Kevin G. | 5 | 25 | 25 | 25 | 20 | 400% | 0 |
| Total | 9,217 | N/A | 15,183 | N/A | 5,966 | 65% | N/A |

*Difference as a percentage of the Web of Science citation count.

Table 4 shows the h-index of each researcher. The average h-index was 7.12 in Web of Science and 9.2 in Scopus. The highest h-index was 19 in Web of Science and 22 in Scopus. The average difference in h-index was 2.1. A paired t-test, which compares the means of two related measurements taken on the same group, was performed to determine whether this difference was statistically significant. The test indicated that the difference was significant (t=2.3, df=24, p=0.03). We also ran a Spearman's rank order correlation test to determine whether the h-index rankings in the two databases were similar. The correlation between the two rankings was strong (Rs=0.73, p<0.01), indicating that the h-index rankings were similar even though the h-index values differed between the two databases.
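The paired t statistic is the mean of the per-author differences divided by its standard error. A sketch using the h-index pairs from Table 4 and only Python's standard library; it computes t but not the p-value, which `scipy.stats.ttest_rel` would return as well:

```python
import math
import statistics

# h-index values from Table 4, in table order: (Web of Science, Scopus).
pairs = [
    (19, 8), (18, 22), (12, 14), (11, 18), (11, 17), (10, 17), (9, 8),
    (9, 5), (8, 7), (8, 12), (8, 9), (7, 12), (7, 3), (6, 12), (6, 9),
    (5, 7), (5, 6), (4, 5), (3, 16), (3, 3), (3, 6), (2, 4), (2, 4),
    (1, 3), (1, 3),
]

diffs = [scopus - wos for wos, scopus in pairs]  # Scopus minus Web of Science
n = len(diffs)
mean_diff = statistics.mean(diffs)
sd_diff = statistics.stdev(diffs)     # sample SD (n - 1 denominator)
se_diff = sd_diff / math.sqrt(n)      # standard error of the mean difference
t_stat = mean_diff / se_diff
print(f"n={n}, mean={mean_diff:.2f}, SD={sd_diff:.2f}, SE={se_diff:.2f}, "
      f"t={t_stat:.2f}, df={n - 1}")
# n=25, mean=2.08, SD=4.51, SE=0.90, t=2.31, df=24
```

The computed standard error, about 0.90, matches the 0.9 in the difference column of Table 4, and t rounds to the reported 2.3 with 24 degrees of freedom.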

Table 4. Comparison of h-index in Web of Science and Scopus

| Author | h-index (Web of Science) | h-index (Scopus) | Difference (Scopus minus Web of Science) |
|---|---|---|---|
| Cook, Stephen | 19 | 8 | -11 |
| Bengio, Yoshua | 18 | 22 | 4 |
| Szafron, Duane | 12 | 14 | 2 |
| Hayden, Patrick | 11 | 18 | 7 |
| Heidrich, Wolfgang | 11 | 17 | 6 |
| Yang, Oliver | 10 | 17 | 7 |
| Matwin, Stan | 9 | 8 | -1 |
| McCalla, Gord | 9 | 5 | -4 |
| Boyer, Michel | 8 | 7 | -1 |
| De Freitas, Nando | 8 | 12 | 4 |
| Watters, Carolyn R. | 8 | 9 | 1 |
| Hu, Alan | 7 | 12 | 5 |
| Tennent, Bob | 7 | 3 | -4 |
| Singh, Karan | 6 | 12 | 6 |
| Aboulhamid, El Mostapha | 6 | 9 | 3 |
| Abidi, Raza | 5 | 7 | 2 |
| Aïmeur, Esma | 5 | 6 | 1 |
| Wohlstadter, Eric | 4 | 5 | 1 |
| Safavi-Naini, Rei | 3 | 16 | 13 |
| Sekerinski, Emil | 3 | 3 | 0 |
| Schneider, Kevin A. | 3 | 6 | 3 |
| Tran, Thomas T. | 2 | 4 | 2 |
| Adams, Carlisle | 2 | 4 | 2 |
| Sampalli, Srinivas | 1 | 3 | 2 |
| Stanley, Kevin G. | 1 | 3 | 2 |
| Average | 7.12 | 9.2 | 2.08 |
| SD | 4.7 | 5.5 | 4.5 |
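The statistics reported for Table 4 can be recomputed from the table itself. The Python sketch below uses only the standard library, and its function names are illustrative. It computes the paired t statistic on the Scopus minus Web of Science differences. It also computes Spearman's rho as the Pearson correlation of ranks, with tied values given their average rank.

The results are a mean difference of 2.08, a sample standard deviation of the differences of about 4.5, a standard error of about 0.9, and t ≈ 2.31 with 24 degrees of freedom. The two-tailed 5% critical value for 24 degrees of freedom is about 2.06, which is consistent with the reported p = 0.03. With this tie-handling convention, rho comes out at about 0.69 rather than the reported 0.73. The text does not say how ties were ranked, so the gap may reflect a different convention.

```python
import math
import statistics

# h-index values transcribed from Table 4, in the table's author order.
wos = [19, 18, 12, 11, 11, 10, 9, 9, 8, 8, 8, 7, 7, 6, 6, 5, 5, 4, 3, 3, 3, 2, 2, 1, 1]
scopus = [8, 22, 14, 18, 17, 17, 8, 5, 7, 12, 9, 12, 3, 12, 9, 7, 6, 5, 16, 3, 6, 4, 4, 3, 3]


def paired_t(x, y):
    """Paired t statistic (and its pieces) for the differences y - x."""
    diffs = [b - a for a, b in zip(x, y)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)      # sample SD of the differences
    se_d = sd_d / math.sqrt(n)          # standard error of the mean difference
    return mean_d, sd_d, se_d, mean_d / se_d, n - 1


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1           # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho computed as the Pearson correlation of tie-averaged ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = statistics.mean(rx), statistics.mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


mean_d, sd_d, se_d, t, df = paired_t(wos, scopus)
print(f"mean difference={mean_d:.2f}, SD={sd_d:.2f}, SE={se_d:.2f}, t={t:.2f}, df={df}")
print(f"Spearman rho={spearman(wos, scopus):.2f}")
```

The p-value itself needs the t distribution, which the standard library lacks. A statistics package would normally supply it, so the sketch stops at the t statistic.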

Discussion

The environment in which academic librarians work is constantly changing, and the services we provide need to reflect these changes to keep libraries indispensable to their parent institutions. Involvement in research impact assessment, such as the use of bibliometrics, is one of the opportunities for academic libraries to grow with their organizations (Feltes et al. 2012). Librarians' value in research assessment lies not only in providing bibliometric data but also in highlighting the strengths and weaknesses of these tools, so that administrators can make informed decisions. When undertaking these tasks, we need to bear a few key points in mind to ensure the quality of the analysis. For example, which data sources are most appropriate for a particular discipline? What special techniques does bibliometric analysis require? What are its limitations? This paper explores the impact of two data sources, Web of Science and Scopus, on citation analysis, using Canadian computer scientists as a case study.

In computer science publishing culture, conference proceedings are a favoured venue for original research, often in its final form. When conducting bibliometric analysis in this field, a data source's coverage of conference proceedings should therefore be one of the key factors considered. This study is the first to target computer scientists in Canadian universities. It explores whether the addition of CPCI-S and CPCI-SSH has changed the citation results of Web of Science in comparison to Scopus.

With regard to the first research question, on coverage differences, the results show that Scopus retrieved significantly more publications in total (65% more) than Web of Science for computer science researchers in Canadian universities. Scopus also retrieved more documents of every type: conference proceedings, journal articles, reviews, editorials, and other types. This percentage (65%) is considerably higher than the 24% found in a previous study of publications by computer scientists in the United States (Wainer et al. 2011). The largest difference between Web of Science and Scopus was in conference proceedings. This finding indicates that, even after CPCI-S and CPCI-SSH were added to Web of Science, Scopus still has more comprehensive conference coverage in computer science. Scopus also covered more journal articles than Web of Science in this field. These results suggest that Scopus is more suitable for publication searches on Canadian researchers in computer science.

Overall, the 25 faculty members received significantly more citations (65% more) in Scopus than in Web of Science, yet the citation rankings from the two databases were not significantly different. The h-index showed a similar pattern: although Scopus produced higher h-index values than Web of Science, the h-index rankings from the two databases were strongly correlated. These findings suggest that either database would be appropriate if a relative ranking, such as a citation ranking, is sought. If the purpose is to obtain higher citation counts or h-index values, Scopus would be preferred. Because citation counts and h-index values differ substantially between the databases, they should be compared only within a single database.
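The database dependence of the h-index follows directly from its definition (Hirsch 2005): an author has index h if h of their papers have at least h citations each. The Python sketch below applies that definition to two invented citation lists for the same hypothetical author. The second list includes extra papers and somewhat higher counts, as a more inclusive database might.

```python
def h_index(citations):
    """Largest h such that at least h papers have h or more citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for position, cites in enumerate(ranked, start=1):
        if cites >= position:
            h = position
        else:
            break  # counts only decrease from here, so no larger h is possible
    return h


# Hypothetical per-paper citation counts for one author, as two databases might report them.
database_a = [25, 14, 9, 6, 3, 1]
database_b = [31, 18, 12, 9, 7, 6, 4, 2, 0]  # extra papers, somewhat higher counts

print(h_index(database_a), h_index(database_b))
```

Here the first list yields h = 4 and the second h = 6. Neither value is wrong; each reflects what its source indexes, which is why h-index values are best compared within one database.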

With regard to the second research question, on the impact of the choice of database, citation counts and h-index values from Scopus were not always higher than those from Web of Science for individual researchers. In a few cases, both were actually higher in Web of Science than in Scopus. This finding implies that Scopus alone may be sufficient when the citations of a group of researchers are analyzed. For an individual researcher, however, it may be necessary to consult both databases to obtain a more accurate aggregate. Meho and Rogers (2008) studied researchers in human-computer interaction (HCI), a sub-field of computer science. They found that h-index values and rankings aggregated from the union of Scopus and Web of Science were not significantly different from those generated from Scopus alone. They suggested that it is not necessary to use both databases to generate an aggregated h-index, as the process is extremely time-consuming and labor-intensive. It is unknown whether this finding applies to computer science as a whole, because research has shown that computer science sub-fields can have different publication patterns (Wainer et al. 2013). The next step of this research will be to examine how combining the results of Web of Science, Scopus, and possibly other databases such as Google Scholar and CiteSeerX affects citation data in computer science.
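An aggregated h-index of the kind Meho and Rogers (2008) examined requires matching the records that describe the same publication in both databases before counting. The Python sketch below shows one simple way to do this under stated assumptions; it is not their procedure. Records are matched on a hypothetical key built from the normalized title and the year, and the higher of the two citation counts is kept for each matched publication. That higher count is only a lower bound on the true union of citing documents, since an exact union would require comparing the citing records themselves. All records are invented.

```python
import re


def h_index(citations):
    """Largest h such that at least h papers have h or more citations each."""
    ranked = sorted(citations, reverse=True)
    # Counts are in descending order, so the condition holds only for a prefix.
    return sum(1 for position, cites in enumerate(ranked, start=1) if cites >= position)


def match_key(title, year):
    """Hypothetical matching rule: lower-cased title with punctuation dropped, plus year."""
    words = re.sub(r"[^a-z0-9 ]", " ", title.lower()).split()
    return " ".join(words), year


def merge_records(*databases):
    """Union of (title, year, citations) records, keeping the higher count per match."""
    merged = {}
    for records in databases:
        for title, year, cites in records:
            key = match_key(title, year)
            merged[key] = max(cites, merged.get(key, 0))
    return merged


# Invented records for one author; matching titles differ only in case and punctuation.
wos_records = [
    ("Example Paper One: Methods", 2004, 10),
    ("Example Paper Two", 2006, 4),
]
scopus_records = [
    ("Example paper one - methods", 2004, 12),
    ("Example paper two", 2006, 1),
    ("Example Conference Paper", 2008, 6),  # indexed only in this database
]

merged = merge_records(wos_records, scopus_records)
print(
    h_index(c for _, _, c in wos_records),
    h_index(c for _, _, c in scopus_records),
    h_index(merged.values()),
)
```

In this invented case each database alone gives h = 2, while the merged list gives h = 3. The merge combines a paper found only in one source with the higher count that the other source reports for a different paper.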

Generating accurate citation data depends on several factors. First, librarians and information professionals should be aware of the strengths and weaknesses of the databases in a given subject area and select the appropriate database(s) for a citation search. Peter Jacso (2011) points out that the substantial differences between databases in publication counts, citation counts, and h-index values arise from differences in the breadth and consistency of their source coverage. Web of Science and Scopus have different policies on which publications they index. Web of Science indexes core or influential journals and excludes those considered non-core in a given field, whereas Scopus is intentionally more inclusive. Raw measures such as publication counts, citation counts, and h-index values therefore naturally differ. Although both databases claim to be multidisciplinary, the depth of their coverage of a given subject area differs (Jacso 2009). Scopus is known to have better coverage in science and technology fields (Jacso 2008). The results of this study confirm that this holds in computer science even after the two Conference Proceedings Citation Index databases were added to Web of Science. The results may not apply to other fields such as the social sciences and humanities, or even to other sciences.

Second, obtaining comprehensive citation data depends greatly on the searcher's skill and persistence. The searcher must identify all variants of an author's name, the organizations the author has worked for, and, sometimes, incorrect names introduced by the authors themselves or by data errors in the databases (Jacso 2011). This is a particular concern for Web of Science, because works by the same author are not automatically indexed under the same name abbreviation. For this project, much effort was spent identifying name variants by browsing the publications. Identifying the organizations that authors have worked for is difficult because this information is often not publicly available. An extreme example is Safavi-Naini, who received 19 citations in Web of Science and 994 in Scopus, which strongly affected her ranking by citation counts and h-index. Further examination found that many of her works were published before she joined her current institution, so searching by the combination of author name and organization missed many of her publications. This example illustrates that citation data in Web of Science can be seriously deflated when detailed author information is not available. Librarians or information professionals should collect detailed information by consulting the authors in order to achieve more comprehensive results. A better approach still is to use an author's publication list, where one is available, to generate the citation numbers. In this study, however, that approach was not possible because many of the computer scientists did not provide a complete list of their publications on their websites.
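Part of the name-variant work can be automated before manual checking. The Python sketch below is a hypothetical helper, not a feature of Web of Science or Scopus. It reduces names in "Surname, Given names" form to an accent-free, lower-case surname plus first initial. Variants such as "Safavi-Naini, Rei", "Safavi-Naini, R." and "Safavi Naini, R" therefore fall into one group. The helper cannot catch changed surnames or misspellings, and it will merge different people who share a key, so each group still needs manual verification.

```python
import unicodedata
from collections import defaultdict


def name_key(name):
    """Hypothetical grouping key: accent-free, lower-case surname plus first initial.

    Expects "Surname, Given names" or "Surname, Initials".
    """
    surname, _, given = name.partition(",")
    # Decompose accented letters (e.g. "ï" -> "i" + combining diaeresis), then drop the marks.
    plain = unicodedata.normalize("NFKD", surname)
    plain = "".join(ch for ch in plain if not unicodedata.combining(ch))
    # Ignore case, hyphens, and spaces inside compound surnames.
    surname_part = "".join(ch for ch in plain.lower() if ch.isalnum())
    initial = given.strip()[:1].lower()
    return f"{surname_part}_{initial}"


def group_variants(names):
    """Group raw author strings that share the same key, preserving input order."""
    groups = defaultdict(list)
    for name in names:
        groups[name_key(name)].append(name)
    return dict(groups)


variants = [
    "Safavi-Naini, Rei",
    "Safavi-Naini, R.",
    "Safavi Naini, R",
    "Aïmeur, Esma",
    "Aimeur, E.",
]
for key, names in group_variants(variants).items():
    print(key, names)
```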

Third, the time available for a citation search may affect the quality of the data. In library and information practice, many requests for citation searches must be completed within a short time frame. In this study, identifying name variants, cleaning the raw data, purging duplicates, and recalculating citation counts were extremely time-consuming. Again, Web of Science presented more challenges than Scopus in this regard. When time is limited, Scopus is therefore suggested as the first choice for citation searches in computer science. Even with Scopus, however, there were cases in which the author search did not retrieve exactly the expected results, although the problem was less pronounced. Citation counts and h-index values may be of most importance, e.g., for tenure, promotion, and grant applications. In such cases there is no quick search, and the searcher needs to be given a reasonable time frame (Jacso 2011).

This study has a few limitations in addition to the general limitations of bibliometric analysis. First, because of time and resource constraints, the search for publications by individual researchers was limited to their current organization. The Web of Science results may therefore be deflated for authors who have worked at other organizations. Since the turnover rate of computer science faculty is about 3% (Cohoon et al. 2003), most faculty remain at their organization for long periods of time, and we believe the results still provide a reasonable snapshot of Web of Science search results. The vendor of Web of Science, Thomson Reuters, may wish to consider grouping works by the same author together, as this would improve the efficiency and accuracy of searches. Second, cited references can be identified in different ways in the two databases. In Web of Science, the default "Search" function was used, searching the "author" field and other fields when necessary, as described in the Methods section. Alternatively, one could use "Cited Reference Search" to find citations to a known publication. In Scopus, the "Author search" function was used; the "Document search" function was an alternative. Citation results obtained with these other methods could differ, even within the same database. Again, the "Author search" method was selected because of the study's time constraints. Third, the sample of 25 faculty is small, which may affect the reliability of the results.
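The argument that a turnover rate of about 3% leaves most faculty in place can be made concrete under an assumption the text does not state: that 3% is a constant annual rate, independent from year to year. The expected fraction $P_N$ of faculty still at the same institution after $N$ years is then:

$$
P_N = (1 - 0.03)^{N}, \qquad P_{10} = 0.97^{10} \approx 0.74, \qquad P_{20} = 0.97^{20} \approx 0.54
$$

Under this assumption, about three quarters of faculty would remain after a decade and about half after twenty years. Authors who did move can still be strongly affected, as the Safavi-Naini example illustrates.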

Conclusion

Measuring the research performance of a researcher, a department, an institution, or even a country has become a routine exercise in the academic environment. Two of the citation indicators available for such assessment are citation counts and the h-index. Librarians and information professionals taking on these projects need to know the characteristics of the available databases and data sources in order to make informed decisions. This study used Web of Science (with the Conference Proceedings Citation Indexes) and Scopus to explore differences in computer science in Canada. It compared coverage by document type, citation counts, h-index, and citation ranking. The results indicate that Scopus has better coverage of conference proceedings and journal articles in this field than Web of Science, even after the two conference proceedings databases, CPCI-S and CPCI-SSH, were added. Scopus also generated higher citation counts and h-index values, but citation rankings were similar in both databases. It is concluded that Scopus is the better choice for identifying publications, citations, and h-index values in computer science. If the purpose is to obtain a relative ranking, Web of Science could also be used. Further research is needed to assess the effect of aggregating the results from Web of Science and Scopus. The aggregated results should also be compared with other citation databases such as Google Scholar, CiteSeerX, and the ACM Digital Library.

Most importantly, whichever databases or data sources are used for bibliometric analysis, the main principles of bibliometrics should be followed (Weingart 2005):

- The search has to "be applied by professional people, trained to deal with the raw data".

- Bibliometric analysis should "only be used in accordance with the established principles of 'best practice' of professional bibliometrics (Van Raan 1996)."

- Bibliometric analysis "should only be applied in connection with qualitative peer review, preferably of the people and institution being evaluated." It is a support tool for peer review, which must remain the primary process for research assessment; bibliometric indicators help peer reviewers make informed decisions.

Acknowledgements

The author thanks Vicky Duncan and Jill Crowley-Low for valuable feedback.

References

Bar-Ilan, J. 2006. An ego-centric citation analysis of the works of Michael O. Rabin based on multiple citation indexes. Information Processing & Management 42(6):1553-66.

Bar-Ilan, J. 2010. Web of Science with the Conference Proceedings Citation Indexes: The case of computer science. Scientometrics 83(3):809-24.

Butler, L. 2007. Assessing university research: A plea for a balanced approach. Science and Public Policy 34(8):565-74.

Case, D.O. and Higgins, G.M. 2000. How can we investigate citation behavior? A study of reasons for citing literature in communication. Journal of the American Society for Information Science and Technology 51(7):635-45.

Cohoon, J.M., Shwalb, R., and Chen, L.-Y. 2003. Faculty turnover in CS departments. SIGCSE Bulletin 35(1):108-12.

Cole, S., Cole, J.R., and Simon, G.A. 1981. Chance and consensus in peer review. Science 214(4523):881-6.

Cronin, B. 2000. Semiotics and evaluative bibliometrics. Journal of Documentation 56(4):440-53.

De Sutter, B. and Van Den Oord, A. 2012. To be or not to be cited in computer science. Communications of the ACM 55(8):69-75.

Elsevier. Content Overview: Scopus. [Updated 2013]. [Internet]. [cited 2013 November 25]. Available from: http://www.elsevier.com/online-tools/scopus/content-overview

Feltes, C., Gibson, D.S., Miller, H., Norton, C., and Pollock, L. 2012. Envisioning the future of science libraries at academic research institutions. [Internet]. [cited 2013 September 15]. Available from: {https://repository.lib.fit.edu/handle/11141/10}

Franceschet, M. 2010. A comparison of bibliometric indicators for computer science scholars and journals on Web of Science and Google Scholar. Scientometrics 83(1):243-58.

Garfield, E. 1955. Citation indexes for science - new dimension in documentation through association of ideas. Science 122(3159):108-11.

Goodrum, A., McCain, K., Lawrence, S., and Giles, C. 2001. Scholarly publishing in the Internet age: A citation analysis of computer science literature. Information Processing & Management 37(5):661-75.

Hirsch, J. 2005. An index to quantify an individual's scientific research output. Proceedings of the National Academy of Sciences of the United States of America 102(46):16569-72.

Horrobin, D.F. 1990. The philosophical basis of peer review and the suppression of innovation. Journal of the American Medical Association 263(10):1438-41.

Jacso, P. 2008. The pros and cons of computing the h-index using Scopus. Online Information Review 32(4):524-35.

Jacso, P. 2009. Database source coverage: Hypes, vital signs and reality checks. Online Information Review 33(5):997-1007.

Jacso, P. 2010. Pragmatic issues in calculating and comparing the quantity and quality of research through rating and ranking of researchers based on peer reviews and bibliometric indicators from Web of Science, Scopus and Google Scholar. Online Information Review 34(6):972-82.

Jacso, P. 2011. The h-index, h-core citation rate and the bibliometric profile of the Scopus database. Online Information Review 35(3):492-501.

Meho, L.I. and Rogers, Y. 2008. Citation counting, citation ranking, and h-index of human-computer interaction researchers: A comparison of Scopus and Web of Science. Journal of the American Society for Information Science and Technology 59(11):1711-26.

Moed, H.F. 2007. The future of research evaluation rests with an intelligent combination of advanced metrics and transparent peer review. Science and Public Policy 34(8):575-83.

Moed, H.F. and Visser, M.S. 2007. Developing bibliometric indicators of research performance in computer science: An exploratory study. CWTS, Leiden. [Internet]. [cited 2013 August 25]. Available from: http://www.Cwts.nl/pdf/NWO_Inf_Final_Report_V_210207.Pdf

Smith, R. 1988. Problems with peer review and alternatives. British Medical Journal 296(6624):774-7.

University Rankings. [Updated 2013]. [Internet]. Maclean's. [cited 2013 May 02]. Available from: http://oncampus.macleans.ca/education/rankings/

Lisée, C., Larivière, V., and Archambault, É. 2008. Conference proceedings as a source of scientific information: A bibliometric analysis. Journal of the American Society for Information Science and Technology 59(11):1776-84.

Van Raan, A.F.J. 1996. Advanced bibliometric methods as quantitative core of peer review based evaluation and foresight exercises. Scientometrics 36(3):397-420.

Van Raan, A.F.J. 2005. Fatal attraction: Conceptual and methodological problems in the ranking of universities by bibliometric methods. Scientometrics 62(1):133-43.

Wainer, J., Przibisczki De Oliveira, H., and Anido, R. 2010. Patterns of bibliographic references in the ACM published papers. Information Processing & Management 47(1):135-42.

Wainer, J., Billa, C. and Goldenstein, S. 2011. Invisible work in standard bibliometric evaluation of computer science. Communications of the ACM 54(5):141-6.

Wainer, J., Eckmann, M., Goldenstein, S., and Rocha, A. 2013. How productivity and impact differ across computer science subareas: How to understand evaluation criteria for CS researchers. Communications of the ACM 56(8):67-73.

Weingart, P. 2005. Impact of bibliometrics upon the science system: Inadvertent consequences? Scientometrics 62(1):117-31.

Zhao, D. and Logan, E. 2002. Citation analysis using scientific publications on the web as data source: A case study in the XML research area. Scientometrics 54(3):449-72.


This work is licensed under a Creative Commons Attribution 4.0 International License.